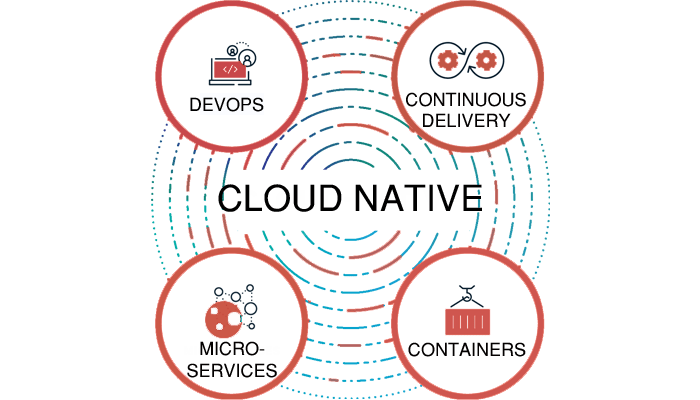

In our introductory and unboxing video, we mentioned that the NVIDIA Jetson Xavier NX is Cloud Native. The term “Cloud Native” may not be familiar to many, as it can be considered quite a new paradigm of software development and deployment. What does it really mean to be Cloud Native? In particular, for a piece of hardware, how does being Cloud Native help software developers with their work? In this article, we will discuss what it takes to be Cloud Native, and how the NVIDIA Jetson Xavier NX uses this characteristic to its advantage.

Cloud Native – What is it?

If you search online for “definition of Cloud Native”, you probably won’t get an obvious or direct answer. I would like to believe this is because “Cloud Native” is yet another marketing term: a way for marketers to complicate a simple concept so that people believe their products are superior. There is some truth to that claim, but let’s put it aside for now.

If I had the chance to define “What is a Cloud Native Software” for the layman, it would be:

A deployed, virtualized and customized piece of software that is built by configuring multiple small, fully functioning pieces of software on the Cloud.

The definition may be oversimplified, but that’s probably all there is to it. Our definition above contains a few key points. Let’s list them in logical order:

(1) Small, fully functioning software – The building blocks of your final product

(2) Configuration – Putting the building blocks together

(3) Deployed Customized Software – Final Product

(1) Choosing your required sub-systems

Let me explain with an example. Let’s say that you plan to develop a new website using WordPress. The typical requirements of a WordPress installation are as follows:

(a) Web Server – Either Apache or Nginx

(b) Database – MySQL Server

(c) PHP version 7.3 or greater.

The traditional way of getting all of these in place is to first download each component from its respective website, install it on the system that you intend to run it on, and then configure WordPress to run on these sub-systems.

Notice that each sub-system is a fully functioning piece of software by itself. WordPress simply uses the features of each requirement to do its own work and provide an actual application to users. This is a major characteristic of Cloud Native Software: it is made up of multiple smaller pieces of software that together achieve a larger goal, in this case a fully functioning web application.

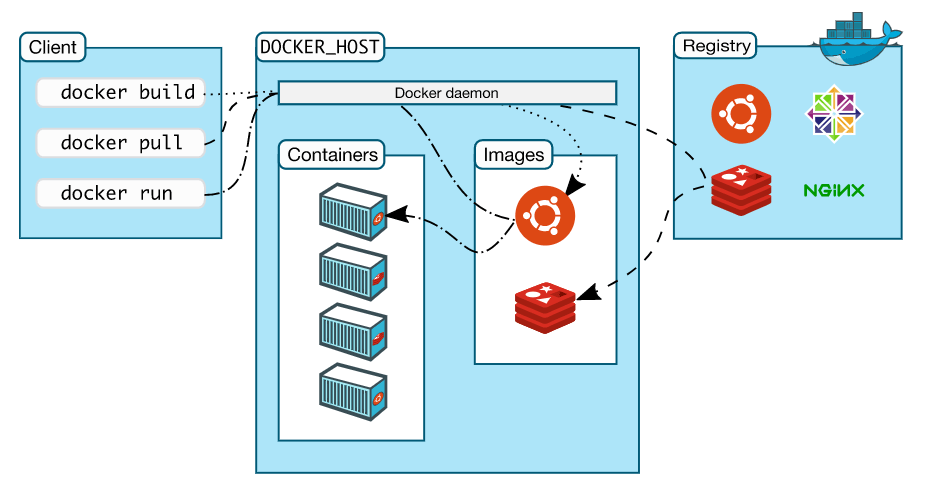

To make things even simpler, there usually isn’t a need to download each sub-system from its own site. Popular sub-systems are usually already hosted in a single repository, and retrieving one is as simple as looking up the name under which it is registered in that repository. These pieces of software are known as images and, as the name suggests, they are actual copies of the working software that you would install on a typical machine.
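As a rough illustration of retrieving software by name, the Python sketch below builds the `docker pull` commands for the three sub-systems in the WordPress example. The helper names `image_reference` and `pull_command` are hypothetical, and the image names are assumed to be the ones published on Docker Hub. The script only prints the commands; it does not run them.

```python
from __future__ import annotations


def image_reference(name: str, tag: str = "latest") -> str:
    """Return the 'name:tag' string used to look an image up in a repository."""
    return f"{name}:{tag}"


def pull_command(name: str, tag: str = "latest") -> list[str]:
    """Build the argument list for `docker pull`; nothing is executed here."""
    return ["docker", "pull", image_reference(name, tag)]


# Web server, database and PHP from the WordPress example.
# Assumption: these are the image names registered on Docker Hub.
for image in ("nginx", "mysql", "php"):
    print(" ".join(pull_command(image)))
```

Each printed line could be pasted into a terminal on a machine that has Docker installed.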

(2) Configuring sub-systems to work together – Containerization

Of course, the next step is to put these smaller pieces of fully functioning software together, so that you can finally run your application. This is where containerization comes in.

“A container is a standard unit of software that packages up code and all its dependencies so that the application runs quickly and reliably from one computing environment to another.” (Definition of a container by Docker)

While the aforementioned images are static packages of software and code, a container is a running instance of an image. Containers are then executed and run together with various configurations. In fact, going back to our earlier example of building a WordPress application, retrieving a WordPress image from the repository also retrieves the dependencies packaged with it, as the WordPress image has already been configured in that way.

Instead of painstakingly installing each sub-system one by one, you have shortened the whole process to a single line of code.

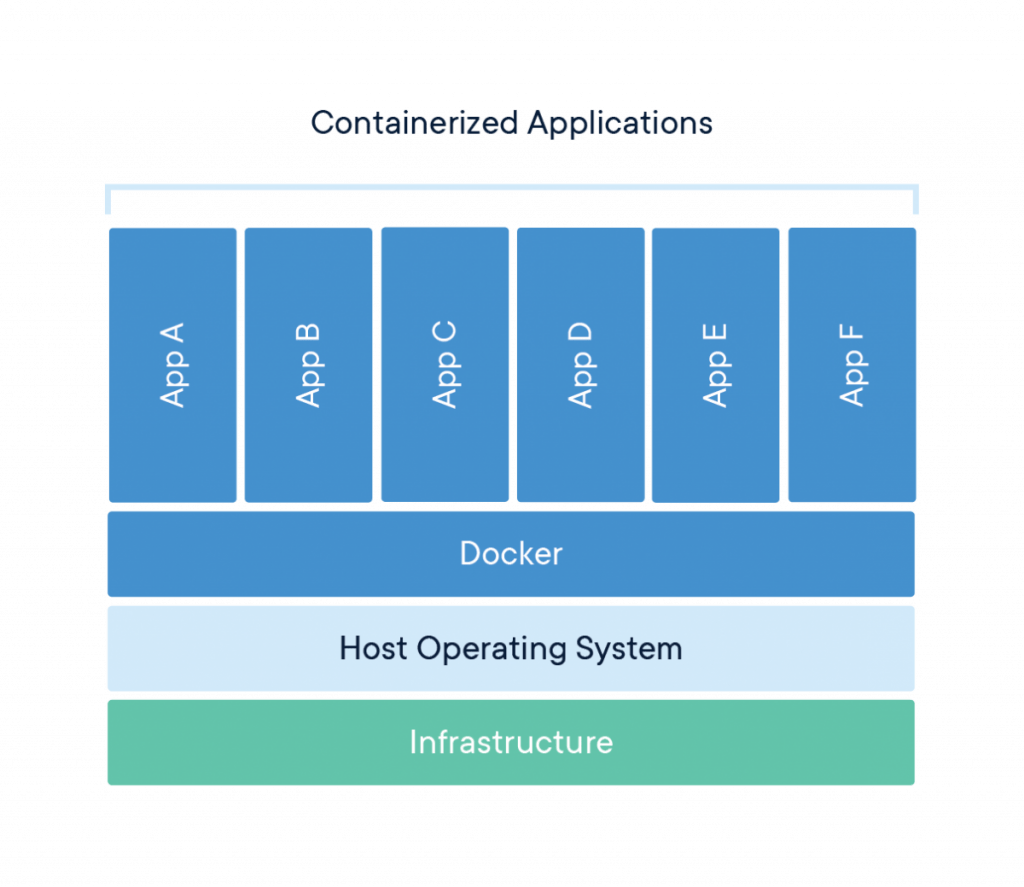

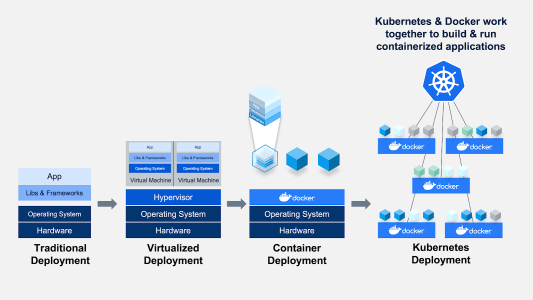

The retrieved images are then placed in a Virtualized Environment, which can then communicate with the underlying hardware. Once you have the containers running, the whole system is in fact already good to go.
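To make the configuration step more concrete, here is a minimal Python sketch that assembles the `docker run` commands for a WordPress container and a MySQL container on a shared network. It assumes the database runs in its own container. The container names, network name and password are hypothetical, and the environment variable names are assumed to be those documented for the official `wordpress` and `mysql` images. Nothing is executed; the commands are only printed.

```python
from __future__ import annotations


def run_command(name: str, image: str, network: str,
                env: dict[str, str] | None = None,
                ports: dict[int, int] | None = None) -> list[str]:
    """Build a `docker run` argument list for one detached container."""
    cmd = ["docker", "run", "-d", "--name", name, "--network", network]
    for key, value in (env or {}).items():
        cmd += ["-e", f"{key}={value}"]  # configuration passed as environment
    for host_port, container_port in (ports or {}).items():
        cmd += ["-p", f"{host_port}:{container_port}"]  # expose to the host
    return cmd + [image]


NETWORK = "wp-net"  # hypothetical network name

commands = [
    ["docker", "network", "create", NETWORK],
    run_command("wp-db", "mysql", NETWORK, env={
        "MYSQL_ROOT_PASSWORD": "change-me",  # placeholder, not a real secret
        "MYSQL_DATABASE": "wordpress",
    }),
    run_command("wp-site", "wordpress", NETWORK, env={
        "WORDPRESS_DB_HOST": "wp-db",  # containers find each other by name
        "WORDPRESS_DB_USER": "root",
        "WORDPRESS_DB_PASSWORD": "change-me",
        "WORDPRESS_DB_NAME": "wordpress",
    }, ports={8080: 80}),
]

for cmd in commands:
    print(" ".join(cmd))
```

Keeping the configuration in plain data like this is one way to see that the "building blocks" are only wired together, not modified.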

(3) Deployment

Deploying containers in their virtualized environments can be more complex than doing things the traditional way, as the hardware is also virtualized. Thankfully, there are tools that make containers easier to deploy.

These tools are usually known as Container Orchestrators. When it comes to deployment, Container Orchestrators can help to control and automate many tasks, such as:

- Provisioning and deployment of containers

- Redundancy and availability of containers

- Scaling up or removing containers to spread application load evenly across host infrastructure

- Movement of containers from one host to another if there is a shortage of resources in a host, or if a host dies

- Allocation of resources between containers

- External exposure of services running in a container with the outside world

- Load balancing of service discovery between containers

- Health monitoring of containers and hosts

- Configuration of an application in relation to the containers running it

Source: New Relic – Container Orchestration
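Several of the tasks listed above (health monitoring, scaling up and scaling down) boil down to comparing a desired state with the observed state. The following is a toy Python model of that idea, not the API of any real orchestrator. The `Container` class and `reconcile` function are hypothetical names used only for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Container:
    name: str
    healthy: bool


def reconcile(desired: int, running: list[Container]) -> list[str]:
    """Return the actions needed to reach `desired` healthy containers."""
    actions = []
    healthy = [c for c in running if c.healthy]
    # Health monitoring: unhealthy containers are removed so they can be replaced.
    for c in running:
        if not c.healthy:
            actions.append(f"remove {c.name}")
    # Scaling up: start containers until the desired count is reached.
    for _ in range(desired - len(healthy)):
        actions.append("start new container")
    # Scaling down: remove surplus healthy containers.
    for c in healthy[desired:]:
        actions.append(f"remove {c.name}")
    return actions


observed = [Container("web-1", True), Container("web-2", False)]
for action in reconcile(3, observed):
    print(action)
```

A real orchestrator runs a loop like this continuously and also decides which host each new container should land on.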

With Container Orchestration in place, your application will be ready for the world. It should be able to perform reliably and scale according to dynamic requirements.

Benefits of Cloud Native Applications

- Virtualization – Because Cloud Native Applications run in a virtualized environment, such as Docker, they can be easily deployed across different systems and machines without much effort. The hardware layer is kept abstract, and applications access it through virtualized hardware. In other words, virtualization is what makes Cloud Native Applications portable across systems.

- Product Lifecycle – Containerization makes system deployments a lot easier. When it comes to Continuous Integration, i.e. pushing out new updates to your system, new software images can be built and pushed directly to the repository. Containers can simply be updated with the new images and enjoy updated software. Docker works with third-party tools such as Travis, Jenkins and Wercker to simplify and streamline Continuous Integration.

- Ease of Installation – Cloud Native Applications use different software images to build a larger working system. This gives developers the flexibility to pick well-established software systems, configure them, and use them with little trouble. With virtualization, the same set of code can then be deployed to any operating system, as the application environment is no longer tied to a single infrastructure.

- Ease of Deployment – Containerization allows images to be deployed into containers within seconds. As each container can be spun up without needing its own operating system, the time to get individual systems up is greatly reduced compared to traditional deployment methods.

- Security – Each container is separated and isolated from the others within the system. This allows control over how data is managed across different containers.

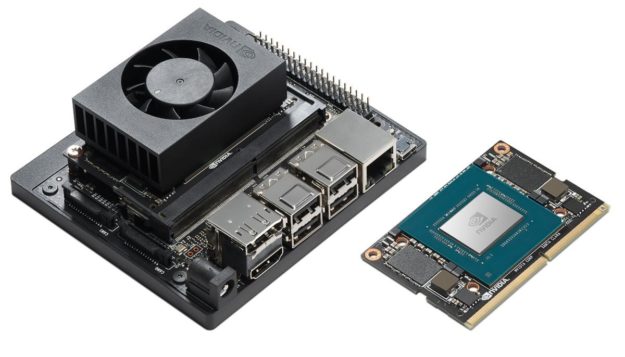

Bringing Cloud Native Characteristics to Edge Devices – NVIDIA Jetson Xavier NX

We’ve talked so much about being Cloud Native, but it seems like all of these advances only happen on the Cloud. What does Cloud Native have to do with the NVIDIA Jetson Xavier NX, which is in fact an edge device and does not live in the cloud?

This is where we have to thank hardware virtualization. As hardware is virtualized, it does not matter if the platform you are using is on the cloud, an individual PC, a single-board computer or an edge device such as the NVIDIA Jetson Xavier NX. The containers deployed on any of these platforms simply work.

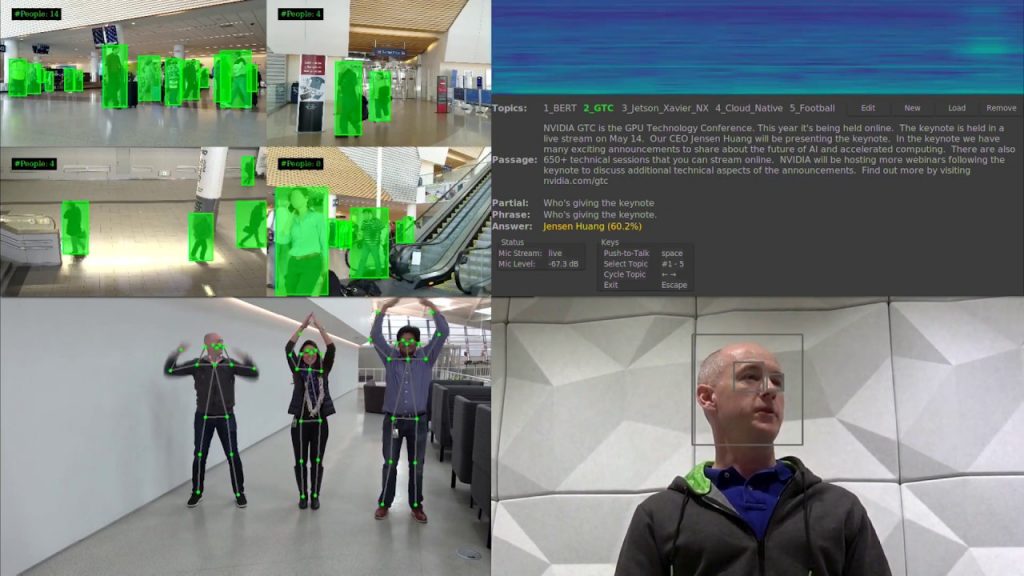

Specifically, to ensure that containers running AI applications that require hardware-level acceleration can also access the underlying hardware, NVIDIA has developed the NVIDIA container runtime for Docker. It’s like a software driver for containers.

As you know, NVIDIA GPUs and hardware are widely used for AI application development and deployment today. The NVIDIA container runtime essentially brings Cloud Native support to all systems that have a GPU product that NVIDIA supports. This changes the whole paradigm of machine learning development.

Just as containerization reduced a WordPress installation from multiple steps to a single line of code, the NVIDIA container runtime brings that same level of simplicity to deploying AI applications on edge devices.
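As a hedged sketch of what that single line might look like, the Python snippet below builds a `docker run` command that selects the NVIDIA runtime through Docker's `--runtime` option. The image name `my-ai-app:latest`, the script name `infer.py` and the helper `gpu_run_command` are hypothetical placeholders. The exact invocation on a given Jetson setup may differ.

```python
from __future__ import annotations


def gpu_run_command(image: str, *args: str) -> list[str]:
    """Build a `docker run` argument list that uses the NVIDIA container runtime."""
    # `--runtime nvidia` asks Docker to start the container with the NVIDIA
    # runtime, which is what gives it access to the GPU (assumes the runtime
    # is installed and registered with Docker on the device).
    return ["docker", "run", "--rm", "--runtime", "nvidia", image, *args]


# Hypothetical image and script names, for illustration only.
print(" ".join(gpu_run_command("my-ai-app:latest", "python3", "infer.py")))
```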

In our next article, we will demonstrate how we used the NVIDIA Jetson Xavier NX to deploy multiple AI applications.