At AMD’s “Zen 5” Tech Day held last week, Chris Hall and Nick Ni from AMD shared with us how to evaluate AI PCs and, in particular, how AMD Ryzen AI PCs are able to meet the requirements of today’s AI workloads. We also saw demos of AI workloads being accelerated simultaneously on AMD Ryzen AI 300 series PCs.

Evaluating AI PCs

AMD breaks the AI PC experience down into four dimensions: (1) Performance, (2) AI Accuracy, (3) Memory Footprint and (4) Power Consumption.

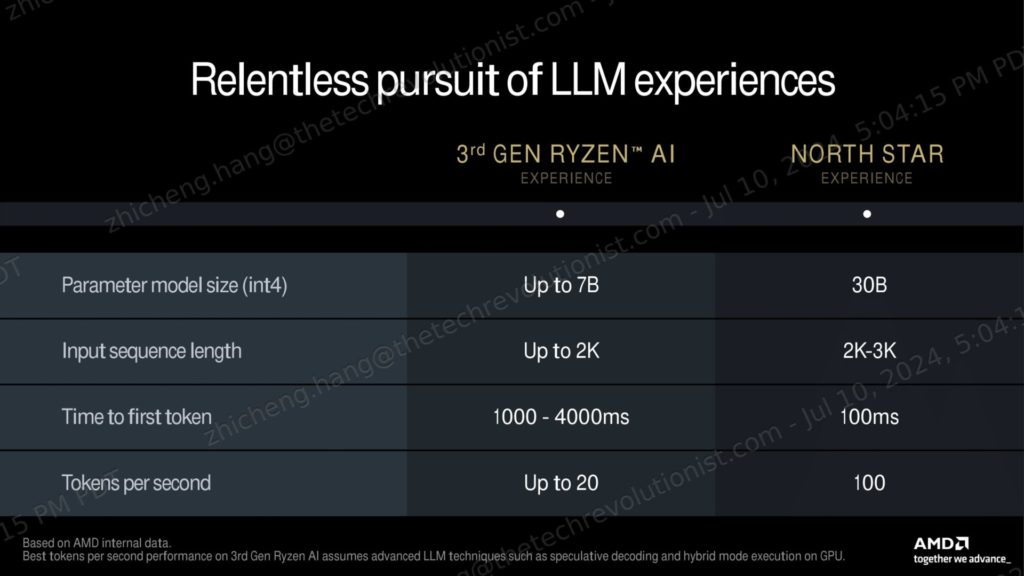

For the Performance dimension, when evaluating Large Language Models (LLMs), there are two key metrics to look out for: Time to First Token, a measure of responsiveness, and Tokens per Second, a measure of throughput. Other considerations come into play as well. One key concern today is that there is no standardized way to measure such performance.
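
To make the two metrics concrete, here is a minimal Python sketch that times a streamed LLM response. It assumes the model exposes its output as an iterator of tokens; `measure_generation`, `GenerationMetrics` and the simulated `fake_stream` are hypothetical names, not part of any AMD tool. It also picks just one of several possible conventions for Tokens per Second (excluding the first token, whose latency already counts toward Time to First Token), which shows why the lack of a standard measure matters: two tools can report different numbers for the same run.

```python
import time
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass
class GenerationMetrics:
    time_to_first_token_s: float  # responsiveness: request start -> first token
    tokens_per_second: float      # throughput: tokens generated after the first one
    total_tokens: int


def measure_generation(
    token_stream: Iterable[str],
    clock: Callable[[], float] = time.perf_counter,
) -> GenerationMetrics:
    """Consume a streamed LLM response and report TTFT and tokens/s.

    `token_stream` stands in for any streaming LLM API (hypothetical);
    the timer starts just before the first token is requested.
    """
    start = clock()
    first_token_at: Optional[float] = None
    last_token_at = start
    count = 0
    for _ in token_stream:
        now = clock()
        if first_token_at is None:
            first_token_at = now
        last_token_at = now
        count += 1
    if first_token_at is None:
        raise ValueError("stream produced no tokens")
    ttft = first_token_at - start
    # One common convention (assumed here): exclude the first token and divide
    # the remaining tokens by the time it took to generate them.
    decode_time = last_token_at - first_token_at
    tps = (count - 1) / decode_time if count > 1 and decode_time > 0 else 0.0
    return GenerationMetrics(ttft, tps, count)


if __name__ == "__main__":
    # Simulated stream: ~50 ms of "prompt processing", then ~10 ms per token.
    def fake_stream(n_tokens: int):
        time.sleep(0.05)
        for i in range(n_tokens):
            if i:
                time.sleep(0.01)
            yield f"tok{i}"

    m = measure_generation(fake_stream(20))
    print(f"TTFT: {m.time_to_first_token_s:.3f} s, "
          f"throughput: {m.tokens_per_second:.1f} tokens/s")
```

Passing a scripted clock instead of `time.perf_counter` makes the arithmetic easy to check: with a start time of 0.0 s and four tokens arriving at 0.5, 0.6, 0.7 and 0.8 s, Time to First Token is 0.5 s and throughput is 3 tokens / 0.3 s, or about 10 tokens per second.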

A Balancing Act

Building AI applications on today’s AI PCs requires a careful balance between these dimensions to provide the best possible experience to consumers. Ultimately, it comes down to user expectations and what matters most to them in a specific AI use case and scenario. For example, if we ask an LLM a question, we want it to reply accurately rather than make things up, so AI Accuracy becomes the most important dimension. However, this comes at the expense of performance: the additional context we provide to the LLM to improve its accuracy takes up more memory and also results in slower responsiveness and lower throughput.
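
The memory cost of extra context can be made concrete. In transformer-based LLMs, one context-dependent contributor to memory (alongside fixed costs such as the model weights) is the key/value cache, which grows linearly with the number of tokens in the context. The sketch below assumes a standard decoder-only transformer; the `kv_cache_bytes` function and the model shape are hypothetical illustrations, not figures from AMD.

```python
def kv_cache_bytes(
    context_tokens: int,
    num_layers: int,
    num_kv_heads: int,
    head_dim: int,
    bytes_per_value: int = 2,  # 2 bytes per value, e.g. FP16/BF16
) -> int:
    """Approximate key/value cache size for a decoder-only transformer.

    The leading 2 accounts for storing one key and one value vector
    per layer, per KV head, per token.
    """
    return 2 * num_layers * num_kv_heads * head_dim * context_tokens * bytes_per_value


if __name__ == "__main__":
    # Hypothetical model shape, not an AMD or vendor-specific figure.
    config = dict(num_layers=32, num_kv_heads=8, head_dim=128)
    for tokens in (512, 4096, 32768):
        mib = kv_cache_bytes(tokens, **config) / 2**20
        print(f"{tokens:>6} tokens of context -> {mib:,.0f} MiB of KV cache")
```

With this hypothetical shape, each token costs 128 KiB of cache, so growing the context from 512 to 4,096 tokens grows the cache from 64 MiB to 512 MiB. The larger context also means more data to read on every generated token, which is one reason responsiveness and throughput drop as accuracy-oriented context is added.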

AMD is working to bring an even better AI PC experience to users. The company is developing its next-generation Ryzen AI “North Star” product, which will deliver even higher AI performance to overcome the limitations of today’s 3rd Gen Ryzen AI.