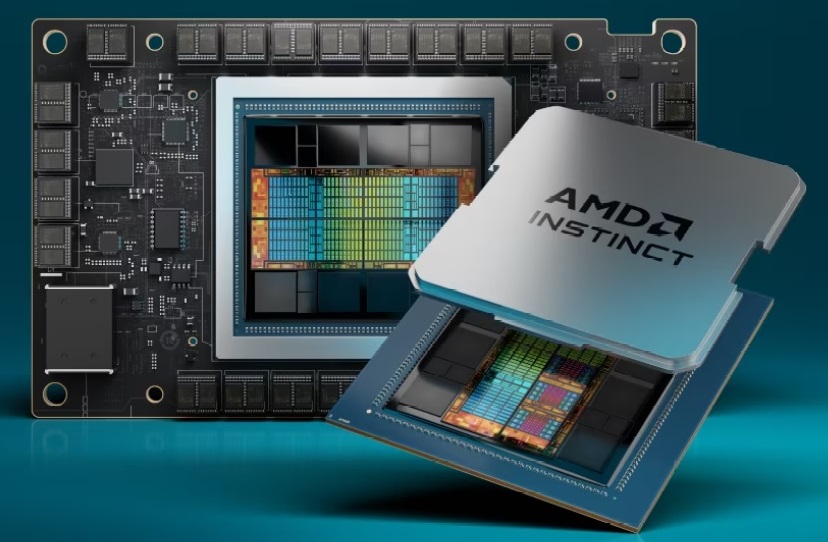

AMD is wrapping up the year with a simple message, “We want AI everywhere, from top to bottom”, backed by the release of the industrial-grade Instinct MI300 series AI accelerators and the consumer-level Ryzen 8040 mobile APUs.

Let’s start with the Instinct MI300. As Team Red’s answer to the exponential growth in generative AI and LLM demand, it comes in 3 distinct “models”.

For starters, the MI300A is actually an APU that packs 24 “Zen 4” CPU cores and 228 GPU CUs into a single package. Capable of outperforming the previous MI250X by up to 1.9x, the MI300A is a natural fit for combined HPC and AI workloads, since it handles both at the same time while cashing in on an energy-efficient design.

Hosting both general computing and acceleration on unified memory and cache resources means everything can be managed with more control and used more effectively.

Next up, the MI300X has none of the APU business and is simply an accelerator with 304 CUs, but it hosts 64GB more HBM3 memory than the MI300A (192GB versus 128GB), which comes in handy for things like LLM training. It also supports FP8 and sparsity math formats for better “calculation-based” models.

Lastly, AMD is also introducing the Instinct Platform, which combines the power of 8x MI300X into a single “rack” or “system” (whatever you want to call it). Its reference design is accessible to OEM partners so they can build their own offerings and streamline the adoption of AMD Instinct accelerator-based servers.

The specs of the Instinct MI300 family are as follows.

| AMD Instinct | Architecture | GPU CUs | CPU Cores | Memory | Memory Bandwidth (Peak theoretical) | Process Node | 3D Packaging w/ 4th Gen AMD Infinity Architecture |
| --- | --- | --- | --- | --- | --- | --- | --- |
| MI300A | AMD CDNA™ 3 | 228 | 24 “Zen 4” | 128GB HBM3 | 5.3 TB/s | 5nm / 6nm | Yes |
| MI300X | AMD CDNA™ 3 | 304 | N/A | 192GB HBM3 | 5.3 TB/s | 5nm / 6nm | Yes |
| Platform | AMD CDNA™ 3 | 2,432 | N/A | 1.5 TB HBM3 | 5.3 TB/s per OAM | 5nm / 6nm | Yes |
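The Platform row is just the 8x MI300X configuration added up. As a quick sanity check, here is a minimal sketch of that arithmetic; the constant and function names are made up for illustration, and it assumes 1 TB = 1024 GB, which is what makes the total line up with the quoted 1.5 TB.

```python
# Per-accelerator MI300X figures as quoted in the article's spec table.
MI300X_CUS = 304
MI300X_HBM3_GB = 192
ACCELERATORS_PER_PLATFORM = 8  # "8x MI300X" per Instinct Platform


def platform_totals(count=ACCELERATORS_PER_PLATFORM,
                    cus=MI300X_CUS,
                    mem_gb=MI300X_HBM3_GB):
    """Return (total CUs, total memory in GB, total memory in TB)."""
    total_cus = count * cus
    total_mem_gb = count * mem_gb
    # Assumption: 1 TB = 1024 GB, matching the "1.5 TB" platform figure.
    total_mem_tb = total_mem_gb / 1024
    return total_cus, total_mem_gb, total_mem_tb


if __name__ == "__main__":
    cus, mem_gb, mem_tb = platform_totals()
    print(f"GPU CUs: {cus:,}")
    print(f"HBM3: {mem_gb} GB ({mem_tb} TB)")
```

Memory bandwidth is not summed the same way in the table, since it is quoted per OAM rather than for the whole platform.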

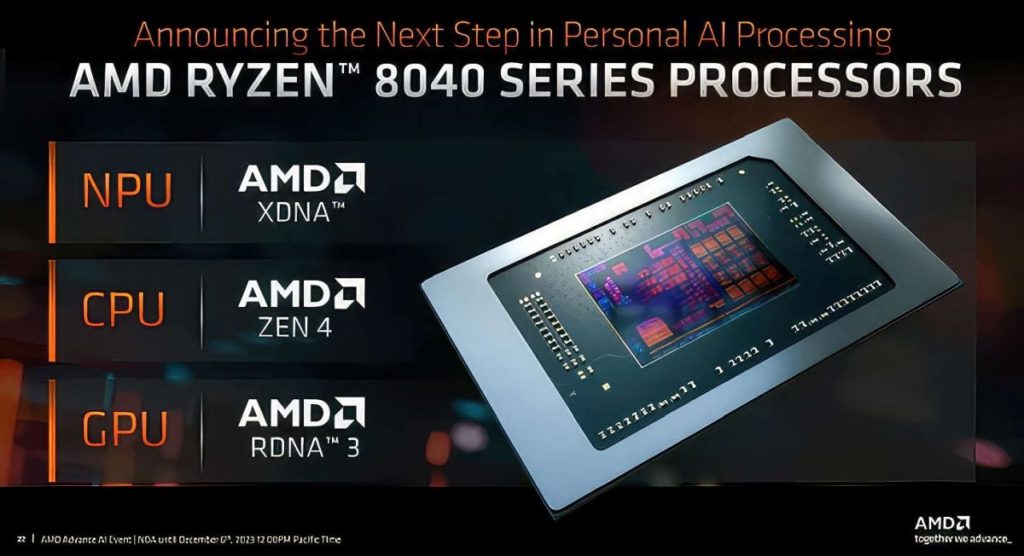

On the other hand, AMD is also gunning for “AI for everyone” in the form of the new Ryzen 8040 series mobile APUs, which not only come with the usual CPU+GPU on a single die but also add an NPU that helps accelerate all sorts of AI-related workloads.

Of the 9 new SKUs introduced to the market, only 7 house the new NPU, while the duo at the very bottom of the tier list doesn’t get the same treatment.

This new on-die AI-dedicated hardware promises to deliver up to 1.6x more processing capability than prior offerings, while the “Zen 4” CPU cores and “RDNA 3” GPU continue to provide high value and balanced performance that covers a lot of ground, from daytime work to nighttime gaming.

These new chips are also Windows 11 compatible out of the box and support things like on-the-go background blur, noise cancellation, eye tracking, and more.

Here’s a list of the SKUs mentioned.

| Model | Cores / Threads | Boost / Base Frequency | Total Cache | TDP | NPU |
| --- | --- | --- | --- | --- | --- |
| Ryzen 9 8945HS | 8C/16T | Up to 5.2 GHz / 4.0 GHz | 24MB | 45W | Yes |
| Ryzen 7 8845HS | 8C/16T | Up to 5.1 GHz / 3.8 GHz | 24MB | 45W | Yes |
| Ryzen 7 8840HS | 8C/16T | Up to 5.1 GHz / 3.3 GHz | 24MB | 28W | Yes |
| Ryzen 7 8840U | 8C/16T | Up to 5.1 GHz / 3.3 GHz | 24MB | 28W | Yes |
| Ryzen 5 8645HS | 6C/12T | Up to 5.0 GHz / 4.3 GHz | 22MB | 45W | Yes |
| Ryzen 5 8640HS | 6C/12T | Up to 4.9 GHz / 3.5 GHz | 22MB | 28W | Yes |
| Ryzen 5 8640U | 6C/12T | Up to 4.9 GHz / 3.5 GHz | 22MB | 28W | Yes |
| Ryzen 5 8540U | 6C/12T | Up to 4.9 GHz / 3.2 GHz | 22MB | 28W | No |
| Ryzen 3 8440U | 4C/8T | Up to 4.7 GHz / 3.0 GHz | 12MB | 28W | No |