First processors based on ARM DynamIQ technology take a big step towards boosting AI performance by more than 50x over the next 3-5 years

- ARM Cortex-A75 delivers massive single-thread compute uplift for premium performance points
- ARM Cortex-A55 is the world’s most versatile high-efficiency processor
- ARM Mali-G72 GPU expands VR, gaming, and Machine Learning capabilities on premium mobile devices with 40% more performance
- New supporting IP includes ARM Compute Library, a comprehensive suite of developer tools, and POP IP

Artificial intelligence (AI) is already simplifying and transforming many of our lives, and it seems that every day I read about or see proofs of concept for potentially life-saving AI innovations. However, replicating the learning and decision-making functions of the human brain starts with algorithms that often require cloud-intensive compute power. Unfortunately, a cloud-centric approach is not an optimal long-term solution if we want to make the life-changing potential of AI ubiquitous and bring it closer to the user for real-time inference and greater privacy. In fact, survey data we will share in the coming weeks shows that 85 percent of global consumers are concerned about securing AI technology, a key indicator that more processing and storage of personal data on edge devices is needed to instill greater confidence in AI privacy.

Enabling secure and ubiquitous AI is a fundamental guiding design principle for ARM, considering that our technologies currently reach 70 percent of the global population. As such, ARM has a responsibility to rearchitect the compute experience for AI and other human-like compute experiences. To do this, we need to enable faster, more efficient, and secure distributed intelligence, from computing at the edge of the network into the cloud.

ARM DynamIQ technology, which we initially previewed back in March, was the first milestone on the path to distributing intelligence from chip to cloud. Today we hit another key milestone, launching our first products based on DynamIQ technology, the ARM Cortex-A75 and Cortex-A55 processors. Both processors include:

• Dedicated instructions for AI performance tasks via DynamIQ technology, setting ARM on a trajectory to deliver 50x AI performance increases over the next 3-5 years
• Increased multi-core functionality and flexibility in a single compute cluster with DynamIQ big.LITTLE
• The secure foundation for billions of devices, ARM TrustZone technology, to fortify the SoC in edge devices
• Increased functional safety capabilities for ADAS and autonomous driving

To further optimize SoCs for distributed intelligence and device-based Machine Learning (ML), we are also launching the latest premium version of the world’s No. 1 shipping GPU, the Mali-G72. The new Mali-G72 graphics processor, based on the Bifrost architecture, is designed for the new and demanding use cases of on-device ML, as well as High Fidelity mobile gaming and mobile VR.

Cortex-A75: Breakthrough single-threaded performance

I have been at ARM for more than a dozen years and can’t remember being this excited about a product delivering such a boost to single-threaded performance without compromising our efficiency leadership. The Cortex-A75 delivers a massive 50 percent uplift in performance and greater multicore capabilities, enabling our partners to address high-performance use cases including laptops, networking, and servers, all within a smartphone power profile. Additional performance data and a deep dive on technical features can be found in this blog from Stefan Rosinger.

Cortex-A55: The new industry leader in high-efficiency processing

SoCs based on Cortex-A53 came to market in 2013, and since then ARM partners have shipped a staggering 1.5 billion units, with that volume continuing to grow rapidly. That’s an extremely high bar for any follow-on product to surpass. Yet the Cortex-A55 is not your typical follow-on product. With dedicated AI instructions and up to 2.5x the performance-per-milliwatt efficiency of today’s Cortex-A53-based devices, the Cortex-A55 is the world’s most versatile high-efficiency processor. For more performance data and technical details, visit this blog from Govind Wathan.

Flexible big.LITTLE performance for more everyday devices

When distributing intelligence from the edge to the cloud, there is a diverse spectrum of compute needs to consider. DynamIQ big.LITTLE provides more multi-core flexibility across more tiers of performance and user experiences by enabling, for the first time, the configuration of big and LITTLE processors on a single compute cluster.
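To make the single-cluster idea concrete, here is a toy model of one cluster that mixes big and LITTLE cores. It uses a greedy placement policy that puts each task on the lowest-power free core able to meet its demand. The core capacities and power figures are hypothetical placeholders, and the eight-core cluster limit is our assumption. The policy is a simplified illustration of capacity-aware scheduling, not how any ARM or operating-system scheduler actually works.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CoreType:
    name: str
    capacity: float  # hypothetical throughput relative to one big core (= 1.0)
    power_mw: float  # hypothetical active power in milliwatts


# Placeholder numbers for illustration only; not published ARM figures.
BIG = CoreType("big", capacity=1.0, power_mw=750.0)
LITTLE = CoreType("LITTLE", capacity=0.4, power_mw=100.0)

MAX_CORES_PER_CLUSTER = 8  # assumption: cluster size limit for this toy model


def make_cluster(n_big: int, n_little: int) -> list:
    """Build one cluster that mixes big and LITTLE cores."""
    total = n_big + n_little
    if n_big < 0 or n_little < 0 or not 0 < total <= MAX_CORES_PER_CLUSTER:
        raise ValueError(f"need 1..{MAX_CORES_PER_CLUSTER} cores, got {n_big}+{n_little}")
    return [BIG] * n_big + [LITTLE] * n_little


def place(tasks: dict, cluster: list) -> dict:
    """Assign each task (demand in big-core units) to one free core.

    The heaviest tasks are placed first. Each goes to the lowest-power free core
    whose capacity covers its demand. If no such core exists, it goes to the
    most capable free core.
    """
    if len(tasks) > len(cluster):
        raise ValueError("toy model: at most one task per core")
    free = list(range(len(cluster)))
    placement = {}
    for task, demand in sorted(tasks.items(), key=lambda kv: kv[1], reverse=True):
        fitting = [i for i in free if cluster[i].capacity >= demand]
        if fitting:
            chosen = min(fitting, key=lambda i: cluster[i].power_mw)
        else:
            chosen = max(free, key=lambda i: cluster[i].capacity)
        free.remove(chosen)
        placement[task] = cluster[chosen].name
    return placement


if __name__ == "__main__":
    cluster = make_cluster(n_big=1, n_little=3)
    print(place({"ui": 0.9, "sync": 0.2, "audio": 0.1}, cluster))
```

With one big and three LITTLE cores, the demanding `ui` task lands on the big core and the light tasks go to LITTLE cores. Changing the arguments to `make_cluster` lets this toy model try different big/LITTLE mixes within a single cluster.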

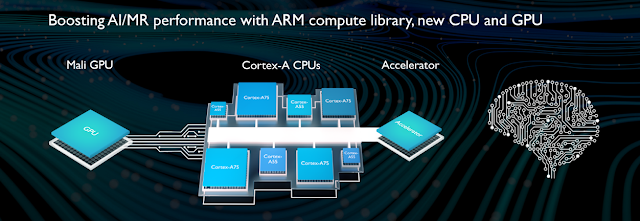

The flexibility of DynamIQ big.LITTLE is at the heart of the system-level approach that distributed intelligence requires. Flexible CPU clusters, GPU compute technology, dedicated accelerators, and the new ARM Compute Library work together to efficiently enhance and scale AI performance. The free, open-source ARM Compute Library is a collection of low-level software functions optimized for Cortex CPU and Mali GPU architectures. It is just the latest example of ARM’s commitment to investing more in software to get the most performance out of hardware without compromising efficiency. On the CPU alone, ARM Compute Library can boost the performance of AI and ML workloads by 10x-15x on both new and existing ARM-based SoCs.

Mali-G72: Optimized for next-generation, real-world content

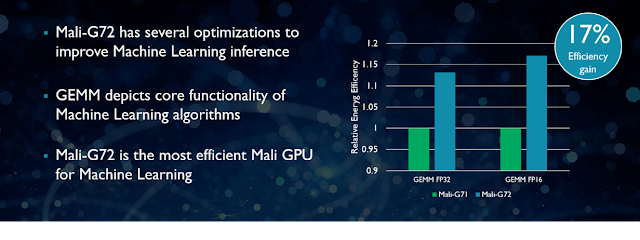

Our system-level approach enables innovation across multiple blocks of compute IP, including the GPU. The Mali-G72 GPU builds on the success of its predecessor, the Mali-G71. Enhancements to the Bifrost architecture boost performance by up to 40 percent in the Mali-G72, enabling our partners to advance the mobile VR experience and push High Fidelity mobile gaming into the next realm. We have also designed the Mali-G72 to deliver the most efficient and performant ML, thanks to arithmetic optimizations and larger caches that reduce bandwidth, for a 17 percent ML efficiency gain.

With 25 percent higher energy efficiency, 20 percent better performance density, and the new machine learning optimizations, ARM can distribute intelligence more efficiently across the SoC. To read additional technical details on the Mali-G72, visit this blog by Freddi Jeffries.

Distributed intelligence starts here

Today we’ve announced the next generation of CPU and GPU IP engines designed to power the most advanced compute. The image below represents the most optimized ARM-based SoC for your edge device: a full suite of compute, media, display, security, and system IP designed and validated together to deliver the highest-performing and most efficient mobile compute experience. This suite of IP is supported by the new System Guidance for Mobile (SGM-775), which includes everything from SoC architecture to detailed pre-silicon analysis documentation, models, and software, all available for free to ARM partners. For accelerated time-to-market and optimized implementations that ensure the highest performance and efficiency, ARM POP IP is available for Cortex-A75.

Leading software ecosystem, from edge to cloud

Software is central to future highly efficient and secure distributed intelligence. The ARM ecosystem is uniquely positioned to deliver the breadth of disruptive software innovation required to kickstart the AI revolution. To further support our latest CPU and GPU IP, we are also releasing a complete software development environment. Our ecosystem can now develop software optimized for DynamIQ ahead of hardware availability through a combination of ARM virtual prototypes and DS-5 Development Studio.

As ARM prepares to work with its partners to ship 100 billion chips over the next five years, we are more agile than ever in enabling our ecosystem to guide the transformation from a physical computing world into a more natural computing world that’s always-on, intuitive and, of course, intelligent. Today’s launch puts us one step closer to our vision of Total Computing and transforming intelligent solutions everywhere compute happens.
