At COMPUTEX 2025, Arm’s Senior Vice President and General Manager of the Client Line of Business, Chris Bergey, delivered a compelling keynote titled “From Cloud to Edge: Advancing AI on Arm, Together.” The session underscored Arm’s strategic vision to enable artificial intelligence (AI) across the entire computing spectrum, from hyperscale cloud environments to energy-efficient edge devices.

AI’s Transformative Impact on Technology

Bergey emphasized that AI is no longer a nascent concept but a transformative force reshaping industries. He noted that more than 150 foundation models have emerged over the past 18 months, many of them reporting over 90% accuracy, and that this has accelerated AI adoption across sectors. This rapid evolution requires rethinking infrastructure to support increasingly complex and diverse workloads.

Edge Computing: The New Frontier for AI

The keynote highlighted the shift of AI workloads from centralized cloud systems to decentralized edge devices. Arm’s architecture facilitates this transition by providing the necessary performance and power efficiency for on-device AI processing. Applications such as smart eyewear, AI-powered personal computers, and autonomous vehicles exemplify the potential of edge computing to deliver low-latency, high-efficiency AI experiences.

Arm’s Ascendancy in Cloud Infrastructure

Arm’s influence extends into the cloud, where its architecture underpins a significant and growing share of data center deployments. Notably, over 50% of the new CPU capacity Amazon Web Services (AWS) has deployed in the past two years is based on its own Arm-based Graviton processors. Other major cloud providers have also adopted Arm-based solutions, including Microsoft (Cobalt), Google (Axion), Oracle (in partnership with Ampere), and Alibaba (Yitian). Arm cites power-efficiency improvements of up to 40% for these platforms, which helps address the escalating energy demands of AI-centric data centers.

Architectural Innovations for AI Workloads

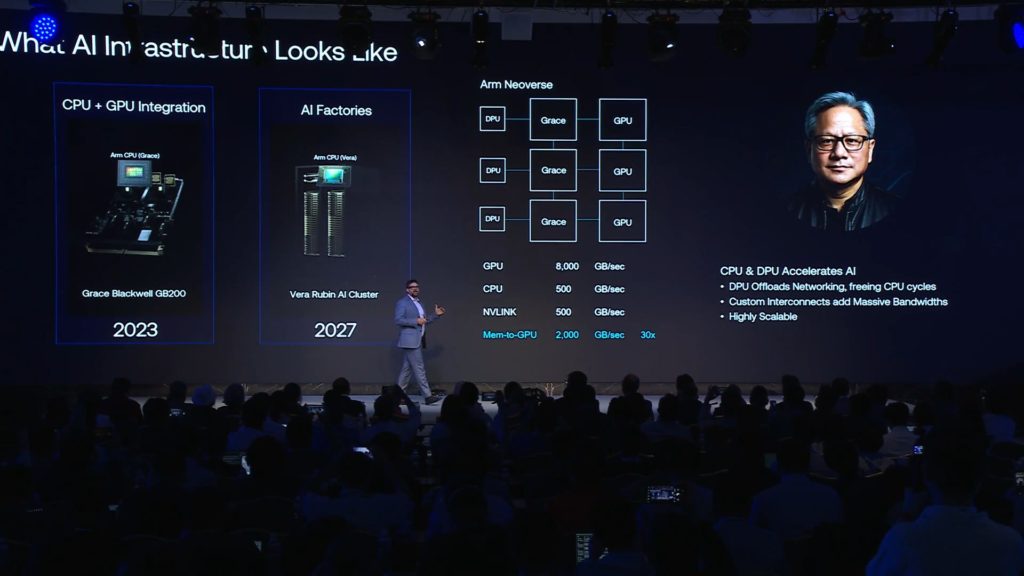

Traditional server architectures are increasingly inadequate for AI workloads, which require high throughput and efficient data movement. Arm addresses these challenges through co-designed infrastructure that tightly integrates CPUs, GPUs, and DPUs. Collaborations with industry leaders such as NVIDIA have produced platforms including the Grace Hopper and Grace Blackwell superchips, as well as the upcoming Vera Rubin platform, all of which use Arm-based CPUs to optimize performance and efficiency.

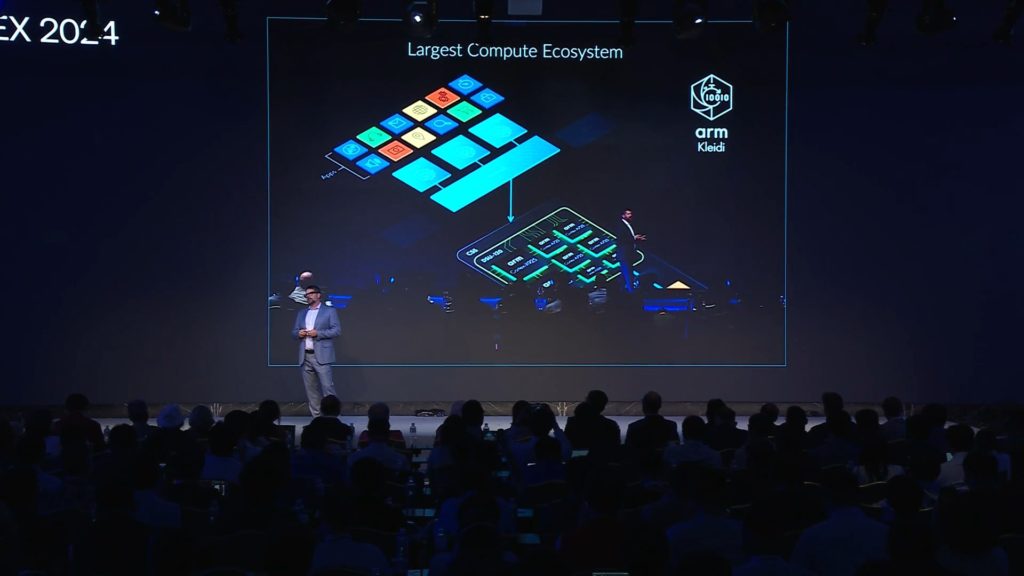

Kleidi: Enhancing AI Software Performance

A pivotal component of Arm’s AI strategy is its investment in software optimization. The Kleidi suite of libraries, introduced in 2024, improves AI workload performance on Arm-based CPUs by integrating directly with leading AI frameworks. As of 2025, Kleidi is integrated with:

- ONNX Runtime (Microsoft)
- LiteRT, formerly TensorFlow Lite (Google)
- ExecuTorch (Meta)
- Hunyuan (Tencent)

These integrations enable developers to efficiently deploy AI applications across a broad range of devices, from cloud servers to edge hardware.

Conclusion

Arm’s presentation at COMPUTEX 2025 outlined a comprehensive approach to AI deployment built on scalable, efficient, and collaborative solutions. By advancing both hardware and software and by working with a global ecosystem of partners, Arm is positioning itself to play a central role in the ongoing evolution of AI technologies.