NVIDIA has unveiled Spectrum-XGS Ethernet, a networking technology designed to link distributed data centers into unified, giga-scale AI super-factories.

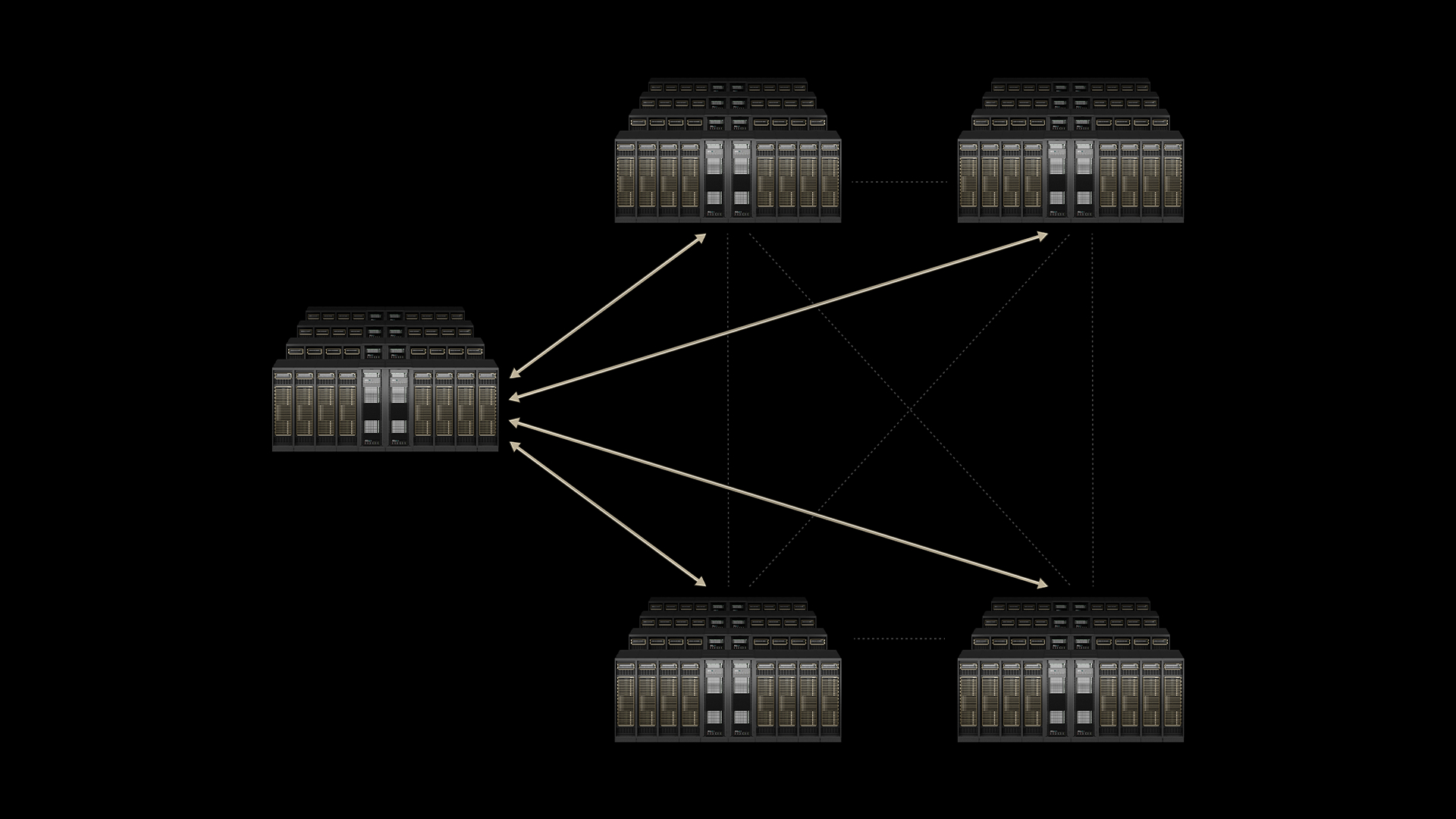

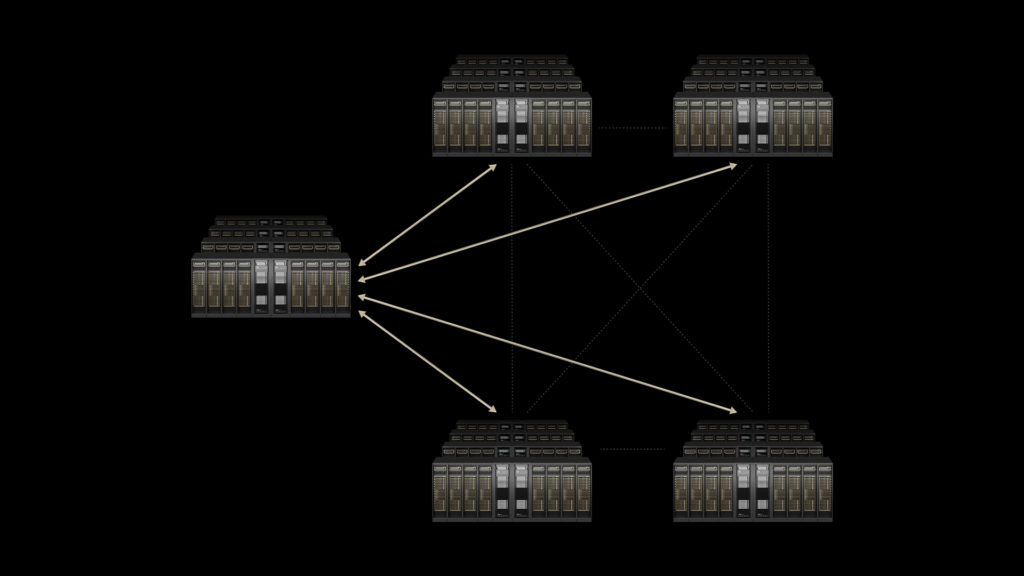

As AI demand continues to surge, single data centers are reaching their limits in power and capacity, while traditional off-the-shelf Ethernet struggles with latency, jitter, and inconsistent performance. To address this, Spectrum-XGS introduces what NVIDIA calls "scale-across" infrastructure, extending the company's Spectrum-X Ethernet platform to interconnect multiple facilities across cities, nations, and continents.

The system integrates advanced congestion control, precision latency management, and adaptive algorithms that nearly double the performance of the NVIDIA Collective Communications Library (NCCL). As a result, multi-GPU and multi-node communication remains predictable even across long distances.

Early adopters include CoreWeave, which plans to connect its data centers with Spectrum-XGS. “With NVIDIA Spectrum-XGS, we can connect our data centers into a single, unified supercomputer, giving our customers access to giga-scale AI,” said Peter Salanki, CoreWeave’s cofounder and CTO.

"The AI industrial revolution is here, and giant-scale AI factories are the essential infrastructure," said Jensen Huang, founder and CEO of NVIDIA. He noted that Spectrum-XGS adds scale-across capabilities to the existing scale-up and scale-out approaches, allowing geographically distant data centers to operate as one.

The launch builds on NVIDIA's momentum in networking, following the Spectrum-X and Quantum-X silicon photonics switches, which aim to connect millions of GPUs while cutting energy use and operational costs. More broadly, the move underscores how hyperscale operators are preparing for a future in which AI infrastructure must expand beyond the confines of any single facility.

Availability

Spectrum-XGS Ethernet is available now as part of the NVIDIA Spectrum-X Ethernet platform.