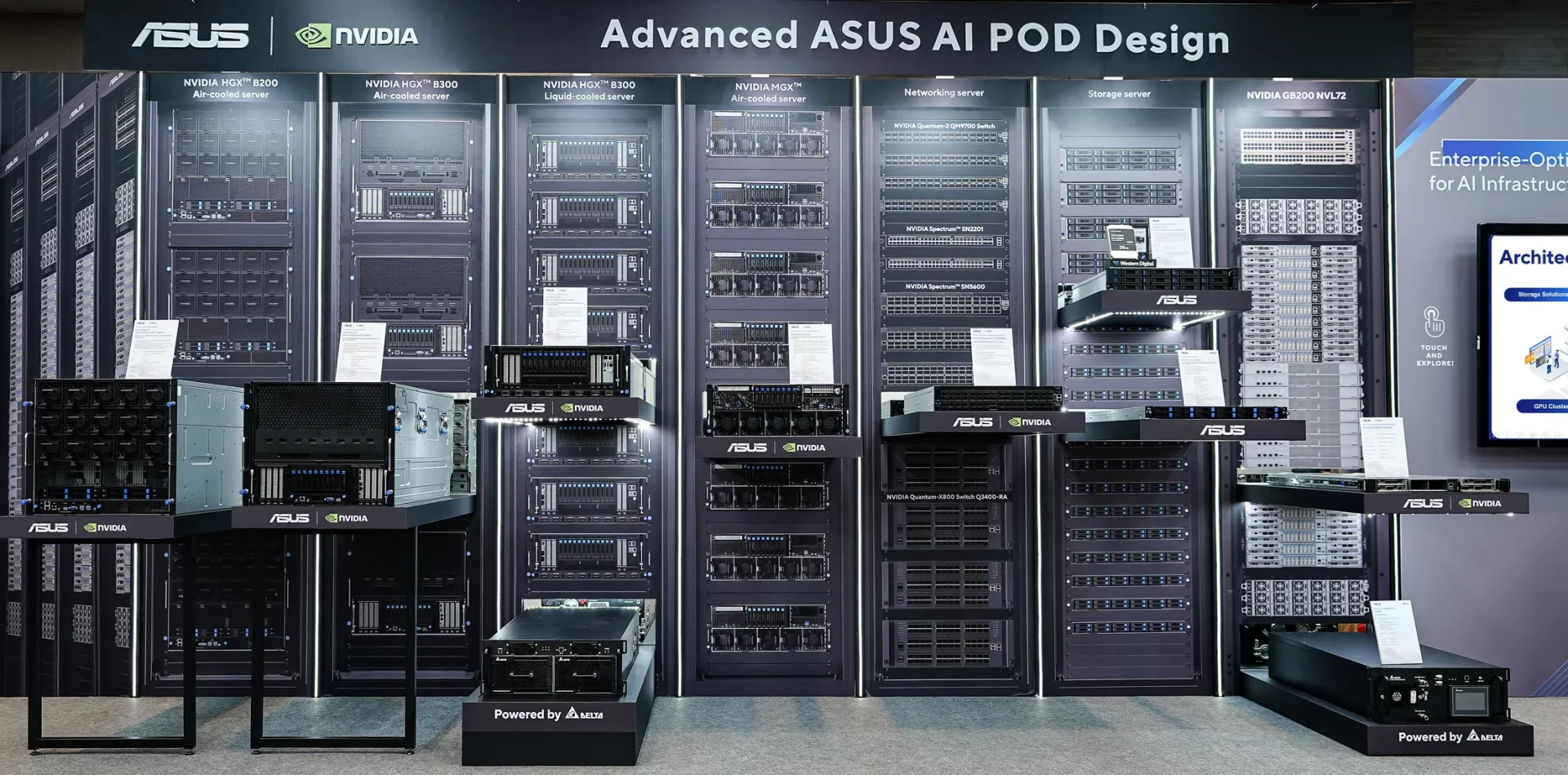

At COMPUTEX 2025, ASUS introduced a new wave of enterprise AI infrastructure built around the NVIDIA Enterprise AI Factory validated design, combining next-gen ASUS AI POD systems with optimized reference architectures. These solutions are available as NVIDIA-Certified Systems across Grace Blackwell, HGX, and MGX platforms, offering both air- and liquid-cooled configurations.

The ASUS AI POD is purpose-built to accelerate agentic AI adoption—AI that can learn and act autonomously—with support for large-scale HPC, physical AI, and enterprise LLM workloads. Highlighted features include an ultra-dense liquid-cooled cluster supporting 576 GPUs across eight racks, and a single-rack 72-GPU air-cooled option, both designed for maximum scalability and thermal efficiency.

ASUS’s AI-ready racks also comply with NVIDIA’s MGX framework, integrating dual Intel Xeon 6 CPUs and RTX PRO 6000 Blackwell GPUs, alongside NVIDIA ConnectX-8 SuperNICs capable of up to 800Gb/s networking. These are paired with the NVIDIA AI Enterprise software suite to enable high-throughput, full-stack performance across AI pipelines.

The company’s HGX-based reference architecture, featuring the ASUS XA NB3I-E12 and ESC NB8-E11, further enhances deployment flexibility. It offers liquid- or air-cooled solutions with high-density GPU support optimized for fine-tuning, inference, and training, while keeping TCO and deployment time in check.

Aside from high-speed networking with NVIDIA Quantum InfiniBand and Spectrum-X Ethernet, the solution also comes with ASUS Control Center (Data Center Edition) and ASUS Infrastructure Deployment Center (AIDC), along with advanced workload scheduling and fabric management. Its L11/L12-validated designs aim to streamline and standardize enterprise-level AI rollouts.

With a full-stack approach that combines hardware, software, and validated design architectures, ASUS is reinforcing its role as a key enabler of next-generation AI infrastructure, delivering solutions that scale with the evolving demands of AI factories worldwide.