Four years ago, NVIDIA released the Jetson Nano System on Module and its developer kit, showing that such a small computing unit could still run AI inference efficiently. It brought edge AI computing, once considered difficult without proper hardware acceleration, to center stage. The NVIDIA Jetson Nano’s AI compute capabilities spawned a substantial number of new edge AI projects across industries, and it became the platform of choice for many educators teaching AI technologies. Furthermore, thanks to the extensive support and resources on the NVIDIA website and forums, developing on the NVIDIA Jetson Nano was also straightforward.

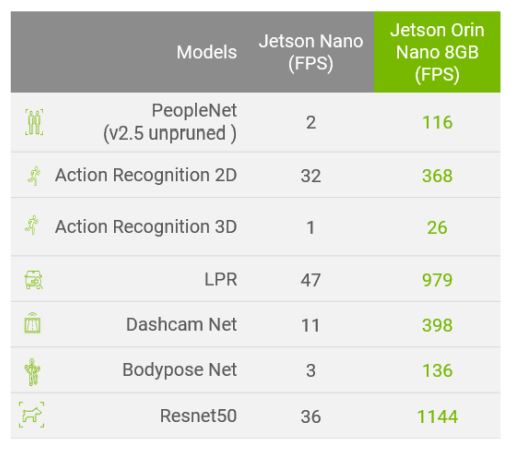

However, developers wanted more. With the ever-growing possibilities of AI applications, many wished for an improved Jetson Nano with greater AI compute capability to realize their projects. That is why NVIDIA recently released the NVIDIA Jetson Orin Nano Developer Kit, which promises 80x the AI performance of the original Jetson Nano, all in the same form factor.

The NVIDIA Jetson Orin Nano

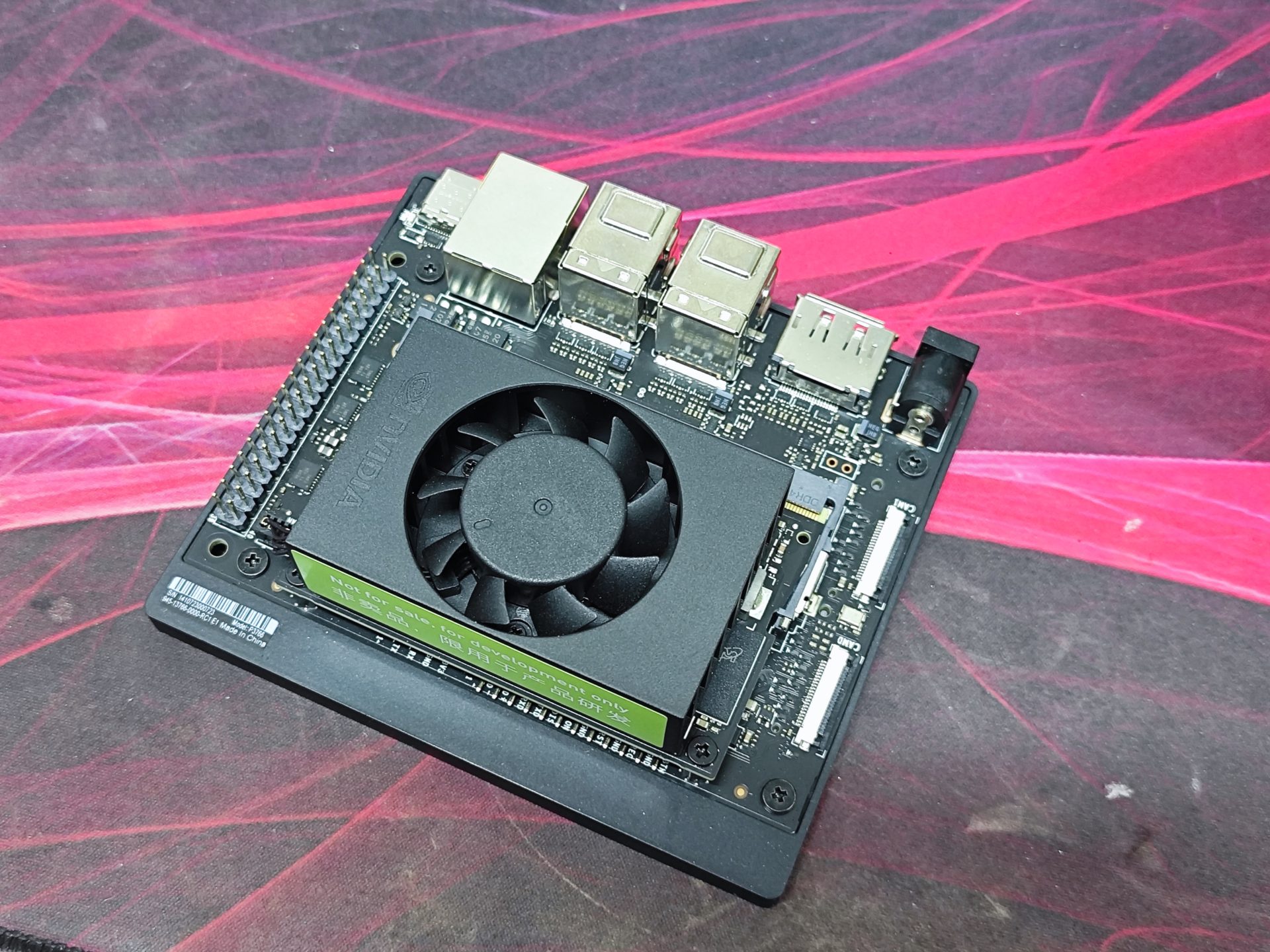

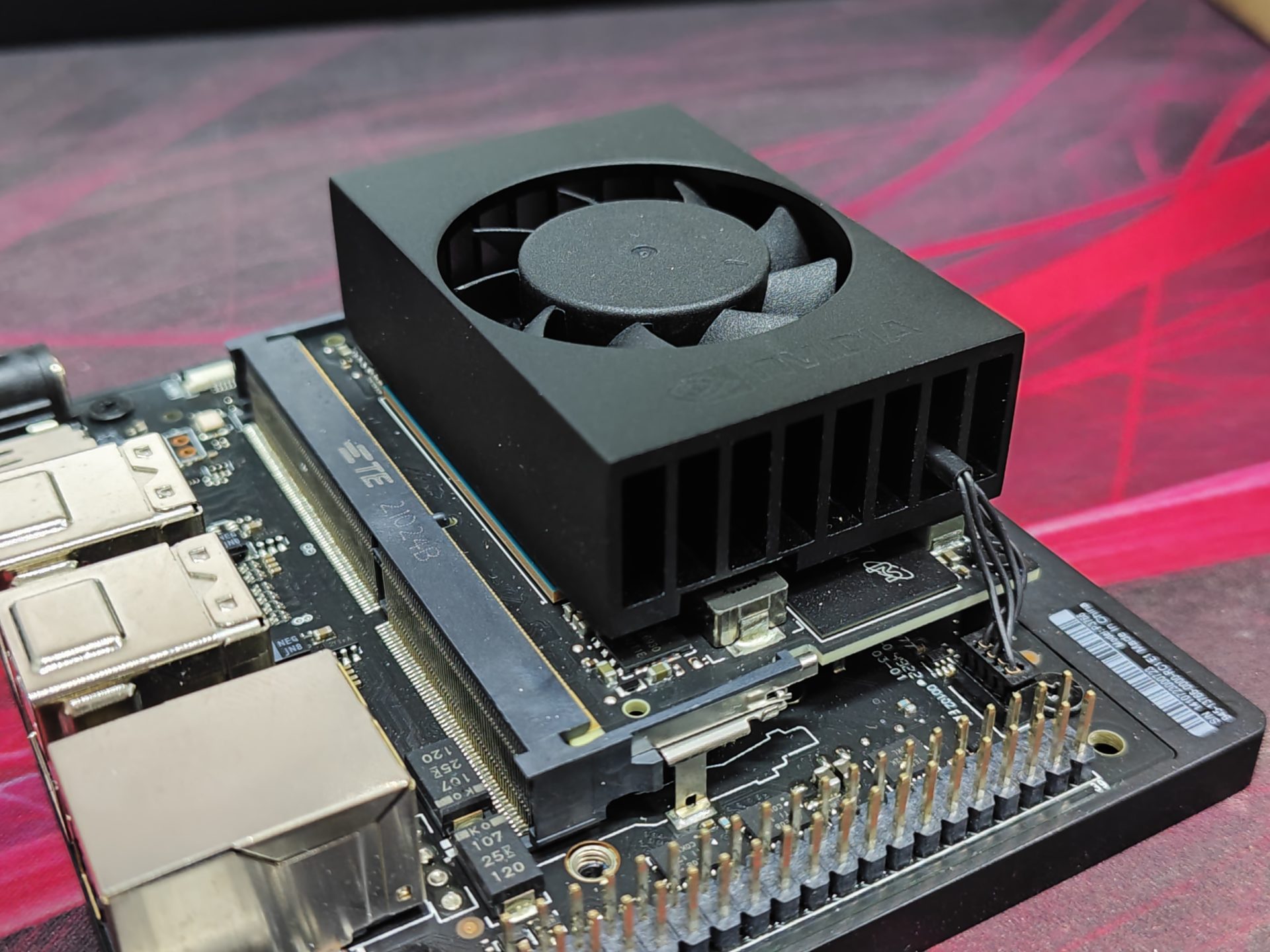

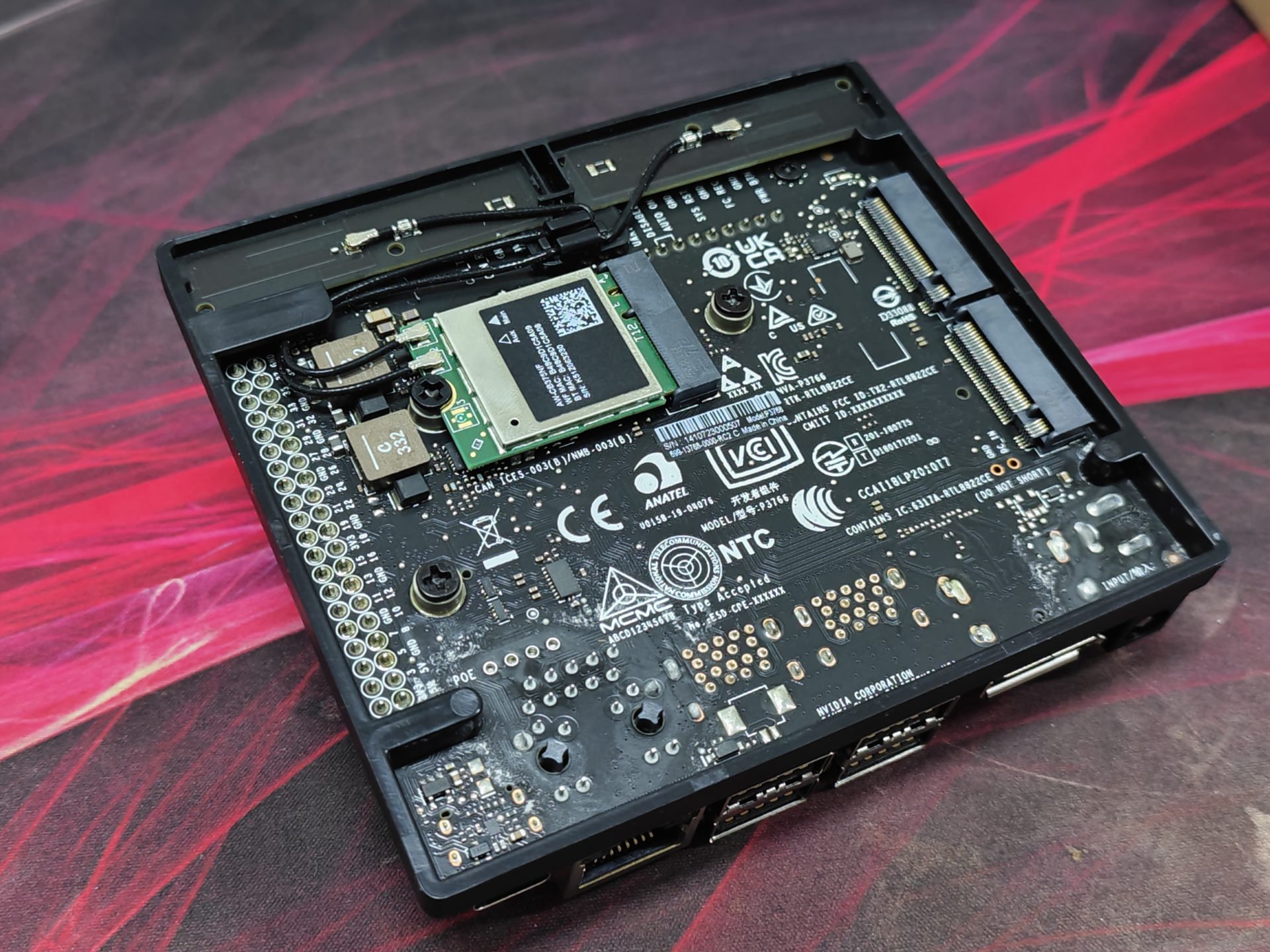

The NVIDIA Jetson Orin Nano’s key difference lies in its new GPU. It is now equipped with an NVIDIA Ampere architecture GPU with 1024 NVIDIA CUDA cores and 32 Tensor cores, a vast improvement over the Maxwell-based GPU in the Jetson Nano. Commercially, the Jetson Orin Nano System on Module will be mounted on custom carrier boards for various applications; to simplify the development process, NVIDIA has introduced the developer kit with a full range of I/O connectors.

| Module | Orin Nano 8GGB Module |

| GPU | NVIDIA Ampere architecture with 1024 NVIDIA® CUDA® cores and 32 Tensor cores |

| CPU | 6-core Arm Cortex-A78AE v8.2 64-bit CPU 1.5MB L2 + 4MB L3 |

| Memory | 8GB 128-bit LPDDR5 68 GB/s |

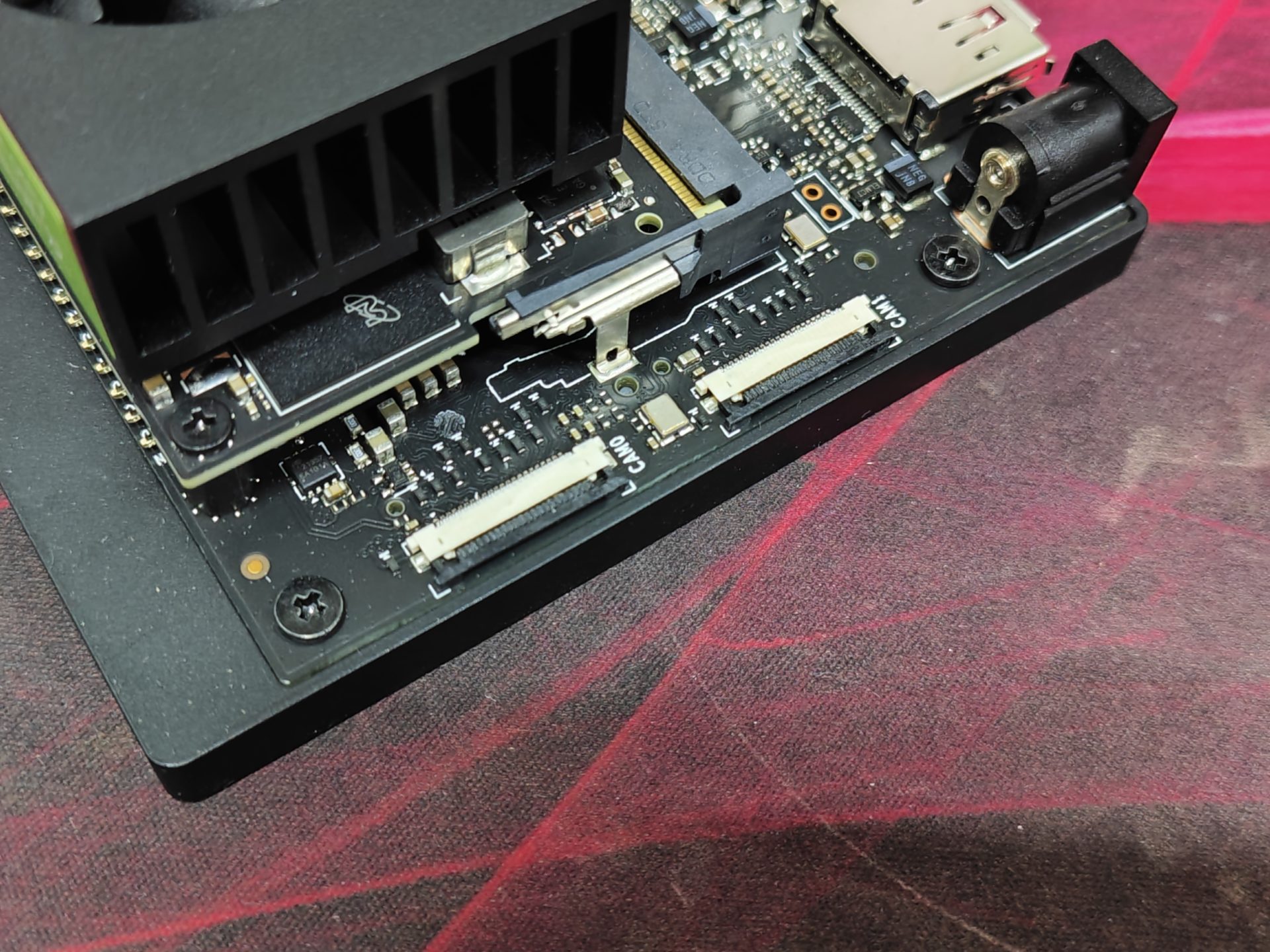

| Storage | external via microSD slot external NVMe via M.2 Key M |

| Power | 7W to 15W |
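As a sanity check on the memory row, theoretical peak DRAM bandwidth is the bus width in bytes multiplied by the transfer rate. The minimal Python sketch below works backwards from the table’s 128-bit bus and 68 GB/s to the transfer rate those two numbers imply, then forward from an assumed LPDDR5 data rate of 4266 MT/s. That data rate is our assumption and is not listed in the table, and both results are theoretical peaks rather than measured throughput.

```python
def peak_bandwidth_gbps(bus_width_bits: int, transfer_rate_mts: float) -> float:
    """Theoretical peak bandwidth in GB/s (decimal units, 1 GB = 1e9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * transfer_rate_mts * 1e6 / 1e9


def implied_transfer_rate_mts(bus_width_bits: int, bandwidth_gbps: float) -> float:
    """Transfer rate in MT/s needed to reach a given peak bandwidth."""
    bytes_per_transfer = bus_width_bits / 8
    return bandwidth_gbps * 1e9 / bytes_per_transfer / 1e6


# Figures from the spec table: 128-bit bus, 68 GB/s.
print(f"{implied_transfer_rate_mts(128, 68):.0f} MT/s")  # 4250 MT/s

# Assumed LPDDR5 data rate of 4266 MT/s (not stated in the table).
print(f"{peak_bandwidth_gbps(128, 4266):.2f} GB/s")  # 68.26 GB/s
```

The implied rate of about 4.25 GT/s is consistent with the rounded 68 GB/s figure in the table.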

It’s impressive that the NVIDIA Jetson Orin Nano draws at most 15W given these specifications. Even setting its AI compute capabilities aside, the Orin Nano would make a rather decent Arm-based PC on its own.

While the previous-generation Jetson Nano could handle simple AI and compute tasks, the Jetson Orin Nano changes the picture. It is so well equipped that I believe it can efficiently handle a wide range of AI applications at the edge. For many developers, the Jetson Orin Nano answers their wish for a high-performance edge computer.

Carrier Board I/O

| Interface | Details |
|---|---|
| Camera | 2x MIPI CSI-2 22-pin camera connectors |
| M.2 Key M | x4 PCIe Gen 3 |
| M.2 Key M | x2 PCIe Gen 3 |
| M.2 Key E | PCIe (x1), USB 2.0, UART, I2S, and I2C |
| USB | Type A: 4x USB 3.2 Gen2; Type C: 1x for debug and device mode |
| Networking | 1x GbE connector |
| Display | DisplayPort 1.2 (+MST) |
| microSD slot | UHS-I cards up to SDR104 mode |
| Others | 40-pin expansion header (UART, SPI, I2S, I2C, GPIO); 12-pin button header; 4-pin fan header; DC power jack |
| Dimensions | 100 mm x 79 mm x 21 mm (height includes feet, carrier board, module, and thermal solution) |
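The two M.2 Key M rows translate into rough ceilings for NVMe storage bandwidth. PCIe Gen 3 signals at 8 GT/s per lane with 128b/130b line encoding, so a quick estimate is lanes × 8 GT/s × 128/130 ÷ 8 bits per byte. The minimal sketch below computes that one-direction figure for both Key M slots. It ignores packet and protocol overhead, so real drive throughput will be lower, and it leaves out the Key E slot because the table does not state that slot’s PCIe generation.

```python
# PCIe 3.0 signalling: 8 GT/s per lane with 128b/130b line encoding.
GEN3_GT_PER_S = 8.0
GEN3_ENCODING_EFFICIENCY = 128 / 130


def pcie_gen3_bandwidth_gbps(lanes: int) -> float:
    """Approximate one-direction PCIe 3.0 link bandwidth in GB/s.

    Accounts for line encoding only; packet and protocol overhead
    are ignored, so this is an upper bound.
    """
    bits_per_second = GEN3_GT_PER_S * 1e9 * GEN3_ENCODING_EFFICIENCY * lanes
    return bits_per_second / 8 / 1e9


for label, lanes in [("M.2 Key M (x4)", 4), ("M.2 Key M (x2)", 2)]:
    print(f"{label}: ~{pcie_gen3_bandwidth_gbps(lanes):.2f} GB/s")
```

This prints about 3.94 GB/s for the x4 slot and about 1.97 GB/s for the x2 slot, which is why the x4 slot is the natural home for a fast NVMe boot drive.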

What’s also interesting is that the NVIDIA Jetson Orin Nano Developer Kit still supports a decent range of external peripherals for further expanding the module’s capabilities. Developers and engineers can now build many things that were not possible with the Jetson Nano.

Developing on the NVIDIA AI Stack

Many developers rely heavily on NVIDIA technologies for AI development. There is a reason for that.

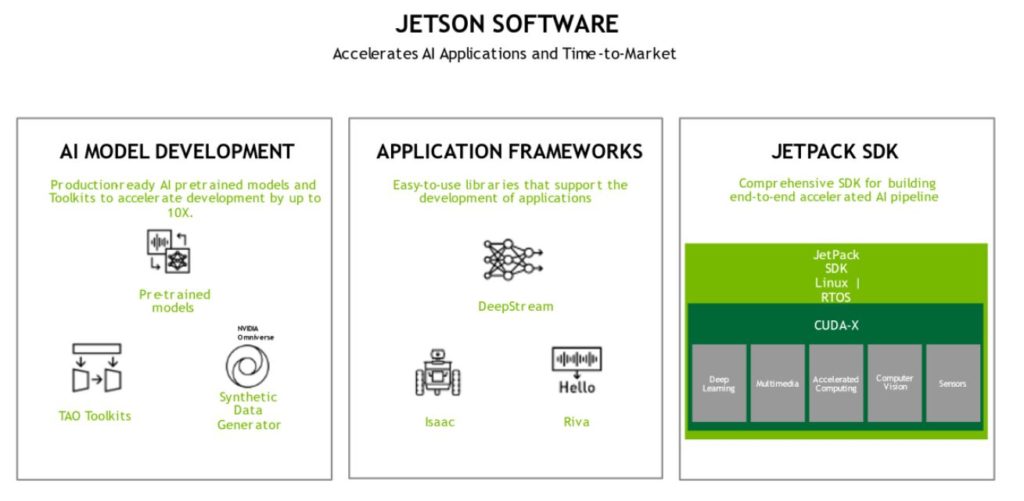

NVIDIA has designed its AI software stack to be used across its entire product range. The same stack runs on NVIDIA GPU-based servers, AI workstations, GeForce gaming computers, and the whole Jetson family of products.

I believe that NVIDIA is the only company that provides an end-to-end AI software development solution to developers, and no other company comes close. Furthermore, because a huge developer base builds on NVIDIA technologies for AI development, plenty of tools, resources, and support are available, which is fundamental for any developer working on a project.

The NVIDIA AI software stack accelerates the AI application development journey. For model development, many pretrained models can be used off the shelf. NVIDIA Omniverse Replicator also helps generate realistic simulated training data, making model creation easier. Other tools, such as the TAO Toolkit, accelerate training through transfer learning, simplify model training, and optimize AI models for specific use cases.

NVIDIA also provides many domain-specific software development kits (SDKs), such as NVIDIA DeepStream, Riva, and Isaac, to aid the development of workflows and applications. At the edge, the JetPack SDK supports accelerated computing and processing.

Essentially, whatever you need to develop your AI project, NVIDIA has you covered. It’s no wonder that AI developers prefer NVIDIA’s solutions.

Testing the NVIDIA Jetson Orin Nano 8GB

Without much effort, we installed the OS and got the NVIDIA Jetson Orin Nano to boot. All we needed to do was follow the setup guide on the NVIDIA website: https://developer.nvidia.com/embedded/learn/get-started-jetson-orin-nano-devkit

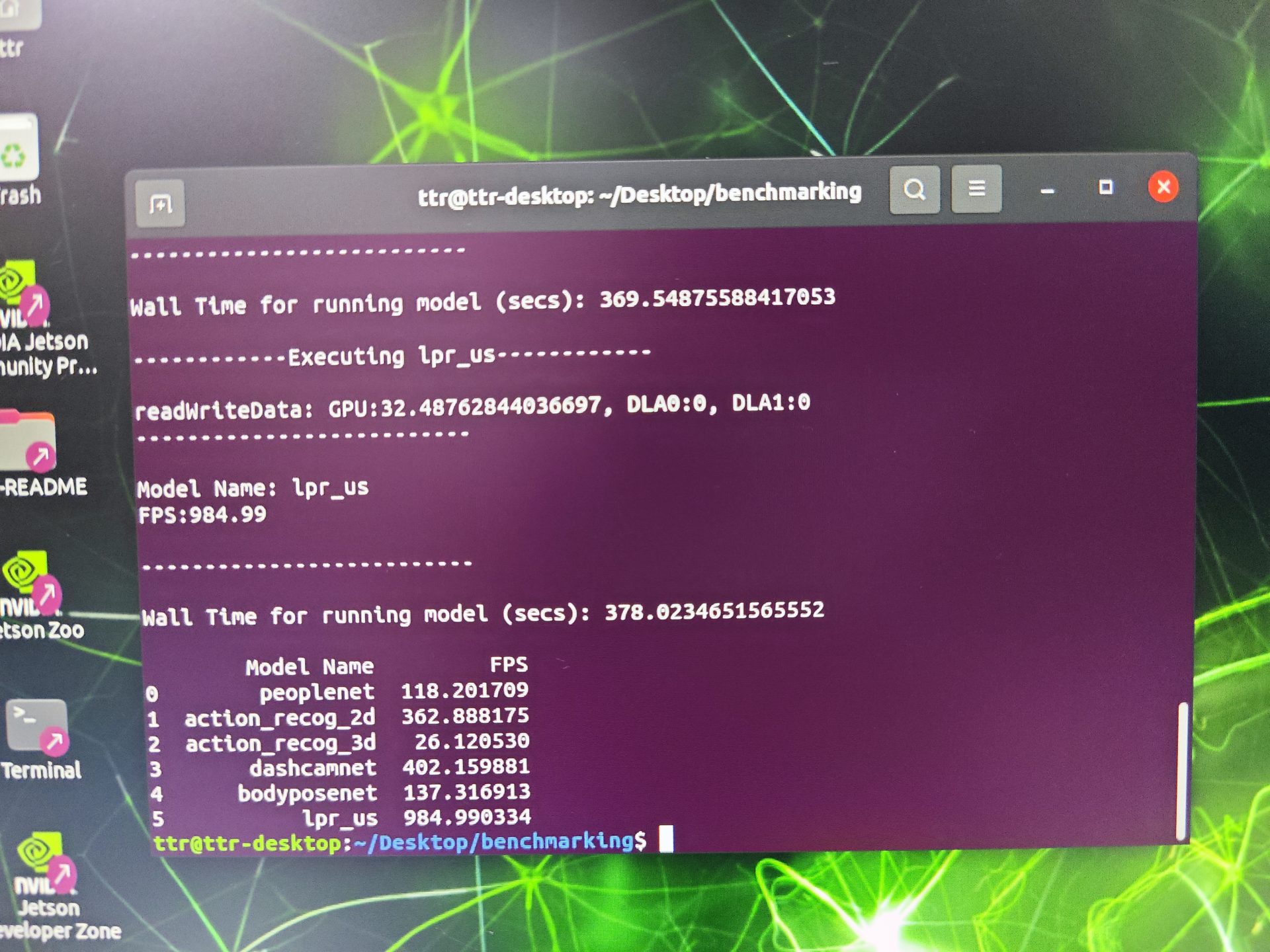

NVIDIA claims that the Jetson Orin Nano runs considerably faster than the Jetson Nano. Naturally, we had to put those claims to the test.

True to NVIDIA’s word, our results were similar to the official results when placed side by side. With its updated specifications, the NVIDIA Jetson Orin Nano is indeed a very capable SoM for AI applications.

Transformer Model for People Detection

We also ran a demo of the PeopleNet D-DETR transformer model, which detects people. Thanks to the cloud-native installation, we had the whole setup up and running quickly.

The PeopleNet D-DETR transformer model is currently an FP16 model and runs at 8 FPS on the Jetson Orin Nano, which is sufficient for many applications that do not require real-time performance. The same model runs at around 30 FPS on the Jetson AGX Orin. As research on vision transformer models continues, these models are expected to be further optimized for performance at the edge.
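To put these frame rates in context, throughput in FPS converts directly into an average time budget per frame (1000 / FPS milliseconds). The minimal sketch below uses the 8 FPS and roughly 30 FPS figures quoted above. The 30 FPS camera feed and the every-fifth-frame sampling scheme are hypothetical examples, and the per-frame time is an average implied by throughput, not a measured end-to-end latency.

```python
def frame_time_ms(fps: float) -> float:
    """Average time budget per frame in milliseconds, implied by throughput."""
    return 1000.0 / fps


def meets_target(measured_fps: float, target_fps: float) -> bool:
    """True if the measured throughput keeps up with the required frame rate."""
    return measured_fps >= target_fps


orin_nano_fps = 8.0   # PeopleNet D-DETR, FP16, Jetson Orin Nano (figure from the text)
agx_orin_fps = 30.0   # approximate figure on Jetson AGX Orin (from the text)

print(f"Orin Nano: {frame_time_ms(orin_nano_fps):.1f} ms/frame")  # 125.0
print(f"AGX Orin:  {frame_time_ms(agx_orin_fps):.1f} ms/frame")   # 33.3
print(f"AGX Orin speedup: {agx_orin_fps / orin_nano_fps:.2f}x")   # 3.75x

# Hypothetical scenarios: process every frame of a 30 FPS camera feed,
# or only every 5th frame (6 FPS required).
print(meets_target(orin_nano_fps, 30.0))      # False
print(meets_target(orin_nano_fps, 30.0 / 5))  # True
```

Sampling frames instead of processing every one is a common way to fit a slower model into a live feed when the application tolerates a lower update rate.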

Conclusion

Even with its improved performance and expanded I/O capabilities, the NVIDIA Jetson Orin Nano retains the same form factor as its predecessor. It’s remarkable how far AI compute has come over the years.

The NVIDIA Jetson Orin Nano has brought about many more opportunities in edge AI computing. It sets a new standard for AI applications at the edge, bringing previously impossible AI features to reality.

With a comprehensive AI software stack behind it, developers can’t go wrong building on the NVIDIA Jetson Orin Nano.

However, we have to say that at a price of USD 499*, the NVIDIA Jetson Orin Nano Developer Kit could be considered costly, which may reduce the cost effectiveness of solutions deployed to market. This pricing could also put the developer kit out of reach for tinkerers working on personal projects. Thankfully, the original Jetson Nano Developer Kit is not going anywhere and will remain available for purchase from USD 149.

*Note: Qualified students and educators can get the Jetson Orin Nano Developer Kit for $399 (USD). Visit the Jetson for Education page for details (login required).