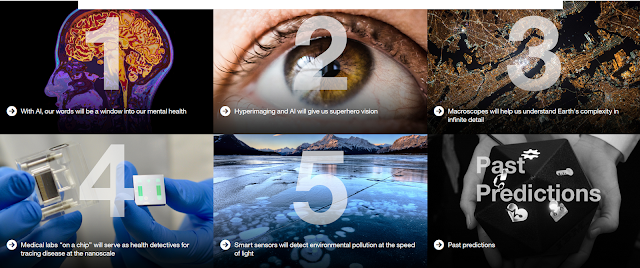

IBM Reveals Five Innovations that will Help Change our Lives within Five Years

Yorktown Heights, N.Y. – 5 January 2017 – IBM (NYSE: IBM) unveiled today the annual “IBM 5 in 5” (#ibm5in5), a list of ground-breaking scientific innovations with the potential to change the way people work, live and interact during the next five years.

· With AI, our words will open a window into our mental health
· Hyperimaging and AI will give us superhero vision
· Macroscopes will help us understand Earth’s complexity in infinite detail
· Medical labs “on a chip” will serve as health detectives for tracing disease at the nanoscale
· Smart sensors will detect environmental pollution at the speed of light

In 1609, Galileo turned his newly built telescope to the sky and saw our cosmos in an entirely new way. His observations supported the theory that Earth and other planets in our solar system revolve around the Sun, which until then had been impossible to observe. IBM Research continues this work through the pursuit of new scientific instruments, whether physical devices or advanced software tools, designed to make what’s invisible in our world visible, from the macroscopic level down to the nanoscale.

“The scientific community has a wonderful tradition of creating instruments to help us see the world in entirely new ways. For example, the microscope helped us see objects too small for the naked eye and the thermometer helped us understand the temperature of the Earth and human body,” said Dario Gil, vice president of science & solutions at IBM Research. “With advances in artificial intelligence and nanotechnology, we aim to invent a new generation of scientific instruments that will make the complex invisible systems in our world today visible over the next five years.”

Innovation in this area could enable us, for example, to dramatically improve farming, enhance energy efficiency, spot harmful pollution before it’s too late and prevent premature physical and mental health decline. IBM’s global team of scientists and researchers is steadily bringing these inventions from the realm of our labs into the real world.

The IBM 5 in 5 is based on market and societal trends as well as emerging technologies from IBM’s Research labs around the world that can make these transformations possible. Here are the five scientific instruments that will make the invisible visible in the next five years:

With AI, our words will open a window into our mental health

Brain disorders, including developmental, psychiatric and neurodegenerative diseases, represent an enormous disease burden in terms of both human suffering and economic cost.[1]

For example, today one in five adults in the U.S. experiences a mental health condition such as depression, bipolar disorder or schizophrenia, and roughly half of individuals with severe psychiatric disorders receive no treatment. The global cost of mental health conditions is projected to surge to US$6 trillion by 2030.

If the brain is a black box that we don’t fully understand, then speech is a key to unlocking it. In five years, what we say and write will be used as indicators of our mental health and physical wellbeing. Patterns in our speech and writing, analyzed by new cognitive systems, will provide tell-tale signs of early-stage developmental disorders, mental illness and degenerative neurological diseases, helping doctors and patients better predict, monitor and track these conditions.

At IBM, scientists are using transcripts and audio inputs from psychiatric interviews, coupled with machine learning techniques, to find patterns in speech that help clinicians accurately predict and monitor psychosis, schizophrenia, mania and depression. Today, it takes only about 300 words to help clinicians predict the probability of psychosis in an individual.[2]

[1] https://mcgovern.mit.edu/brain-disorders/by-the-numbers
[2] “Speech Graphs Provide a Quantitative Measure of Thought Disorder in Psychosis,” PLoS One, 2012
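To make “patterns in speech” a little more concrete, here is a minimal sketch in the spirit of the speech graphs named in the PLoS One paper cited in this section: each distinct word becomes a node, and each pair of consecutive words becomes a directed edge. The function names `speech_graph` and `largest_scc`, and the particular measures (node and edge counts, repeated transitions, size of the largest strongly connected component), are illustrative assumptions, not IBM’s pipeline or the paper’s exact feature set. The output is a handful of structural numbers, not a diagnosis.

```python
import re
from collections import Counter, defaultdict
from typing import Dict, Set


def largest_scc(succ: Dict[str, Set[str]], nodes: Set[str]) -> int:
    """Size of the largest strongly connected component (Kosaraju's algorithm)."""
    # Pass 1: record nodes in order of DFS completion on the original graph.
    visited, order = set(), []
    for start in nodes:
        if start in visited:
            continue
        visited.add(start)
        stack = [(start, iter(succ.get(start, ())))]
        while stack:
            node, children = stack[-1]
            for nxt in children:
                if nxt not in visited:
                    visited.add(nxt)
                    stack.append((nxt, iter(succ.get(nxt, ()))))
                    break
            else:  # every child of this node has been explored
                stack.pop()
                order.append(node)
    # Pass 2: DFS on the reversed graph, in reverse completion order.
    pred: Dict[str, Set[str]] = defaultdict(set)
    for a, targets in succ.items():
        for b in targets:
            pred[b].add(a)
    assigned, best = set(), 0
    for start in reversed(order):
        if start in assigned:
            continue
        assigned.add(start)
        stack, size = [start], 0
        while stack:
            node = stack.pop()
            size += 1
            for prev in pred[node]:
                if prev not in assigned:
                    assigned.add(prev)
                    stack.append(prev)
        best = max(best, size)
    return best


def speech_graph(text: str) -> Dict[str, int]:
    """Turn a transcript into a word graph and return simple structural measures."""
    words = re.findall(r"[a-z']+", text.lower())
    transitions = Counter(zip(words, words[1:]))  # directed edge -> occurrences
    succ: Dict[str, Set[str]] = defaultdict(set)
    for a, b in transitions:
        succ[a].add(b)
    nodes = set(words)
    return {
        "words": len(words),
        "nodes": len(nodes),
        "edges": len(transitions),  # distinct word-to-word transitions
        "repeated_edges": sum(n - 1 for n in transitions.values()),
        "largest_scc": largest_scc(succ, nodes),
    }


if __name__ == "__main__":
    print(speech_graph("I went to the store and then I went home"))
```

Words that loop back to earlier words end up in a large strongly connected component, which is one simple way to quantify recurrence in a transcript. A real system would feed measures like these, alongside many other features, into a model trained on clinical data.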

In the future, similar techniques could be used to help patients with Parkinson’s, Alzheimer’s, Huntington’s disease, PTSD and even neurodevelopmental conditions such as autism and ADHD. Cognitive computers can analyze a patient’s speech or written words to look for tell-tale indicators found in language, including meaning, syntax and intonation. Combining the results of these measurements with data from wearable devices and imaging systems, collected in a secure network, can paint a more complete picture of the individual, helping health professionals better identify, understand and treat the underlying disease.

What were once invisible signs will become clear signals of patients’ likelihood of entering a certain mental state, or of how well their treatment plan is working, complementing regular clinical visits with daily assessments from the comfort of their homes.

Hyperimaging and AI will give us superhero vision

More than 99.9 percent of the electromagnetic spectrum cannot be observed by the naked eye. Over the last 100 years, scientists have built instruments that can emit and sense energy at different wavelengths. Today, we rely on some of these to take medical images of our bodies, see the cavity inside a tooth, check our bags at the airport or land a plane in fog. However, these instruments are highly specialized and expensive, and each sees only a specific portion of the electromagnetic spectrum.

In five years, new imaging devices using hyperimaging technology and AI will help us see broadly beyond the domain of visible light by combining multiple bands of the electromagnetic spectrum to reveal valuable insights or potential dangers that would otherwise be unknown or hidden from view. Most importantly, these devices will be portable, affordable and accessible, so superhero vision can be part of our everyday experiences.
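Combining bands of the spectrum starts with putting measurements from different sensors on a common footing. The sketch below assumes the band images have already been co-registered to the same pixel grid; it rescales each band to the range 0 to 1 and stacks the bands into one feature vector per pixel, the kind of input a downstream AI model could then reason over. The band names in the example (`visible`, `thermal_ir`, `mmwave`) and the functions `normalize` and `fuse_bands` are hypothetical and not part of IBM’s platform.

```python
from typing import Dict, List

Grid = List[List[float]]


def normalize(band: Grid) -> Grid:
    """Min-max scale one band to [0, 1] so bands with different units are comparable."""
    flat = [v for row in band for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # a constant band maps to all zeros instead of dividing by 0
    return [[(v - lo) / span for v in row] for row in band]


def fuse_bands(bands: Dict[str, Grid]) -> List[List[List[float]]]:
    """Stack co-registered bands into one feature vector per pixel.

    Assumes every band is already aligned to the same pixel grid; raises
    ValueError if the grid shapes differ.
    """
    names = sorted(bands)  # fixed band order inside every feature vector
    scaled = {name: normalize(bands[name]) for name in names}
    rows, cols = len(scaled[names[0]]), len(scaled[names[0]][0])
    for name in names:
        if len(scaled[name]) != rows or any(len(r) != cols for r in scaled[name]):
            raise ValueError(f"band {name!r} is not on the common grid")
    return [[[scaled[n][r][c] for n in names] for c in range(cols)]
            for r in range(rows)]


if __name__ == "__main__":
    pixels = fuse_bands({
        "visible": [[10, 20], [30, 40]],
        "thermal_ir": [[0.5, 0.5], [0.5, 0.5]],
        "mmwave": [[1, 0], [0, 1]],
    })
    print(pixels[1][1])  # feature vector for the bottom-right pixel
```

Per-band scaling is the simplest choice here; a production system would more likely calibrate each sensor physically before fusion.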

A view of the invisible or vaguely visible physical phenomena all around us could help make road and traffic conditions clearer for drivers and self-driving cars. For example, using millimeter wave imaging, a camera and other sensors, hyperimaging technology could help a car see through fog or rain, detect hazardous and hard-to-see road conditions such as black ice, or tell us whether there is an object up ahead, along with its distance and size. Cognitive computing technologies will reason about this data and recognize what might be a tipped-over garbage can versus a deer crossing the road, or a pothole that could result in a flat tire.

Embedded in our phones, these same technologies could take images of our food to show its nutritional value or whether it’s safe to eat. A hyperimage of a pharmaceutical drug or a bank check could tell us what’s fraudulent and what’s not. What was once beyond human perception will come into view.

IBM scientists are already building a compact hyperimaging platform that “sees” across separate portions of the electromagnetic spectrum at once, potentially enabling a host of practical and affordable devices and applications.

Macroscopes will help us understand Earth’s complexity in infinite detail

Today, the physical world only gives us a glimpse into our interconnected and complex ecosystem. We collect exabytes of data, but most of it is unorganized. In fact, an estimated 80 percent of a data scientist’s time is spent scrubbing data instead of analyzing and understanding what that data is trying to tell us.

Thanks to the Internet of Things, new sources of data are pouring in from millions of connected objects, from refrigerators, light bulbs and your heart rate monitor to remote sensors such as drones, cameras, weather stations, satellites and telescope arrays. There are already more than six billion connected devices generating tens of exabytes of data per month, with a growth rate of more than 30 percent per year. After successfully digitizing information, business transactions and social interactions, we are now in the process of digitizing the physical world.

In five years, we will use machine learning algorithms and software to organize information about the physical world and bring the vast and complex data gathered by billions of devices within the range of our vision and understanding. We call this a “macroscope.” Unlike the microscope, which shows us the very small, or the telescope, which shows us what is far away, it is a system of software and algorithms that brings all of Earth’s complex data together to analyze it for meaning.

By aggregating, organizing and analyzing data on climate, soil conditions, water levels and their relationship to irrigation practices, for example, a new generation of farmers will have insights that help them determine the right crop choices, where to plant them and how to produce optimal yields while conserving precious water supplies.
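The first step of a macroscope is organizing: readings from unrelated sources have to line up before they can be analyzed together. A minimal sketch, assuming each source delivers records tagged with a `field` identifier and a `date` (a hypothetical schema, not Gallo’s or IBM’s), merges everything into one record per field and day and reports where a source has no data. The functions `aggregate` and `missing` are illustrative names.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Record = Dict[str, object]
Key = Tuple[str, str]  # (field, date)


def aggregate(sources: Dict[str, Iterable[Record]]) -> Dict[Key, Record]:
    """Merge readings from several sources into one record per (field, date).

    Each input record is assumed to carry 'field' and 'date' keys plus
    source-specific measurements. Measurement names are prefixed with the
    source name so values from different sources never overwrite each other.
    """
    merged: Dict[Key, Record] = defaultdict(dict)
    for source, records in sources.items():
        for rec in records:
            key = (str(rec["field"]), str(rec["date"]))
            for name, value in rec.items():
                if name not in ("field", "date"):
                    merged[key][f"{source}.{name}"] = value
    return dict(merged)


def missing(merged: Dict[Key, Record], source: str) -> List[Key]:
    """List the (field, date) keys that have no measurement from `source`."""
    prefix = source + "."
    return sorted(k for k, rec in merged.items()
                  if not any(name.startswith(prefix) for name in rec))


if __name__ == "__main__":
    table = aggregate({
        "soil": [{"field": "A", "date": "2016-07-01", "moisture": 0.21}],
        "weather": [{"field": "A", "date": "2016-07-01", "rain_mm": 0.0},
                    {"field": "B", "date": "2016-07-01", "rain_mm": 3.2}],
        "irrigation": [{"field": "A", "date": "2016-07-01", "water_mm": 12}],
    })
    print(table)
    print("no soil data for:", missing(table, "soil"))
```

Prefixing each measurement with its source keeps values from different instruments apart, and `missing` surfaces gaps, one of the chores that data scrubbing typically involves.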

In 2012, IBM Research began investigating this concept at Gallo Winery, integrating irrigation, soil and weather data with satellite images and other sensor data to predict the specific irrigation needed to produce an optimal grape yield and quality. In the future, macroscope technologies will help us scale this concept to anywhere in the world.

Beyond our own planet, macroscope technologies could, for example, handle the complicated indexing and correlation of the many layers and large volumes of data collected by telescopes to predict collisions between asteroids and learn more about their composition.

Medical labs “on a chip” will serve as health detectives for tracing disease at the nanoscale

Early detection of disease is crucial. In most cases, the earlier a disease is diagnosed, the more likely it is to be cured or well controlled. However, diseases like cancer can be hard to detect, hiding in our bodies before symptoms appear. Information about the state of our health can be extracted from tiny bioparticles in bodily fluids such as saliva, tears, blood, urine and sweat. Existing scientific techniques face challenges in capturing and analyzing these bioparticles, which are thousands of times smaller than the diameter of a strand of human hair.

In the next five years, new medical labs “on a chip” will serve as nanotechnology health detectives, tracing invisible clues in our bodily fluids and letting us know immediately if we have reason to see a doctor. The goal is to shrink down to a single silicon chip all of the processes necessary to analyze a disease that would normally be carried out in a full-scale biochemistry lab.

The lab-on-a-chip technology could ultimately be packaged in a convenient handheld device that lets people quickly and regularly measure the presence of biomarkers in small amounts of bodily fluids, securely streaming this information to the cloud from the convenience of their homes. There it could be combined with real-time health data from other IoT-enabled devices, like sleep monitors and smart watches, and analyzed by AI systems for insights. Taken together, this data set will give us an in-depth view of our health and alert us to the first signs of trouble, helping to stop disease before it progresses.

At IBM Research, scientists are developing lab-on-a-chip nanotechnology that can separate and isolate bioparticles down to 20 nanometers in diameter, a scale that gives access to DNA, viruses and exosomes. These particles could be analyzed to potentially reveal the presence of disease even before we have symptoms.

Smart sensors will detect environmental pollution at the speed of light

Most pollutants are invisible to the human eye until their effects make them impossible to ignore. Methane, for example, is the primary component of natural gas, commonly considered a clean energy source. But if methane leaks into the air before being used, it can warm the Earth’s atmosphere. Methane is estimated to be the second-largest contributor to global warming after carbon dioxide (CO2).

In the United States, emissions from oil and gas systems are the largest industrial source of methane in the atmosphere. The U.S. Environmental Protection Agency (EPA) estimates that more than nine million metric tons of methane leaked from natural gas systems in 2014. Measured as CO2-equivalent over 100 years, that’s more greenhouse gas than was emitted by all U.S. iron and steel, cement and aluminum manufacturing facilities combined.
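The CO2-equivalent comparison rests on a single multiplication: the mass of methane times its 100-year global warming potential (GWP). The release does not state which GWP the estimate used, so the sketch below assumes 25, the 100-year value for methane in the IPCC’s Fourth Assessment Report; the helper name `co2_equivalent` is hypothetical.

```python
# Assumed 100-year global warming potential of methane (IPCC AR4 value).
# The release does not say which GWP underlies the EPA figure.
GWP100_CH4 = 25


def co2_equivalent(methane_mass: float, gwp: float = GWP100_CH4) -> float:
    """Convert a mass of methane to CO2-equivalent, in the same mass units."""
    return methane_mass * gwp


if __name__ == "__main__":
    leaked = 9.0  # million metric tons of methane, per the EPA estimate
    print(f"{co2_equivalent(leaked):.0f} million metric tons CO2e")
```

Under that assumption, nine million metric tons of methane corresponds to about 225 million metric tons of CO2-equivalent; a different GWP scales the result proportionally.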

In five years, new, affordable sensing technologies deployed near natural gas extraction wells, around storage facilities and along distribution pipelines will enable the industry to pinpoint invisible leaks in real time. Networks of IoT sensors wirelessly connected to the cloud will provide continuous monitoring of the vast natural gas infrastructure, allowing leaks to be found in a matter of minutes instead of weeks, reducing pollution and waste and the likelihood of catastrophic events.
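One simple way a continuously monitored sensor could flag a leak within minutes is to compare each new reading with a rolling baseline of recent readings. The sketch below illustrates that idea under stated assumptions and is not IBM’s monitoring system: it assumes a single sensor reporting methane concentration at a fixed interval, and the function name `detect_spikes`, the window size, the threshold `k` and the floor `min_std` are all hypothetical tuning choices.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Iterable, Iterator, Tuple


def detect_spikes(readings: Iterable[float], window: int = 10, k: float = 4.0,
                  min_std: float = 0.05) -> Iterator[Tuple[int, float]]:
    """Yield (index, value) for readings far above the recent baseline.

    A reading is flagged when it exceeds the trailing-window mean by more than
    k standard deviations. min_std stops a perfectly flat baseline from
    turning tiny fluctuations into alerts. No reading is judged until the
    window has filled.
    """
    history = deque(maxlen=window)
    for i, value in enumerate(readings):
        if len(history) == window:
            baseline = mean(history)
            spread = max(pstdev(history), min_std)
            if value > baseline + k * spread:
                yield i, value
                continue  # keep flagged readings out of the baseline
        history.append(value)


if __name__ == "__main__":
    ppm = [1.9] * 12 + [2.0, 1.9, 6.5, 7.0, 1.9]
    for index, value in detect_spikes(ppm):
        print(f"possible leak at reading {index}: {value} ppm")
```

Because flagged readings never enter the baseline, a sustained leak keeps raising alerts instead of gradually becoming the new normal. A deployed network would combine many such sensors with wind and other data to locate the source.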

Scientists at IBM are tackling this vision, working with natural gas producers such as Southwestern Energy to explore the development of an intelligent methane monitoring system, and participating in the ARPA-E Methane Observation Networks with Innovative Technology to Obtain Reductions (MONITOR) program.

At the heart of IBM’s research is silicon photonics, an evolving technology that transfers data using light, allowing computing literally at the speed of light. These chips could be embedded in a network of sensors on the ground or within infrastructure, or even fly on autonomous drones, generating insights that, when combined with real-time wind data, satellite data and other historical sources, can be used to build complex environmental models that detect the origin and quantity of pollutants as they occur.

For more information about the IBM 5 in 5, visit http://ibm.biz/five-in-five.
