NVIDIA has quietly rolled out a notable memory optimization in its latest DLSS update, trimming VRAM usage by up to 20% in transformer-based upscaling models.

As reported by VideoCardz, the change comes with the release of DLSS SDK version 3.10.3.0, which also marks the official debut of DLSS 4 after a prolonged beta phase.

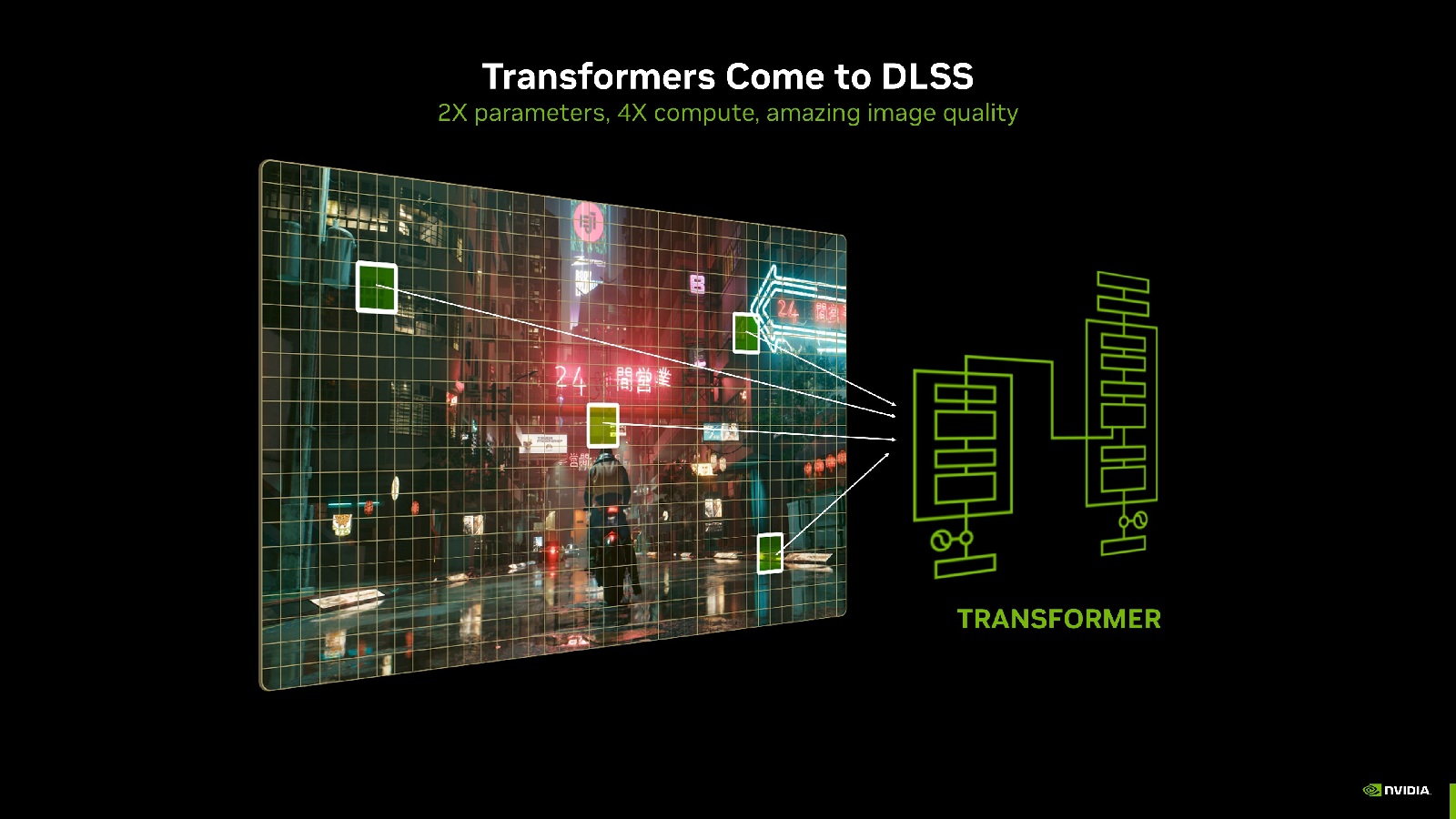

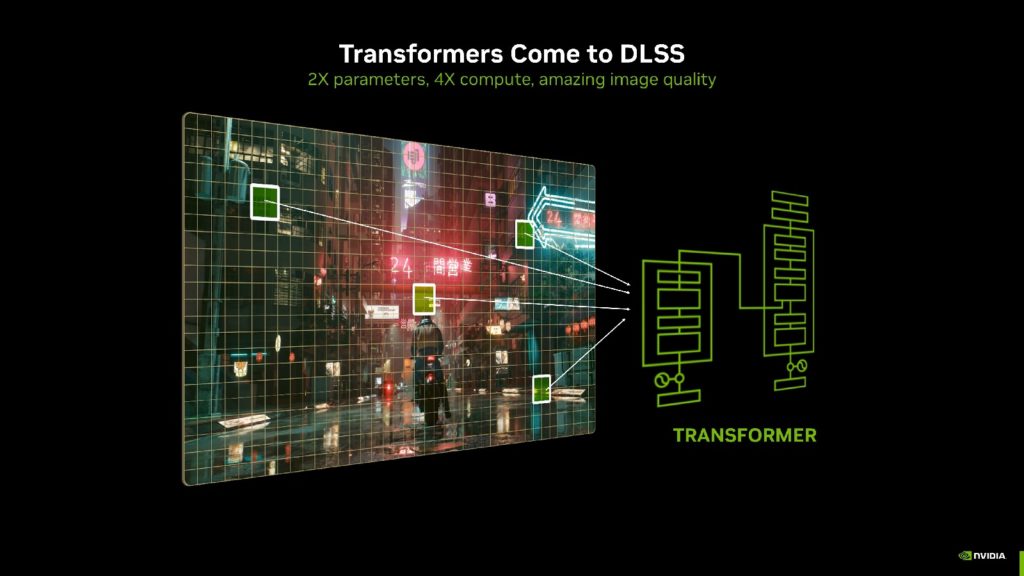

The update targets the relatively high memory footprint of the transformer architecture used in DLSS 3 and 4. Historically, these models consumed nearly twice the VRAM of the older CNN-based implementations, which strained GPUs with tighter memory budgets, especially the much-discussed 8GB cards. The new optimization reportedly brings transformer models closer to their CNN predecessors in memory efficiency, which could provide welcome relief as the patch is deployed through updated drivers and supported games.

Still, the actual benefit depends heavily on resolution. At 4K, the memory savings hover around 80MB, a modest improvement. Because the savings scale roughly linearly with resolution, they shrink further at lower resolutions and become more noticeable only at extreme settings like 8K, where they reach about 300MB. Yet even a 300MB saving might be trivial if you're running a 32GB GPU.
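To make the resolution dependence concrete, here is a back-of-the-envelope sketch. It assumes the savings scale linearly with output pixel count and anchors that model to the reported ~80MB figure at 4K; the linear model, the resolution table, and the helper names are illustrative assumptions, not NVIDIA's published numbers. Under this assumption, 8K (four times the pixels of 4K) lands at roughly 320MB, in line with the ~300MB figure, while 1080p drops to about 20MB.

```python
# Back-of-the-envelope estimate of DLSS transformer VRAM savings by resolution.
# ASSUMPTION: savings scale linearly with output pixel count, anchored to the
# reported ~80 MB saving at 4K. Helper names here are hypothetical.

RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
    "8K": (7680, 4320),
}

SAVING_AT_4K_MB = 80.0  # approximate saving reported at 4K


def estimated_saving_mb(width: int, height: int) -> float:
    """Scale the 4K saving by the ratio of pixel counts (linear assumption)."""
    ref_w, ref_h = RESOLUTIONS["4K"]
    return SAVING_AT_4K_MB * (width * height) / (ref_w * ref_h)


def share_of_vram(saving_mb: float, vram_gb: float) -> float:
    """Fraction of total VRAM the saving represents (1 GB = 1024 MB)."""
    return saving_mb / (vram_gb * 1024)


if __name__ == "__main__":
    for name, (w, h) in RESOLUTIONS.items():
        mb = estimated_saving_mb(w, h)
        print(
            f"{name:>5}: ~{mb:.0f} MB saved, "
            f"{share_of_vram(mb, 8):.1%} of an 8 GB card, "
            f"{share_of_vram(mb, 32):.1%} of a 32 GB card"
        )
```

Even at 8K, the estimated saving amounts to under 1% of a 32GB card's memory, but close to 4% of an 8GB card's, which is why the change matters more for memory-constrained GPUs.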

It's also worth clarifying that this optimization affects only DLSS upscaling. NVIDIA separately reduced VRAM usage for frame generation by 30% with DLSS 4, but that is a different part of the technology stack.

Ultimately, while the VRAM cut might seem incremental, it reflects the evolving nature of NVIDIA's AI-driven graphics tech. The transformer-based DLSS models are still relatively new, and improvements like these point toward leaner, more efficient implementations, possibly with more gains on the horizon.