NVIDIA is heading to this year’s SIGGRAPH graphics conference in Denver, Colorado, on July 29. Team Green and leading industry partners will debut new graphics technology there. In this post, we summarize the highlights of what will be presented.

Expanded Capabilities in Diffusion Models

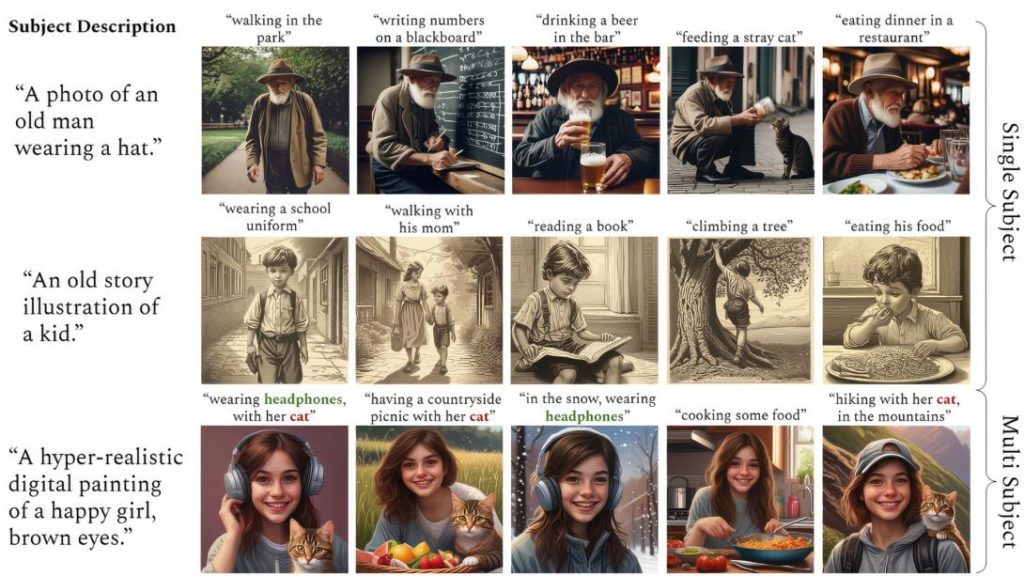

Diffusion models, which generate images from text prompts, have been improving steadily. A new NVIDIA-authored paper, a collaboration between NVIDIA researchers and Tel Aviv University, introduces ConsiStory, which improves the consistency and quality of multiple generated images of the same character, a valuable capability for storyboard development. Its ‘Subject-Driven Shared Attention’ technique cuts the time needed to produce a set of consistent images from 13 minutes to around 30 seconds.
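
The post does not detail how shared attention works. As we understand the general idea, self-attention is extended across a batch so that each image can also attend to the subject regions of the other images, pulling their features toward a common appearance. The NumPy sketch below shows only that simplified idea; the function names and the `subject_masks` input are hypothetical, and ConsiStory itself includes further components not modeled here.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the row max for numerical stability; -inf entries become 0.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def shared_self_attention(q, k, v, subject_masks):
    """Self-attention extended across a batch of images (simplified sketch).

    q, k, v: arrays of shape (B, N, D) holding per-image queries, keys,
        and values for B images with N tokens each.
    subject_masks: bool array of shape (B, N); True marks tokens that
        belong to the shared subject (hypothetical input, e.g. from a
        segmentation step).

    Each image attends to all of its own tokens plus the subject tokens of
    every other image in the batch.
    """
    B, N, D = q.shape
    k_all = k.reshape(B * N, D)          # keys from every image, flattened
    v_all = v.reshape(B * N, D)          # values from every image, flattened
    owner = np.repeat(np.arange(B), N)   # which image each flattened token came from
    is_subject = subject_masks.reshape(B * N)

    out = np.empty_like(q)
    for b in range(B):
        # Allowed keys: this image's own tokens, or subject tokens anywhere.
        allowed = (owner == b) | is_subject
        scores = q[b] @ k_all.T / np.sqrt(D)             # (N, B*N)
        scores = np.where(allowed[None, :], scores, -np.inf)
        out[b] = softmax(scores, axis=-1) @ v_all        # (N, D)
    return out
```

With every mask set to False, this reduces to ordinary per-image self-attention; marking subject tokens is what lets information flow between images.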

Another new AI model applies 2D generative diffusion models to interactive texture painting on 3D meshes, guided by reference images. This turns last year’s static “text to custom texture” model into an interactive one that reflects changes in real time.

Kickstarting Development in Physics-Based Simulation

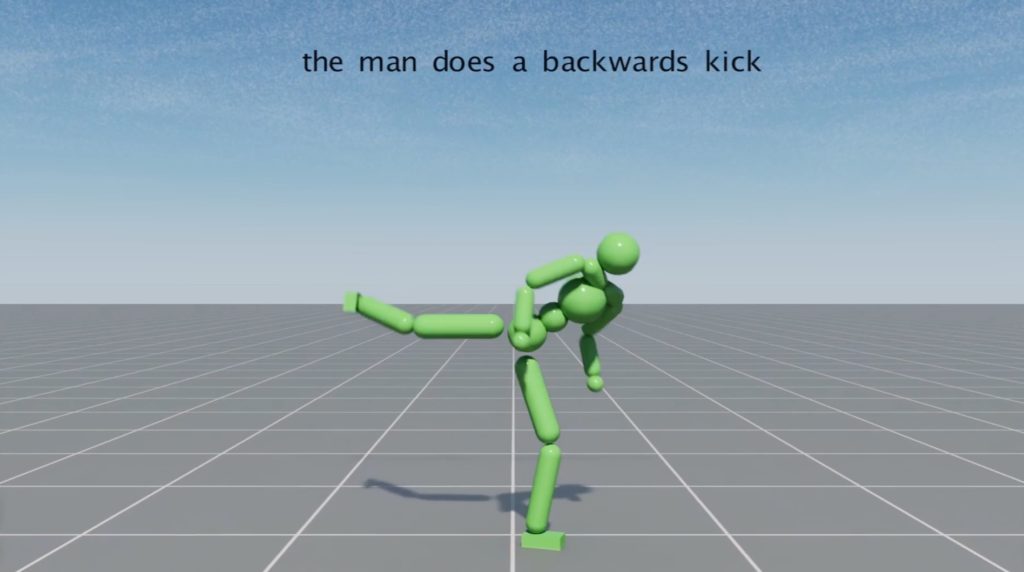

NVIDIA remains a leader in physics-based simulation and is presenting several new research papers on breakthroughs in the field. One of these, SuperPADL, generates complex human motions from text prompts using a combination of reinforcement learning and supervised learning. The prototype model can perform more than 5,000 skills and is efficient enough to run on consumer-grade NVIDIA GPUs.

Another paper proposes a neural physics method that lets AI learn how a wide variety of objects move within a given environment, whether the object is a 3D mesh, a NeRF, or a solid object generated by a text-to-3D model. A new renderer capable of thermal analysis, electrostatics, and fluid mechanics will also be showcased, promising significant utility in engineering.

Taking Diffraction Simulation and Rendering Realism to the Next Level

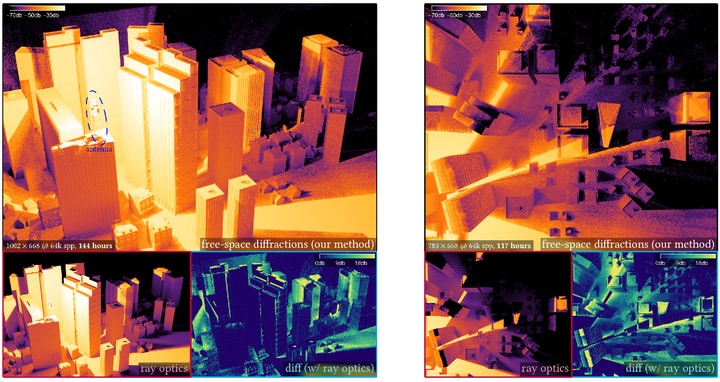

Several NVIDIA-authored papers improve light modeling, making visible-light simulation up to 25x faster and diffraction simulation up to 1,000x faster. These advancements make it possible to integrate free-space diffraction into path-tracing workflows and to simulate waves with longer wavelengths, such as radar, sound, and radio waves.

A path-tracing technique developed in collaboration with the University of Utah reuses previously computed paths to increase the effective sample count by up to 25x, improving image quality and reducing visual artifacts.
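
The post does not say how the paths are reused. Path-reuse methods such as the ReSTIR family are built on weighted reservoir sampling, so, assuming this technique is of that kind, the sketch below shows the core building block: a reservoir that keeps one candidate from a stream with probability proportional to its weight, and a merge step that folds in a neighbor's reservoir so one stored sample stands in for every candidate both have seen. The class and method names are hypothetical, and the merge assumes both reservoirs share the same target function, a simplification real renderers cannot make.

```python
import random
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class Reservoir:
    """Weighted reservoir: keeps one candidate from a stream, chosen with
    probability proportional to its resampling weight."""
    sample: Optional[Any] = None  # currently selected candidate (e.g. a path)
    w_sum: float = 0.0            # running sum of candidate weights
    m: int = 0                    # number of candidates this reservoir represents

    def update(self, candidate: Any, weight: float, rng: random.Random) -> None:
        self.w_sum += weight
        self.m += 1
        # Replace the current pick with probability weight / w_sum.
        if self.w_sum > 0 and rng.random() < weight / self.w_sum:
            self.sample = candidate

    def merge(self, other: "Reservoir", rng: random.Random) -> None:
        """Reuse a neighbor's work: fold its reservoir in as one candidate that
        carries its whole weight. Simplification: assumes both reservoirs used
        the same target function, so no reweighting is needed."""
        total_m = self.m + other.m
        self.update(other.sample, other.w_sum, rng)
        self.m = total_m  # count every candidate the neighbor already saw
```

Merging is cheap (one random draw), which is why reusing neighbors' reservoirs can raise the number of candidates each pixel effectively draws from without tracing more paths.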

Teaching AIs to Think in 3D

In perhaps the most intriguing development, NVIDIA researchers have found a way to bring 3D representations and design into a multipurpose AI tool. One paper introduces fVDB, a GPU-optimized framework for 3D deep learning that handles large spatial scales at high resolution, supporting 3D models, NeRFs, and the segmentation and reconstruction of large-scale point clouds.
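
The post does not describe fVDB’s API, so the sketch below uses no fVDB calls. It only illustrates the general idea behind sparse voxel frameworks such as the VDB family: store only the voxels a point cloud actually occupies, keyed by integer coordinates, and answer neighborhood queries against that sparse set. The function names `voxelize` and `occupied_neighbors` are hypothetical.

```python
import math
from collections import defaultdict


def voxelize(points, voxel_size):
    """Bucket 3D points into a sparse voxel grid.

    Returns {(i, j, k): [points...]} containing only occupied voxels, so
    memory grows with the number of occupied cells rather than with the
    bounding volume.
    """
    grid = defaultdict(list)
    for x, y, z in points:
        key = (math.floor(x / voxel_size),
               math.floor(y / voxel_size),
               math.floor(z / voxel_size))
        grid[key].append((x, y, z))
    return dict(grid)


def occupied_neighbors(grid, key):
    """Return the occupied voxels among the 26 neighbors of `key`.
    Neighborhood lookups like this are the basis of sparse 3D convolutions."""
    i, j, k = key
    result = []
    for di in (-1, 0, 1):
        for dj in (-1, 0, 1):
            for dk in (-1, 0, 1):
                if (di, dj, dk) == (0, 0, 0):
                    continue
                nb = (i + di, j + dj, k + dk)
                if nb in grid:
                    result.append(nb)
    return result
```

A GPU framework would replace the Python dictionary with a hierarchical, GPU-resident structure, but the payoff is the same: empty space costs nothing.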

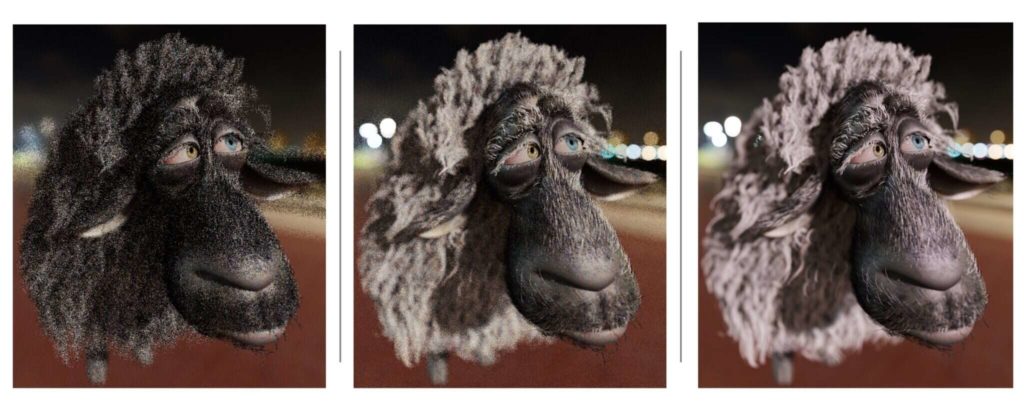

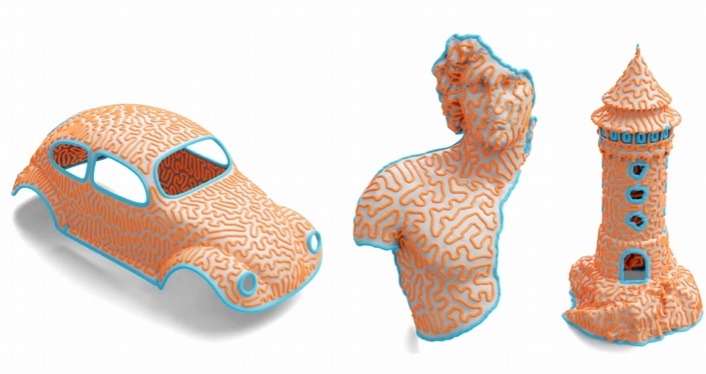

An award-winning paper written with Dartmouth College researchers explores how 3D objects interact with light. Meanwhile, a collaboration with the University of Tokyo, the University of Toronto, and Adobe Research presents an algorithm that generates smooth, space-filling curves on 3D meshes in real time, reducing a labor-intensive process to mere seconds.
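
For readers new to the term, a space-filling curve is a single path that visits every part of a region. The classic discrete example is the Hilbert curve, which visits every cell of a 2^n × 2^n grid exactly once while only ever stepping between adjacent cells. The sketch below is that textbook construction on a flat grid, not the paper’s algorithm, which works on mesh surfaces and optimizes for smoothness; the function name is our own.

```python
def hilbert_d2xy(order, d):
    """Map a distance d along a Hilbert curve to grid cell (x, y) on a
    2**order x 2**order grid (standard iterative construction)."""
    n = 1 << order
    x = y = 0
    t = d
    s = 1
    while s < n:
        rx = 1 & (t // 2)
        ry = 1 & (t ^ rx)
        # Rotate/reflect the current sub-square so sub-curves join end to end.
        if ry == 0:
            if rx == 1:
                x = s - 1 - x
                y = s - 1 - y
            x, y = y, x
        # Offset into the quadrant selected by (rx, ry).
        x += s * rx
        y += s * ry
        t //= 4
        s *= 2
    return x, y
```

For example, `[hilbert_d2xy(1, d) for d in range(4)]` traces the basic U shape `(0, 0), (0, 1), (1, 1), (1, 0)`; higher orders repeat that shape recursively.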

More from NVIDIA at SIGGRAPH

Beyond the technology demonstrations, the conference will feature sessions including a fireside chat between NVIDIA CEO Jensen Huang and Meta founder and CEO Mark Zuckerberg, a conversation between Huang and WIRED senior writer Lauren Goode, and OpenUSD Day, dedicated to the industry adoption and evolution of OpenUSD for building AI-enabled 3D pipelines.