In addition to the fresh news about Omniverse and OpenUSD, NVIDIA, as anticipated, has unveiled an array of AI-focused updates spanning both hardware and software domains. Let’s delve into the details.

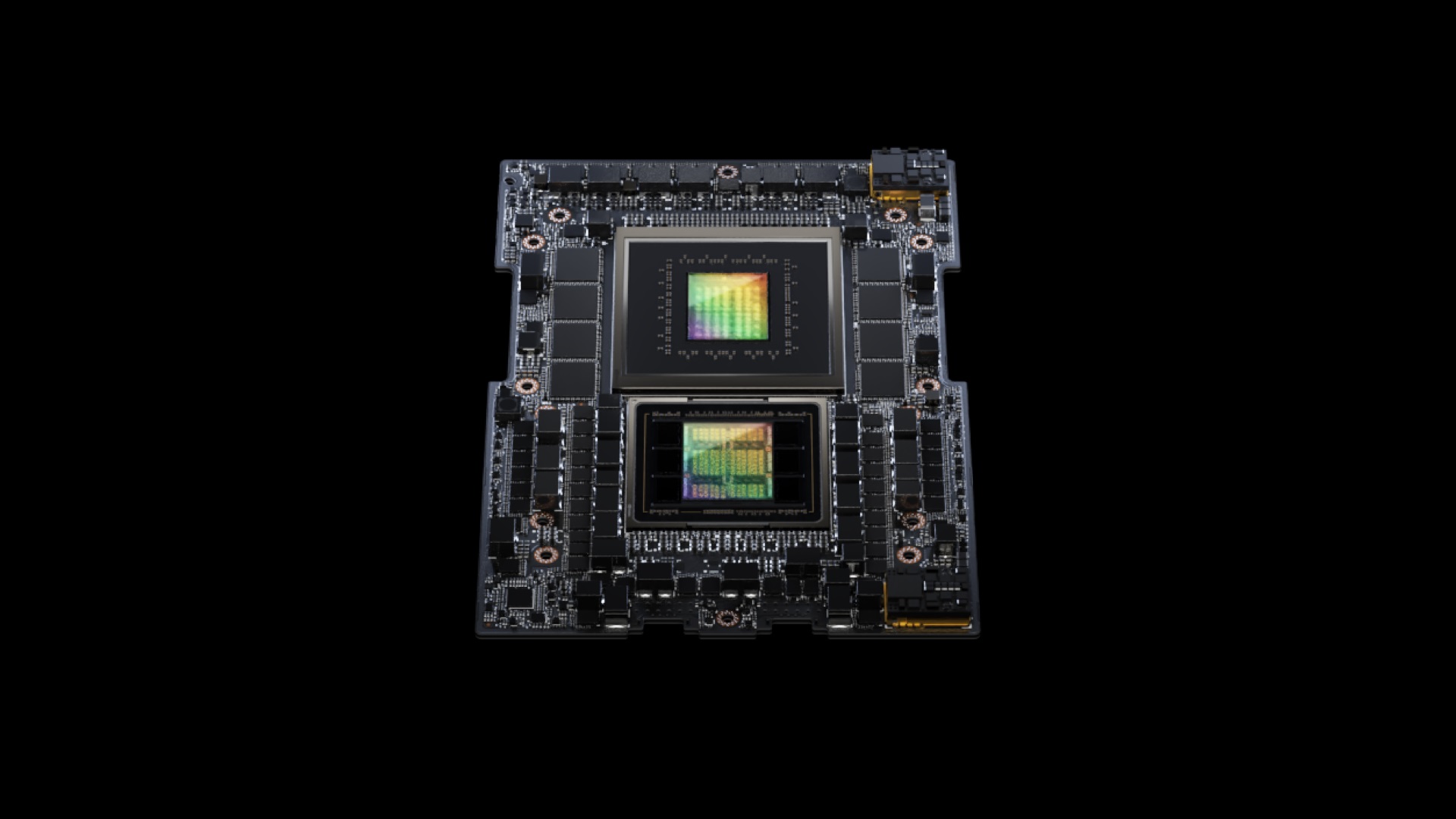

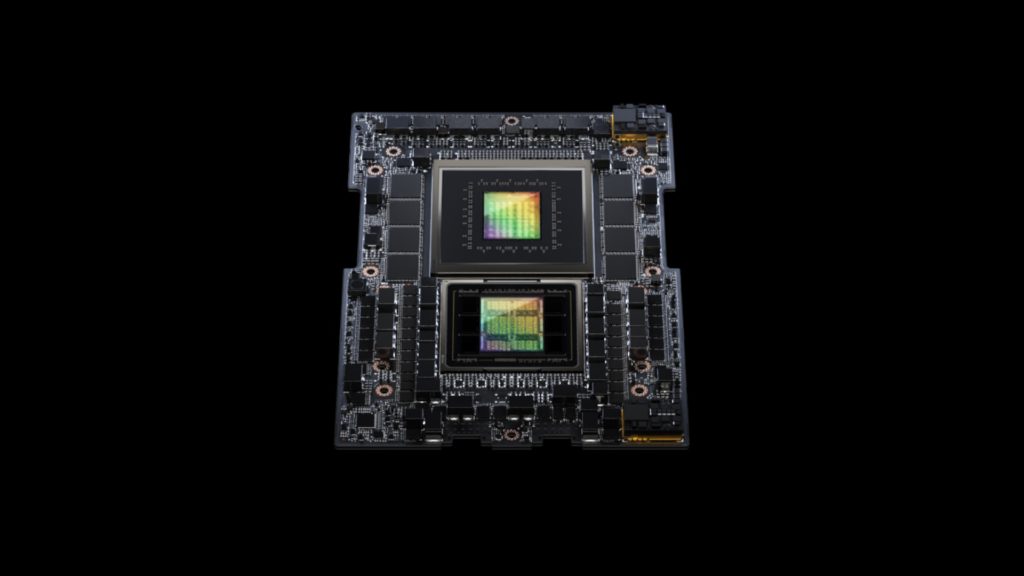

The most significant announcement is the debut of the GH200 Grace Hopper Superchip, billed as the world’s first HBM3e processor and built for generative AI and accelerated computing workloads.

The processor targets demanding workloads ranging from LLMs to recommender systems and vector databases. The recommended configuration, a dual-chip setup, offers 3.5 times more memory capacity and 3 times more bandwidth than current-generation offerings.

NVLink, NVIDIA’s GPU interconnect, links the chips so that the GPUs have full access to the 1.2TB of memory available in the system.

As for HBM3e, it is essentially an enhanced iteration of HBM3, delivering a combined bandwidth of 10TB/s.

Systems equipped with the GH200 are expected to start shipping in Q2 2024.

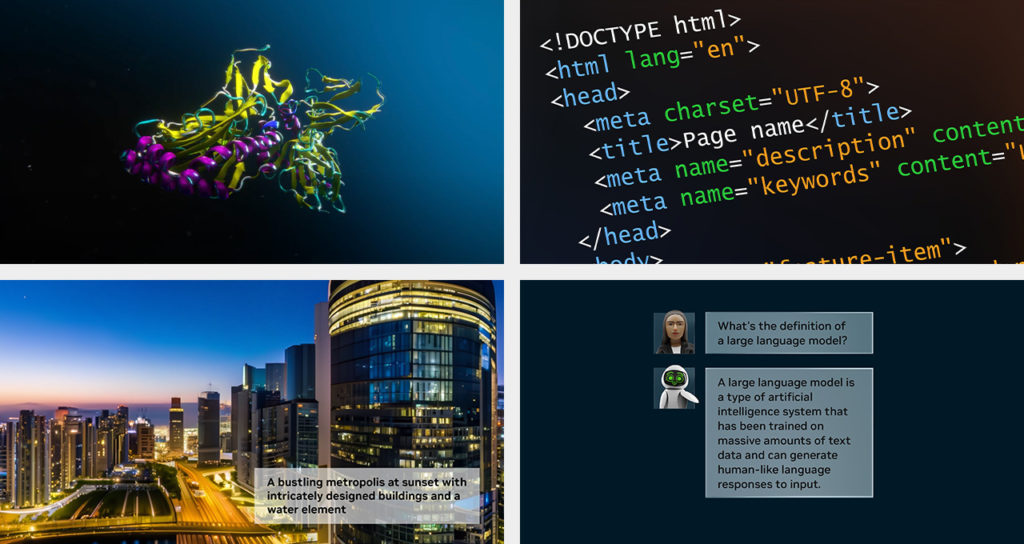

Shifting our focus to software, a new platform has emerged: the NVIDIA AI Workbench. It is aimed at developers who need to quickly create, test, and refine generative AI models on a PC or workstation, and then deploy them to data centers or cloud environments.

The primary aim of the AI Workbench is to simplify complexities by offering pre-built models from Hugging Face, GitHub, and NVIDIA NGC, serving as an excellent starting point for development. Users operating Windows or Linux-powered systems with RTX cards can conduct testing on local machines and simultaneously assess resource requirements for scaling in the cloud.

The AI Enterprise 4.0 platform, which includes NVIDIA NeMo, NVIDIA Triton Management Service, and NVIDIA Base Command Manager Essentials, provides everything needed to set up an AI model training environment.

Prominent companies such as ServiceNow, Snowflake, Dell, Google Cloud, Microsoft Azure, and others are integral parts of this ecosystem, offering diverse solutions for specific needs.

Lastly, the extensive AI community, Hugging Face, has forged a partnership with NVIDIA to democratize generative AI technology at the supercomputing level. Users of the Hugging Face platform may gain access to NVIDIA DGX Cloud for robust processing capabilities to develop transformative models. This collaboration can elevate applications across various industries by tapping into over 250,000 models and 50,000 datasets.

The collaboration also entails Hugging Face introducing the Training Cluster as a Service (TCaaS) in the upcoming months. This feature empowers businesses to directly train their own generative AI models in the cloud.

Speaking of the cloud, each NVIDIA DGX Cloud instance hosts eight H100 or A100 80GB Tensor Core GPUs, for a total of 640GB of GPU memory. Let’s not forget the backbone of all the interconnected systems: NVIDIA Networking, which provides the low-latency fabric needed to scale workloads across clusters of systems.
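
The 640GB figure is simple arithmetic: eight GPUs with 80GB each. As a rough illustration of what that capacity means, the Python sketch below reproduces the total and estimates how many model parameters would fit if only the weights were stored. The function names, the 2-bytes-per-parameter (16-bit) default, and the 1GB = 10^9 bytes convention are assumptions made for this example, not NVIDIA figures, and the estimate ignores activations, optimizer state, and other runtime overhead.

```python
# Figures taken from the article: 8 GPUs per DGX Cloud instance, 80GB each.
GPUS_PER_INSTANCE = 8
MEMORY_PER_GPU_GB = 80


def total_gpu_memory_gb(gpus: int = GPUS_PER_INSTANCE,
                        per_gpu_gb: int = MEMORY_PER_GPU_GB) -> int:
    """Aggregate GPU memory of one instance, in GB."""
    return gpus * per_gpu_gb


def max_params_billions(memory_gb: float, bytes_per_param: int = 2) -> float:
    """Upper bound on parameters (in billions) whose weights alone fit.

    Assumption: 1GB = 1e9 bytes, so GB / bytes-per-parameter gives billions
    of parameters. Real workloads need extra room for activations, optimizer
    state, and caches, so usable capacity is lower.
    """
    return memory_gb / bytes_per_param


if __name__ == "__main__":
    total = total_gpu_memory_gb()
    print(f"Total GPU memory: {total} GB")
    print(f"Weights-only bound at 16-bit: {max_params_billions(total):.0f}B parameters")
    print(f"Weights-only bound at 32-bit: {max_params_billions(total, 4):.0f}B parameters")
```

Treat the result as a ceiling rather than a sizing guide: training in particular needs several times more memory per parameter than the weights alone.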