At the COMPUTEX 2022 Forum, NVIDIA’s Richard Kerris, Vice President of the Omniverse developer platform, sheds light on the enormous opportunities simulation brings to 3D workflows and the next evolution of AI.

Kerris talks about all things virtual, from Omniverse to 3D workflows, digital twins, and more, offering a glimpse into how enterprises can get a head start with the Omniverse platform.

Omniverse as the Connector of Virtual Worlds

Kerris likened the Metaverse to a network, explaining that this three-dimensional network will represent the next generation of the web.

Individuals will be able to traverse virtual worlds seamlessly, unlocking novel ways of doing things. Virtual worlds, according to Kerris, are essential for the next era of Artificial Intelligence (AI).

“Here at NVIDIA, we believe virtual worlds are essential for the next era of AI. They’ll be used for things like training robots and autonomous vehicles, digital twins where you can monitor and manage a factory,” Kerris shared.

Within this landscape, NVIDIA’s Omniverse serves as a connector of virtual worlds. The platform, which enables real-time 3D simulation, design collaboration, and the creation of virtual worlds, aids professionals from various industries while offering them a head start.

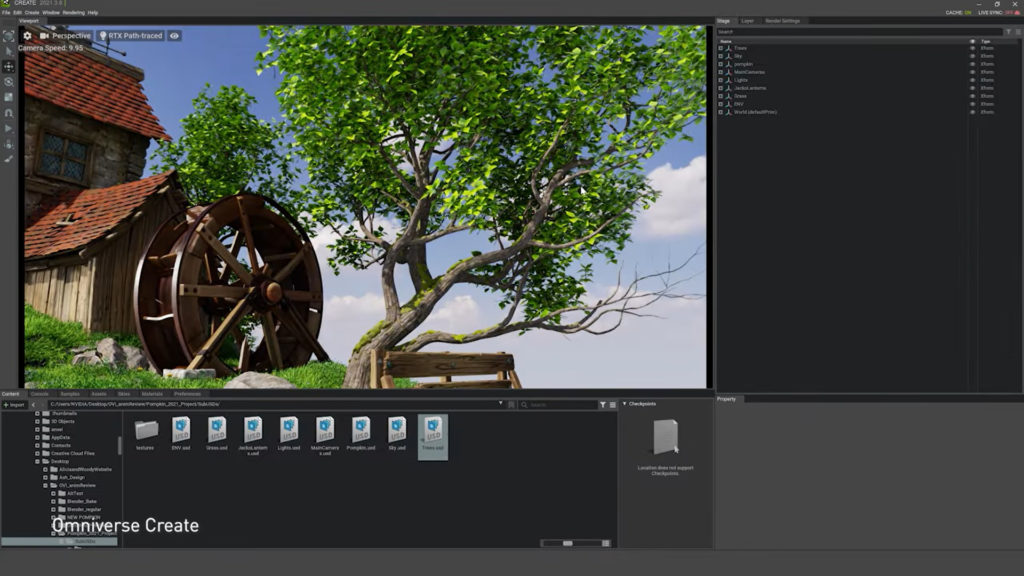

Omniverse Create: Optimizing 3D Workflows in a Hybrid Workforce

Using artists, designers, engineers, and visualization experts as examples, Kerris explained that 3D workflows require teams with a wide range of highly specialized skills. Each area of expertise needs its own system setup, from laptop to data center, physical or virtualized, and each discipline relies on its own software applications.

Because so much software is available on the market, these professionals often have to juggle a slew of tools, many of which are incompatible with one another.

“Every major design software company has its own independent planetary system of add-ons, adjacent products, and customizations, and they are often incompatible. This leads to workflows plagued with tedious import and export often causing model decimation, mistakes, and time lost. This has often forced customers to unwillingly adopt integrated products from the same vendors which can limit productivity, creativity, and the ability of teams,” Kerris lamented.

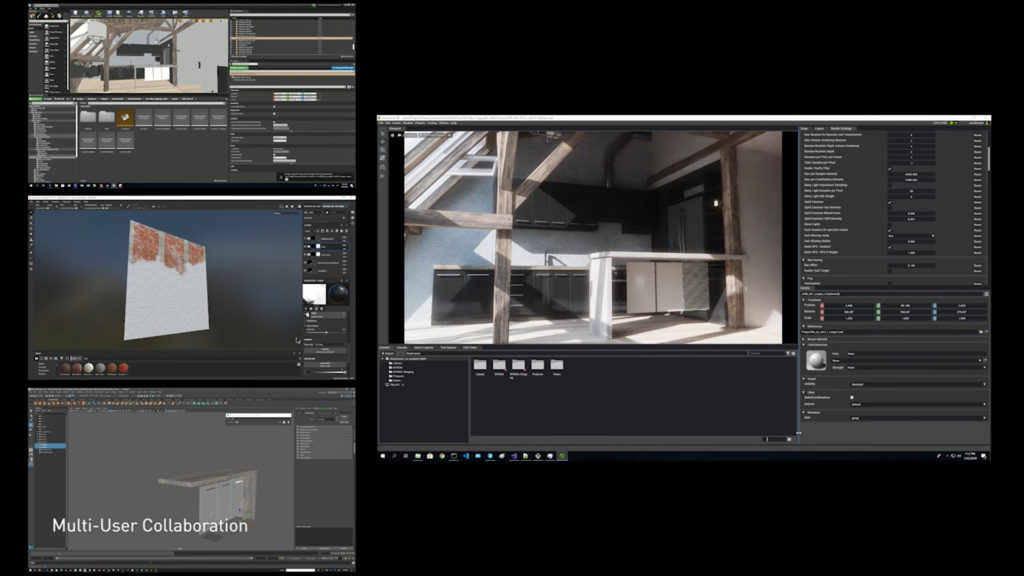

To connect and accelerate these 3D workflows, Kerris shared, the Omniverse platform lets artists link their apps to a single, shared scene and compose that scene on their own or together with other artists across the globe. During collaboration, changes made by one designer are reflected for all the other artists.

Omniverse thus offers users a head start, especially today, when artists, designers, engineers, and researchers are integrating technologies like global illumination, real-time ray tracing, AI, compute, and engineering simulation into their daily workflows.

Kerris went on to discuss users in the Media and Entertainment (M&E) industry and the Architecture, Engineering, and Construction (AEC) industry.

“In M&E, the growing expectation for high-quality content means that firms are spending upwards of six and a half billion compute hours per year rendering content. In AEC, advanced technologies in perfectly physical, accurate visualizations are quickly becoming crucial to an industry that is expected to reach 12.9 trillion dollars in the next year,” Kerris explained.

Taking things a step further, Kerris described Omniverse as a two-part journey. In the first part, users create content, or virtual worlds, using tools that connect live to the Omniverse platform so they can collaborate with creators and designers across the globe.

In the second part, users operate what they have created as a digital twin. They can manage their creations in real time and understand what is taking place in the synthetic world and how it relates to the physical world.

“Omniverse can be thought of as a network of networks, and what we mean by that is you can connect existing applications that you’re using today to take advantage of all the things the platform has to offer, you can extend the platform to customize it to meet your needs, and you can even build applications on top of the platform,” said Kerris.

This, Kerris said, is the future of 3D content creation and how virtual worlds will be built.

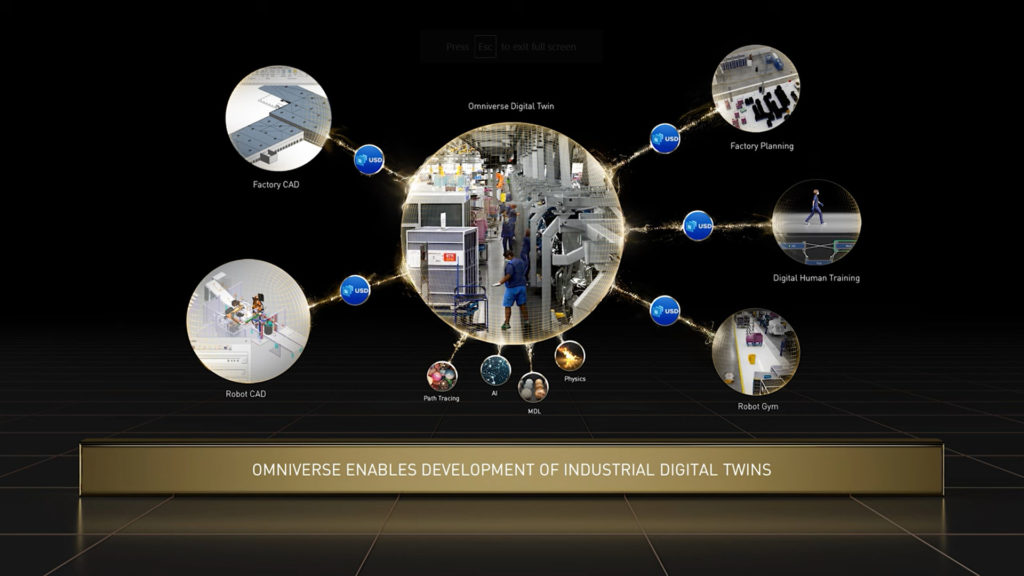

Omniverse Enterprise: Building and Managing Digital Twins

Beyond Omniverse for creators, which connects existing applications to a single platform, Kerris introduced Omniverse Enterprise for companies that want to use the platform for design collaboration and for building and managing digital twins.

“All types of industries will use digital twins to understand how they’re manufacturing something, how the operation of that device will take place afterward, and all kinds of scenarios that they wouldn’t have been able to imagine before. There are many steps to building a digital twin, and today Omniverse Enterprise customers can start with the building blocks,” Kerris said.

Enterprises can also use Omniverse to generate synthetic data that is indistinguishable from reality, reducing AI training time and increasing the accuracy of AI systems in the real world.

“Customers like Amazon Robotics and BMW are using Omniverse to build digital twins of their warehouses and factories. Companies like DNEG are using Omniverse to do pre-visualization for the work that they’re doing in the media and entertainment space,” shared Kerris.

Kerris also highlighted Foster and Partners and KPF, which are using Omniverse to build full-fidelity, physically accurate simulations of the buildings and environments in which their architectural work takes place. Similarly, Ericsson used the Omniverse platform to build a digital twin of a city to understand how to deploy 5G antennas across the environment.

According to Kerris, digital twins will not stop at whole cities: NVIDIA and its partners are currently working on Earth-2, a digital twin of the entire planet.

A Glimpse Into What to Expect From the Omniverse Platform

Once in Omniverse, users can draw on NVIDIA’s “superpowers,” such as Physics, which lets artists run true-to-life simulations that obey the laws of physics, and the RTX Renderer, which shows their scene rendered in real time, fully RTX ray traced or path traced.

Kerris explained that Physics helps users understand how things will behave in the digital world by simulating elements such as water, smoke, and fire and bringing them into the workflows they already use.

Siemens, for example, used Omniverse together with NVIDIA Modulus, a framework for physics-ML models, to quickly run physics-based super-resolution simulations of wind farms that accurately reflect real-world performance. The resulting optimization made it possible to supply power to up to 20,000 additional homes at 10% lower cost.

The Accessibility of Omniverse

Omniverse is built to run on RTX, whether on a laptop, a workstation, or in a data center and beyond. Emphasizing accessibility, Kerris shared that NVIDIA recently announced OVX, a computing system dedicated to Omniverse that can start with a workstation and scale all the way up to a superpod, handling enormous amounts of data to deliver true-to-reality simulation at any scale.

“Omniverse is a platform that serves developers, that serves creators and designers, and even enterprises. For developers, it’s free to develop on and free to deploy anything that you create for Omniverse whether you’re connecting with an extension or connecting to an existing app or modifying the application or even building applications on the platform. Right out of the box, users can enhance and extend their existing workflows without the need to rebuild their entire existing pipeline,” Kerris said.

Essentially, the Omniverse stack is built for maximum flexibility and scalability. By building on Omniverse, developers can harness over 20 years of NVIDIA technology in rendering, simulation, and AI. They can integrate it into their existing apps or build their own apps and services.

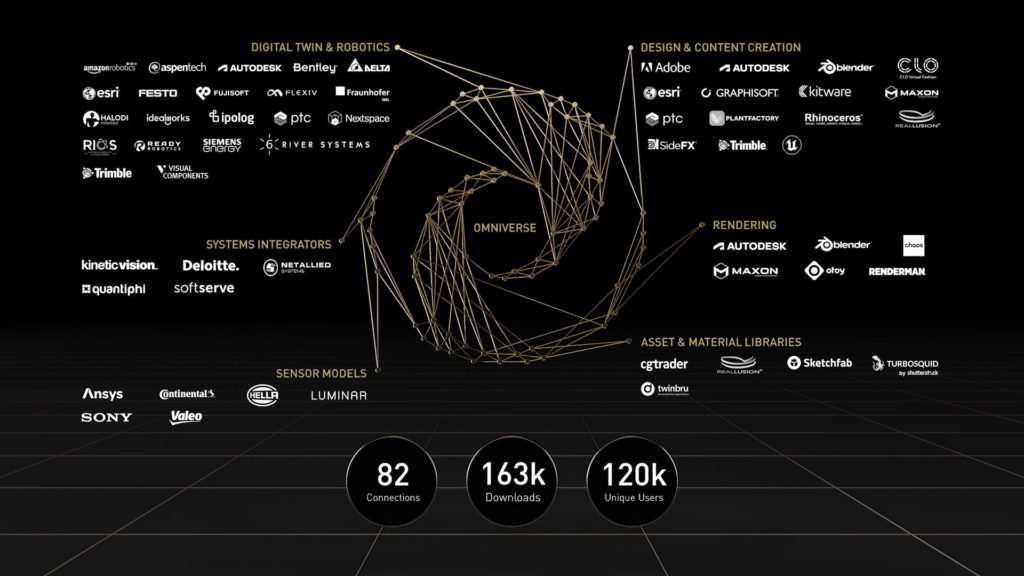

The Past, Present, and Future of Omniverse

Omniverse would not have been possible just a few years ago. According to Kerris, four key technologies have come together to make it possible: (1) RTX technology, which enables true-to-reality simulation in real time on everything from laptops to servers and even the cloud; (2) the ability to scale these GPUs together to handle extensive data sets; (3) Universal Scene Description (USD), Pixar’s open-source 3D scene description and file format; and (4) the AI revolution.

At present, 82 connectors link asset libraries, applications, and more to Omniverse. Kerris also shared that Omniverse has been downloaded more than 160,000 times by individuals who are using the product in a wide variety of ways.

Looking to the future, Kerris is confident that the features and powerful capabilities of the Omniverse platform will transform the way we create.

“Whether it’s factories of the future, media and entertainment, or visualization of landscapes we have yet to imagine, the Omniverse will transform the way we create,” Kerris concluded.