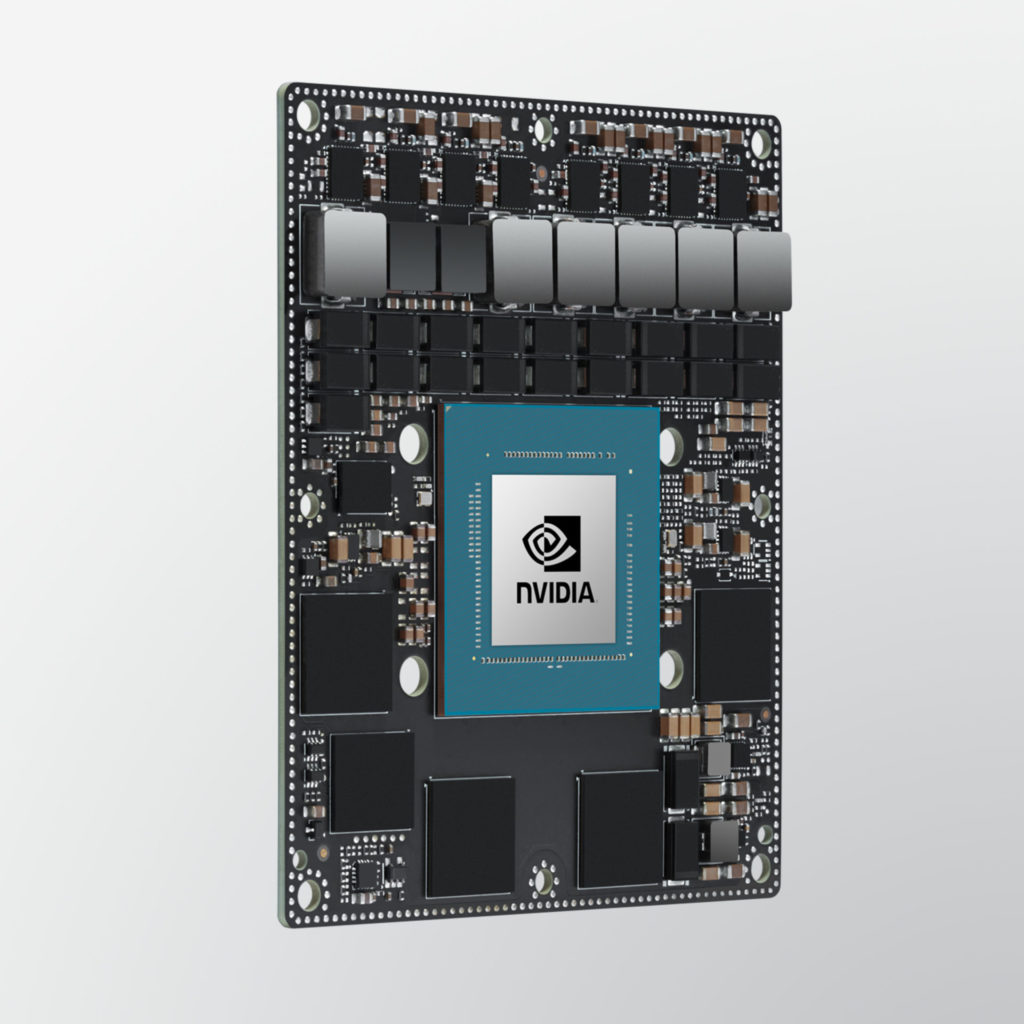

In its debut in the industry MLPerf benchmarks, NVIDIA Orin, a low-power system-on-chip based on the NVIDIA Ampere architecture, set new records in AI inference, raising the bar in per-accelerator performance at the edge.

Overall, NVIDIA and its partners continued to show the highest performance and broadest ecosystem for running all machine-learning workloads and scenarios in this fifth round of the industry metric for production AI.

In edge AI, a pre-production version of our NVIDIA Orin led in five of six performance tests. It ran up to 5x faster than our previous-generation Jetson AGX Xavier, while delivering an average of 2x better energy efficiency.

NVIDIA Orin is available today in the NVIDIA Jetson AGX Orin developer kit for robotics and autonomous systems. More than 6,000 customers, including Amazon Web Services, John Deere, Komatsu, Medtronic and Microsoft Azure, use the NVIDIA Jetson platform for AI inference or other tasks.

Read the full blog post here: https://blogs.nvidia.com/blog/2022/04/06/mlperf-edge-ai-inference-orin/