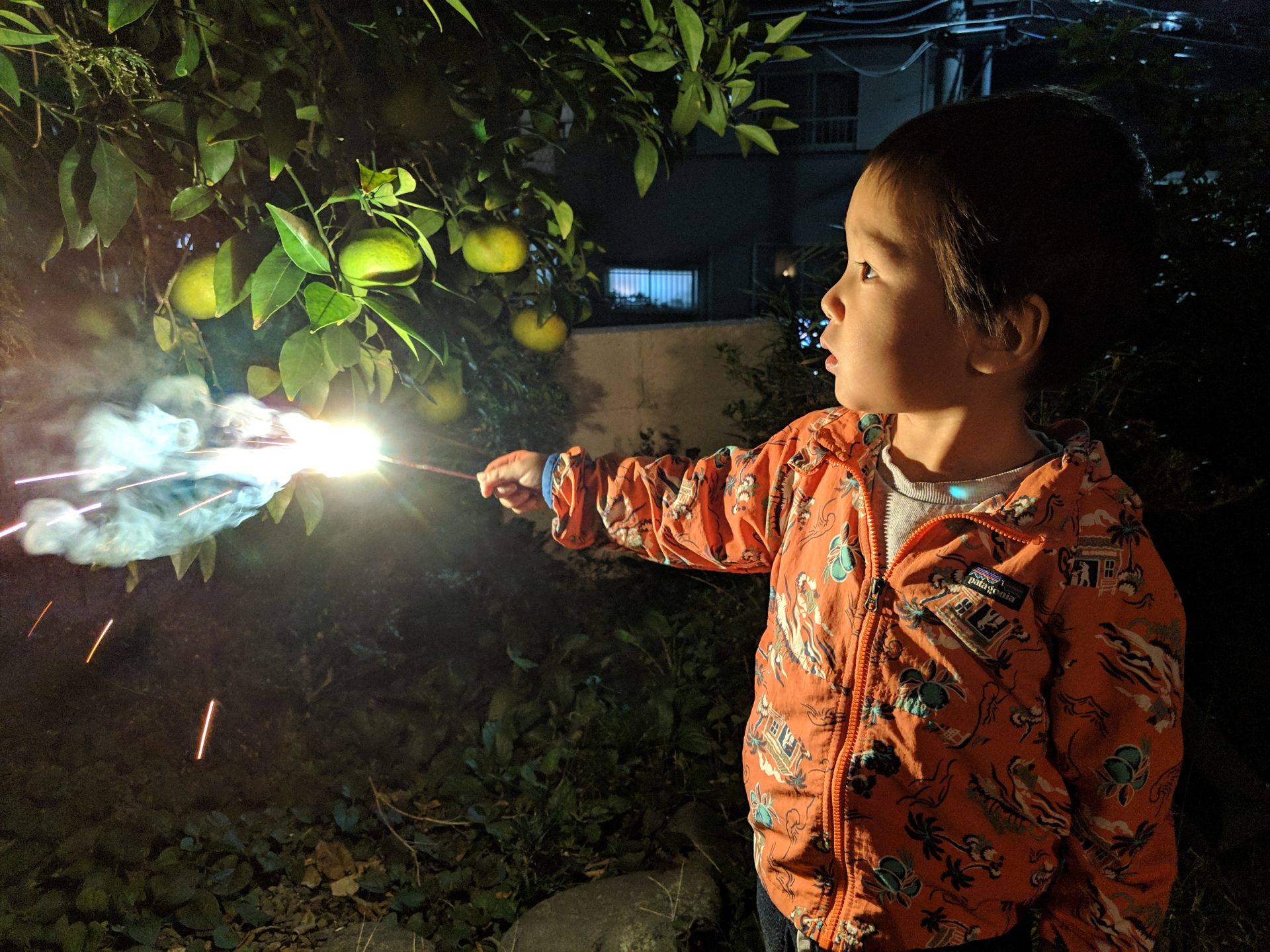

Night photography has always been troublesome for smartphone owners. Photos often turn out either too blurry or too dark, which can ruin the moment you were trying to capture. While many smartphone manufacturers have opted to add more cameras to their devices, Google has stuck with a software approach to solving this issue. Following the recent launch of the Pixel 3 and Pixel 3 XL, it has finally released the long-awaited Night Sight, which had been teased for ages.

We also heard from Marc Levoy, a Distinguished Engineer at Google who previously taught digital photography at Stanford University, who explained how Night Sight works on the Pixel 3.

What is Night Sight?

Google took its original HDR+ mode, which merges a burst of photos for improved dynamic range, and kicked it up a notch. Night Sight still follows this basic principle, but now takes up to 15 photos with varying levels of exposure. This allows the device to brighten the darker portions of the scene while preventing other sections from being overexposed. It also means that if you have a tripod, your photos can look amazing!
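To get a feel for why merging a burst helps in the dark, here is a minimal Python sketch. It is not Google's merging pipeline: it assumes the frames are already perfectly aligned, uses a made-up flat gray patch with random sensor noise, and simply averages the frames pixel by pixel. The function names and all the numbers are hypothetical.

```python
import random
import statistics


def simulate_frame(true_value, noise_sigma, n_pixels, rng):
    """Return one noisy frame of a flat patch (hypothetical sensor model)."""
    return [true_value + rng.gauss(0.0, noise_sigma) for _ in range(n_pixels)]


def merge_burst(frames):
    """Average already-aligned frames pixel by pixel."""
    n = len(frames)
    return [sum(pixels) / n for pixels in zip(*frames)]


rng = random.Random(0)  # fixed seed so the run is repeatable
# 15 frames of a dim patch (true brightness 20) with noise sigma 5, all assumed values
frames = [simulate_frame(20.0, 5.0, 2000, rng) for _ in range(15)]
merged = merge_burst(frames)

single_noise = statistics.pstdev(frames[0])
merged_noise = statistics.pstdev(merged)
print(f"noise in a single frame: {single_noise:.2f}")
print(f"noise after merging 15:  {merged_noise:.2f}")
```

With independent noise, averaging N frames should cut the noise by roughly the square root of N, so 15 frames give a bit under a fourfold improvement in this toy setup. Real scenes need alignment and rejection of moving objects first, which is where most of the difficulty lies.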

Problem #1 – Motion Blur

This all sounds amazing in theory, but what about motion blur? That is where Motion Metering comes into the picture. It detects how shaky the phone is, as well as how much the subject is moving, and tunes the exposure of each frame accordingly. Shortening the exposure results in less motion blur. If both the device and the scene are stable, a longer exposure can capture an immense amount of detail in a really dark place. This, in addition to Super Res Zoom’s ability to reduce ghosting and noise, might just set it apart from Huawei’s Night Mode.
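The trade-off described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how Motion Metering is actually implemented: the function name, the "blur budget" of 2 pixels, and the exposure limits are all assumptions picked for the example.

```python
def choose_exposure(motion_px_per_s, blur_budget_px=2.0,
                    min_exposure_s=1 / 60, max_exposure_s=1.0):
    """Pick a per-frame exposure time (seconds) from the measured motion.

    motion_px_per_s: how fast the image moves across the sensor, combining
    hand shake and subject movement (hypothetical measurement).
    blur_budget_px: how many pixels of smear we are willing to accept.
    """
    if motion_px_per_s <= 0:
        # Nothing is moving (e.g. on a tripod): use the longest exposure.
        return max_exposure_s
    # Blur is roughly speed x time, so time = budget / speed.
    exposure = blur_budget_px / motion_px_per_s
    # Keep the result within the assumed camera limits.
    return max(min_exposure_s, min(max_exposure_s, exposure))


for label, motion in [("tripod", 0.0), ("steady hands", 20.0), ("moving subject", 1000.0)]:
    print(f"{label}: {choose_exposure(motion):.3f} s per frame")
```

The more motion is detected, the shorter each frame's exposure becomes, and the phone has to rely on merging more frames to recover brightness and detail.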

Do take note that the older Pixel 1 and Pixel 2 lack the Super Res Zoom functionality present in the Pixel 3 lineup, because they lack a sufficiently powerful processor. Instead, they use HDR+ to merge the photos. Marc Levoy also briefly talked about the woes of aligning photos from multiple cameras, and how ghosting and artifacts often occur when doing so.

Problem #2 – Unnatural Lighting

Now we come to the secret sauce of Night Sight. In many dark scenes, photos often turn out unnaturally tinted. As current methods of auto white balancing (AWB) are not sufficient, Google turned to its AI department to develop what it calls a “learning-based AWB algorithm”. Instead of trying to manually guess the actual color temperature of the scene, machine learning is used to make the photo look more neutral. You can take a look at some samples from Google below, with the left being the original.
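For context, here is what a simple, non-learning white balance can look like. The Python sketch below uses the classic "gray world" heuristic, which assumes the average color of a scene should be neutral gray. It is not Google's learning-based algorithm, and the pixel values are made up; it only shows the kind of per-channel correction any AWB method ends up applying.

```python
def gray_world_gains(pixels):
    """Compute per-channel gains that make the average color neutral gray."""
    n = len(pixels)
    mean_r = sum(p[0] for p in pixels) / n
    mean_g = sum(p[1] for p in pixels) / n
    mean_b = sum(p[2] for p in pixels) / n
    gray = (mean_r + mean_g + mean_b) / 3
    return (gray / mean_r, gray / mean_g, gray / mean_b)


def apply_gains(pixels, gains):
    """Scale each channel by its gain, clipping to the 0-255 range."""
    return [tuple(min(255.0, c * g) for c, g in zip(p, gains)) for p in pixels]


# Two orange-tinted pixels, as you might get under street lamps (made-up values)
tinted = [(200, 150, 100), (100, 75, 50)]
gains = gray_world_gains(tinted)
balanced = apply_gains(tinted, gains)
print("gains (R, G, B):", gains)
print("balanced pixels:", balanced)
```

A heuristic like this breaks down when the scene really is dominated by one color, which is common at night. That limitation is the sort of gap a learning-based approach is meant to close.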

Problem #3 – Autofocus

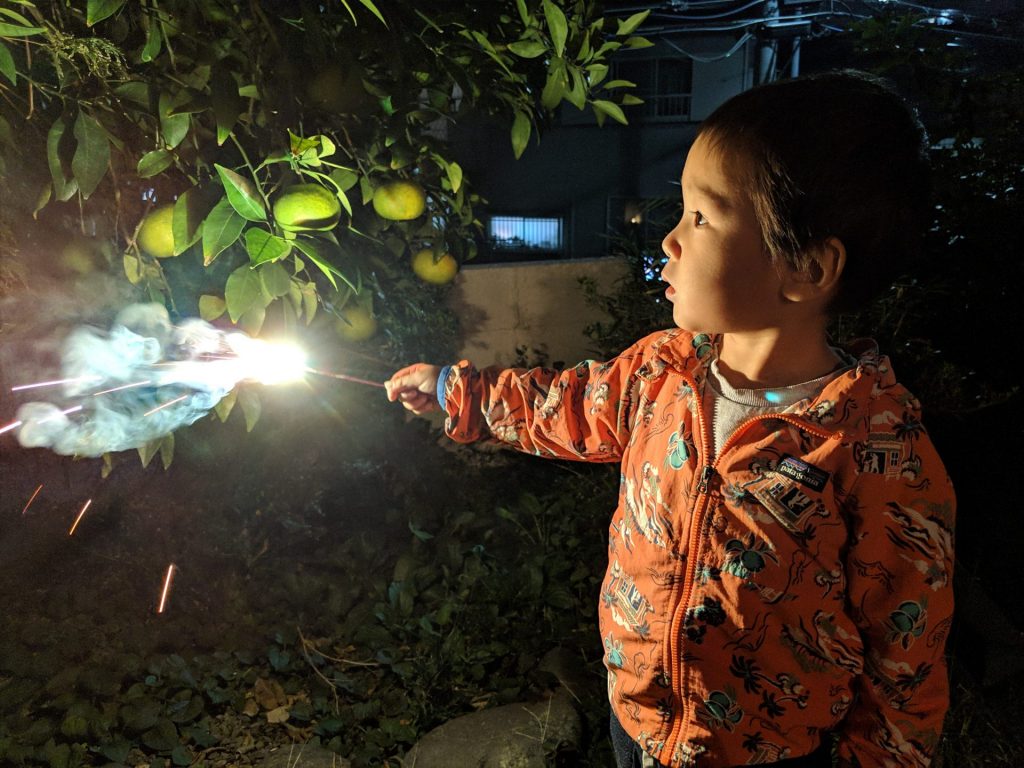

Another issue often faced when taking photos at night is the autofocus failing outright. Unfortunately, even Google has not been able to make it work in scenes darker than 0.3 lux. Its current solution is two manual focus settings, “Near” and “Far”, with focus distances of 4 feet (~1.22 meters) and 12 feet (~3.66 meters) respectively. At this stage, it is still too early to tell whether this is sufficient.

Bright future to come

With Google continually improving the software on its devices while openly publishing its techniques to spur quicker innovation, I am looking forward to what is to come for smartphone photography.

If you would like to read more about the subject, you can take a look at the brief overview, or a more in-depth write-up. You can also check out more comparison photos, and samples of Night Sight in action.