With Artificial Intelligence (AI) becoming ever more integrated into our daily lives, Google gave us the opportunity to sit in on its very first Google AI MasterClass in Singapore. In the opening session, Google briefly walked us through how it uses Machine Learning (ML) not only to improve its services, but also to tackle some of humanity's biggest problems. We were also lucky to have Dr. Sergio Hernandez Marin, who has over 10 years of experience in Machine Learning, with us to share his insights into the whole topic.

Improve Existing Services

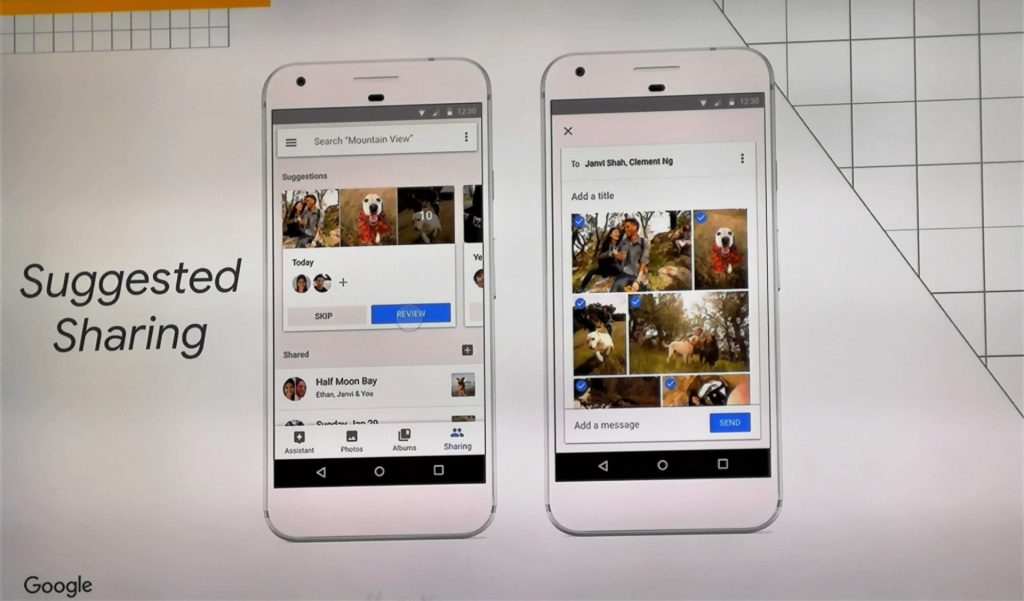

Unbeknownst to some, Google has been using AI to improve its existing applications. Google Photos, for starters, relies on AI working behind the scenes, and Google has kept iterating on it. Powered by Computer Vision, the app helps organize massive collections of photos and memories. Photos are automatically categorized based on their metadata and, more importantly, on the content the app recognizes within them. This lets the app suggest libraries and sharing, and lets you search your photos without manually tagging every single one of them.

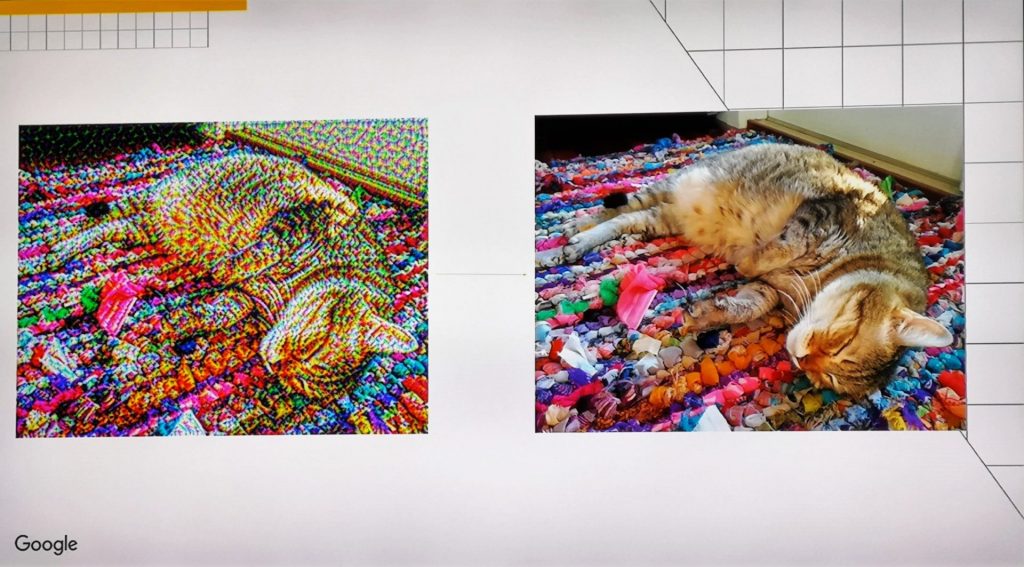

Dr. Sergio also shared how Google built an image classification model using the Inception technique, which stacks small sub-networks (Inception modules) inside a larger neural network. Together, these add up to a complex model that is 22 layers deep.
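To make the Inception idea a little more concrete, here is a minimal Python sketch (our own illustration, not code shown at the MasterClass) that does the bookkeeping for one Inception module: four parallel branches (a 1x1 convolution, a 3x3 convolution, a 5x5 convolution and a max-pooling branch) run on the same input, and their outputs are concatenated along the channel axis. The filter counts are assumed to follow the first Inception module ("3a") of GoogLeNet, the 22-layer network described by Szegedy et al.; the names `InceptionConfig`, `conv_weights`, `output_channels` and `module_weights` are hypothetical helpers, and bias terms are ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InceptionConfig:
    """Filter counts for the four parallel branches of one Inception module."""
    n1x1: int          # branch 1: plain 1x1 convolution
    n3x3_reduce: int   # branch 2: 1x1 "reduce" layer ...
    n3x3: int          # ... followed by a 3x3 convolution
    n5x5_reduce: int   # branch 3: 1x1 "reduce" layer ...
    n5x5: int          # ... followed by a 5x5 convolution
    pool_proj: int     # branch 4: 3x3 max pool, then a 1x1 projection


def conv_weights(k: int, c_in: int, c_out: int) -> int:
    """Weights in a k x k convolution from c_in to c_out channels (no biases)."""
    return k * k * c_in * c_out


def output_channels(cfg: InceptionConfig) -> int:
    """Branch outputs share height/width and are concatenated on the channel axis."""
    return cfg.n1x1 + cfg.n3x3 + cfg.n5x5 + cfg.pool_proj


def module_weights(c_in: int, cfg: InceptionConfig) -> int:
    """Total convolution weights in one module; max pooling itself has no weights."""
    branch1 = conv_weights(1, c_in, cfg.n1x1)
    branch2 = conv_weights(1, c_in, cfg.n3x3_reduce) + conv_weights(3, cfg.n3x3_reduce, cfg.n3x3)
    branch3 = conv_weights(1, c_in, cfg.n5x5_reduce) + conv_weights(5, cfg.n5x5_reduce, cfg.n5x5)
    branch4 = conv_weights(1, c_in, cfg.pool_proj)
    return branch1 + branch2 + branch3 + branch4


# Assumed filter counts of GoogLeNet's module 3a, which receives 192 input channels.
inception_3a = InceptionConfig(64, 96, 128, 16, 32, 32)

print(output_channels(inception_3a))      # 256
print(module_weights(192, inception_3a))  # 163328

# Effect of the 1x1 reduction on the 5x5 branch alone.
direct_5x5 = conv_weights(5, 192, 32)                             # 153600
reduced_5x5 = conv_weights(1, 192, 16) + conv_weights(5, 16, 32)  # 15872
print(direct_5x5, reduced_5x5)
```

The 1x1 "reduce" layers are the small networks tucked inside the bigger one: they shrink the channel count before the expensive 3x3 and 5x5 convolutions. In this sketch, the 5x5 branch needs 153,600 weights when going straight from 192 to 32 channels, but only 15,872 with a 16-channel 1x1 reduction first, which is part of what keeps a 22-layer model affordable.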

On top of that, Google has opened up its AI capabilities to businesses through the likes of TensorFlow, its open-source ML library, and hardware accelerators such as its very own Tensor Processing Unit (TPU).

Better Lives

Google's work does not stop at businesses and consumers. It has also applied ML to real-world problems in healthcare, and even in animal and plant research.

Take diabetic retinopathy, a complication of diabetes, for example. With around 415 million people with diabetes at risk worldwide, it is one of the leading causes of blindness, and preventing that blindness depends on catching the disease in its early stages. To help, Google applied its expertise to build a model capable of identifying the early stages of diabetic retinopathy, training it on a large number of retinal photographs. The model's performance came close to that of a specialist, and its error rate was lower than that of generalists.

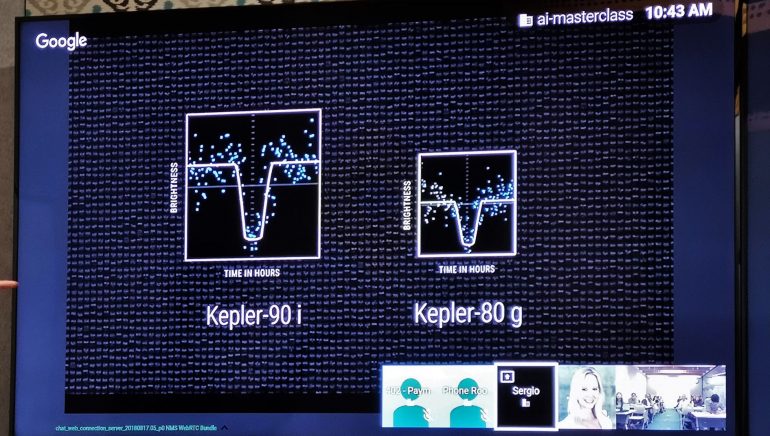

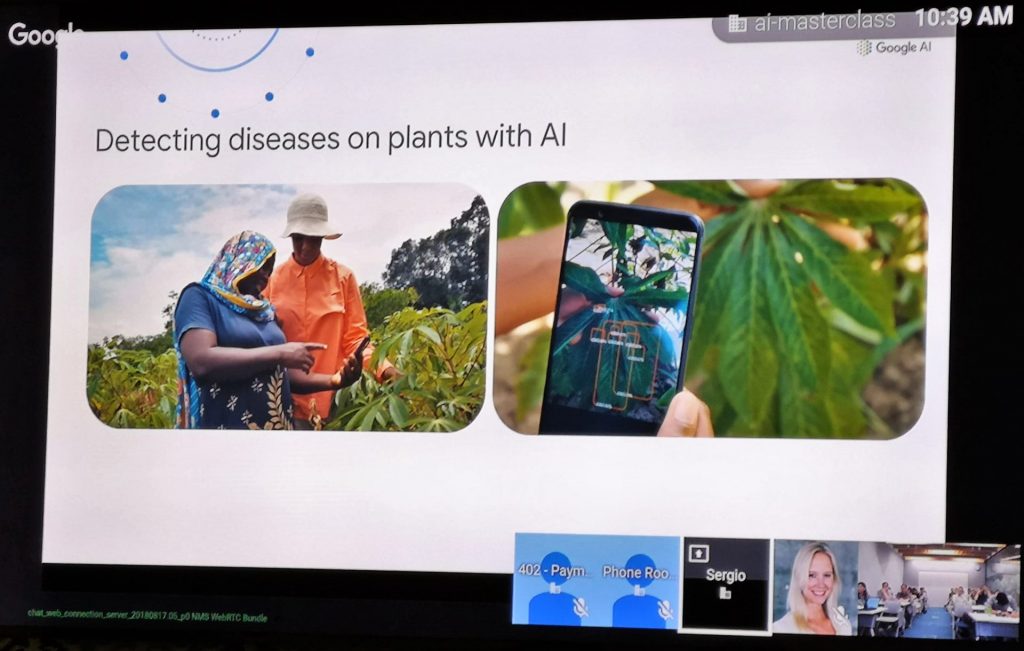

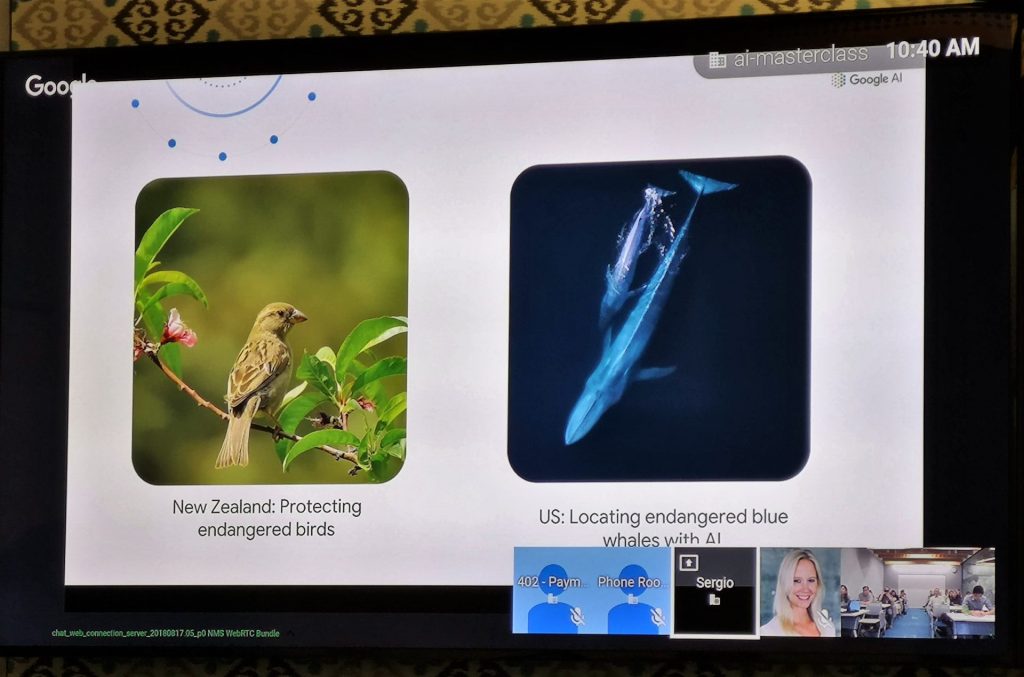

Beyond healthcare, similar methods have been used to help protect plants from disease and to track animal species without resorting to intrusive physical trackers. There have also been cases where Deep Learning was used to identify new planets in data from NASA's Kepler space telescope.

Where will it all go?

Machine Learning is still a relatively young field compared with many others. What is already clear, however, is that it has proven useful in countless areas where it has been applied. Let us hope it leads us somewhere good, and does not become our undoing.