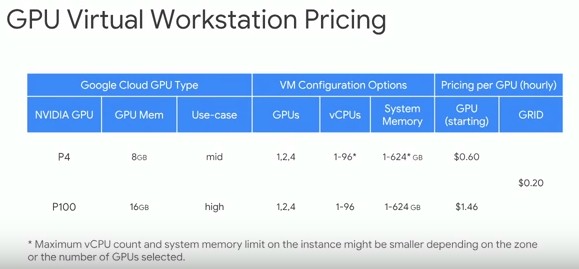

Google just announced that it will integrate NVIDIA’s Tesla P4 GPUs into the Google Cloud Platform. These Tesla GPUs will be dedicated to processing artificial intelligence (AI) inference tasks and will be made available on Google Cloud servers in the U.S. and Europe.

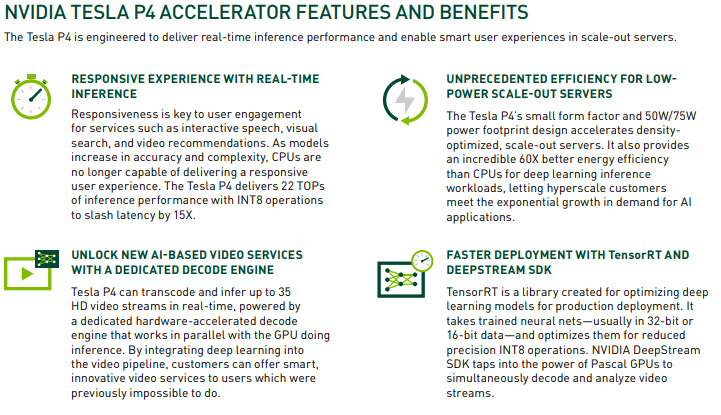

Even though the Tesla P4 was launched in 2016, it is still a very relevant product today, because the Pascal architecture remains one of the most effective on the market. The fact that Google is only now adopting the Tesla P4 for its new compute servers speaks volumes about the product. Google’s decision to integrate the P4 signals a tipping point in customer demand for NVIDIA’s extremely efficient GPU-accelerated inferencing platform.

Despite being branded as an AI inference engine, the Tesla P4 can also be used for a variety of other workloads. These include virtual workstations, visualization, and video transcoding, making the P4 a good choice for the public cloud as well. The Tesla P4 GPUs can also be attached to any Compute Engine instance to create virtual workstations.

According to Paresh Kharya, head of product marketing for Accelerated Computing at NVIDIA,

“Our Tesla P4 GPUs offer 40X faster processing of the most complex AI models to enable real-time AI-based services which require extremely low latency, such as understanding speech and live video and handling live translations. P4 GPUs deliver inference with just 1.8 milliseconds of latency at batch size 1, and consume just 75 watts of power to accelerate any scale-out server, offering best-in-class energy efficiency.”
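To put the quoted figures in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the two numbers from the quote (1.8 ms latency at batch size 1, 75 W of power). The function names are made up for illustration, and the calculation assumes requests are served strictly one at a time and that the card draws its full 75 W for the whole duration of each inference, so the energy figure is an upper bound rather than a measurement.

```python
# Back-of-the-envelope figures derived from the numbers in the quote above.
LATENCY_MS = 1.8       # quoted latency at batch size 1
BOARD_POWER_W = 75.0   # quoted power; assumed to be drawn in full during inference


def sequential_throughput(latency_ms: float) -> float:
    """Inferences per second if requests are served strictly one after another."""
    return 1000.0 / latency_ms


def energy_per_inference_joules(latency_ms: float, power_w: float) -> float:
    """Upper-bound energy per inference: full board power for the whole latency."""
    return power_w * (latency_ms / 1000.0)


if __name__ == "__main__":
    print(f"{sequential_throughput(LATENCY_MS):.1f} inferences per second")
    print(f"{energy_per_inference_joules(LATENCY_MS, BOARD_POWER_W):.3f} J per inference")
```

Under those assumptions, a single P4 handling one request at a time would get through roughly 555 inferences per second at no more than about 0.135 joules each. Real deployments batch requests and rarely run at full board power, so actual numbers will differ.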

While every major cloud provider already offers NVIDIA’s flagship V100 Tensor Core GPUs, NVIDIA still lacks a product that directly replaces the P4. It will be interesting to see whether NVIDIA decides to release a Tesla V4 based on the Volta architecture.

Source: NVIDIA, The Next Platform