NVIDIA has announced Rubin CPX, a new class of GPU designed for massive-context AI processing, enabling workloads such as million-token software coding and generative video with unprecedented efficiency and speed.

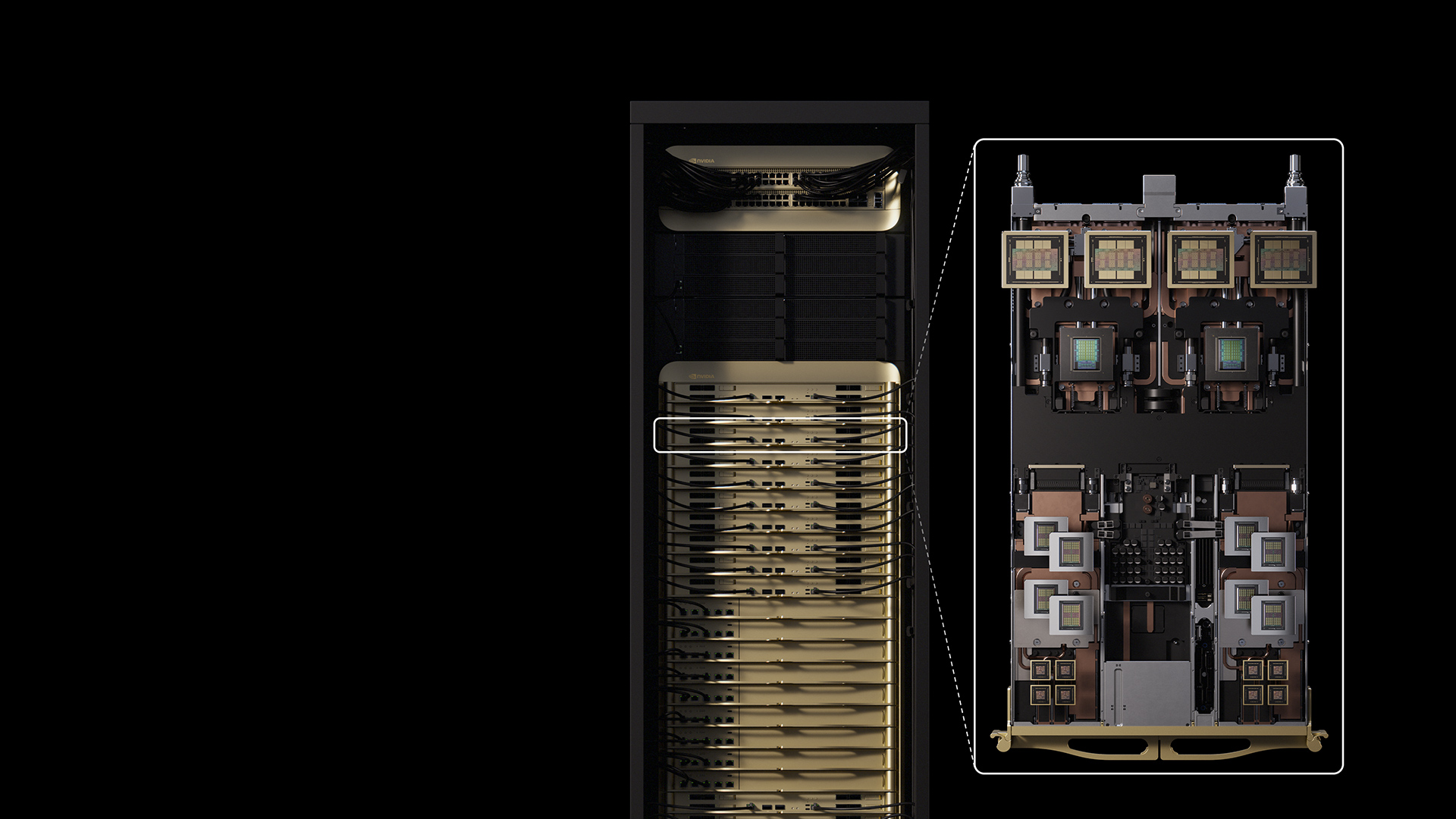

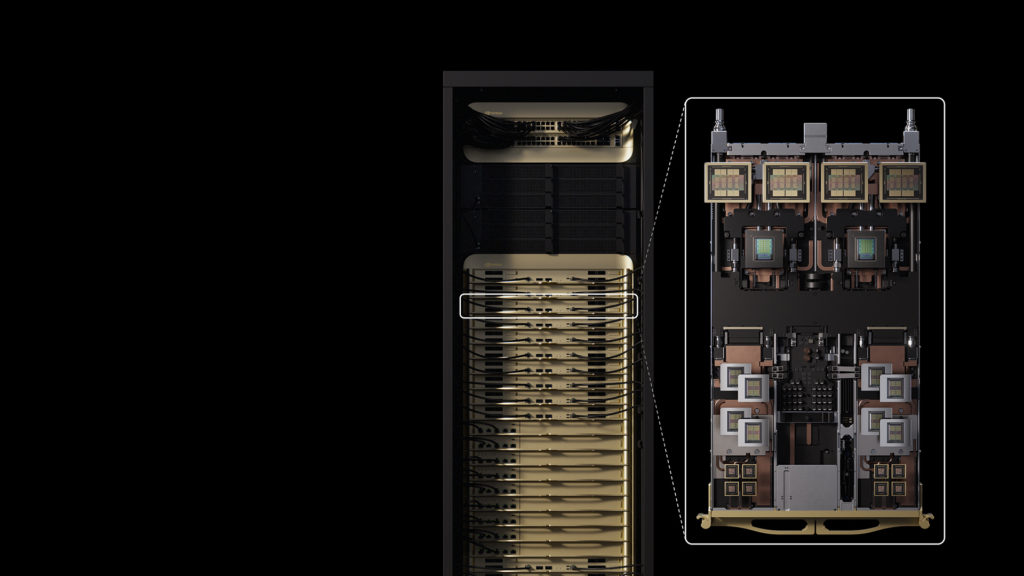

Rubin CPX will debut as part of the Vera Rubin NVL144 CPX platform, where it works alongside NVIDIA Vera CPUs and Rubin GPUs. The system delivers 8 exaflops of AI compute in a single rack, equipped with 100TB of fast memory and 1.7 petabytes per second of bandwidth. NVIDIA says this amounts to 7.5x the AI performance of its GB300 NVL72 systems.

The hardware integrates video decoders, encoders, and long-context inference directly on the GPU, supporting applications like video search and generative video that can demand up to one million tokens for an hour of content. The GPU itself delivers up to 30 petaflops of NVFP4 compute, features 128GB of GDDR7 memory, and provides 3x faster attention capabilities compared to GB300 NVL72 systems.
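To put the one-million-tokens-per-hour figure in perspective, the short sketch below converts it into a per-second rate and estimates token counts for shorter clips. It assumes the token count scales linearly with duration, which the announcement does not state; the function names are illustrative only.

```python
# Figure quoted above: roughly one million tokens for an hour of video.
TOKENS_PER_HOUR_OF_VIDEO = 1_000_000
SECONDS_PER_HOUR = 3600


def tokens_per_second(tokens_per_hour: int = TOKENS_PER_HOUR_OF_VIDEO) -> float:
    """Average number of tokens per second of video."""
    return tokens_per_hour / SECONDS_PER_HOUR


def video_tokens(duration_seconds: float,
                 tokens_per_hour: int = TOKENS_PER_HOUR_OF_VIDEO) -> int:
    """Estimated tokens for a clip, ASSUMING linear scaling with duration."""
    return round(duration_seconds * tokens_per_hour / SECONDS_PER_HOUR)


if __name__ == "__main__":
    print(f"{tokens_per_second():.1f} tokens per second of video")
    print(f"{video_tokens(90)} tokens for a 90-second clip")
```

Under that assumption, an hour of video works out to roughly 278 tokens per second, so even a 90-second clip would occupy about 25,000 tokens of context.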

Industry partners are already aligning with Rubin CPX. Cursor plans to integrate the GPU to accelerate code generation in its AI-driven development environment. Runway will adopt it to scale generative video production for cinematic content and visual effects, while Magic expects the GPU to handle 100-million-token context windows for autonomous software engineering agents.

Rubin CPX will be supported across the NVIDIA AI stack, including the Dynamo inference platform, Nemotron multimodal models, and NVIDIA AI Enterprise with NIM microservices. It also plugs into the broader CUDA-X ecosystem, which spans more than 6 million developers and nearly 6,000 CUDA applications.

NVIDIA Rubin CPX is expected to be available by the end of 2026.