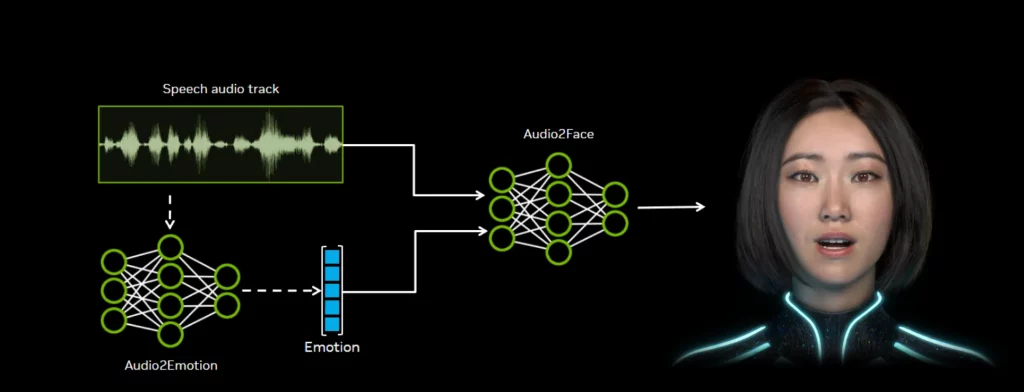

NVIDIA is opening up its Audio2Face technology, releasing the models, SDK, and training framework to the public in a bid to accelerate the adoption of AI-powered avatars across gaming, media, and customer service. Audio2Face uses generative AI to transform audio input into realistic facial animations, enabling accurate lip-sync and expressive emotions for digital characters.

The system works by analyzing phonemes and intonation from speech, then generating animation data that can be mapped onto a character’s facial poses. This data can be applied to pre-scripted content or streamed in real time, allowing for dynamic, interactive avatars. By open-sourcing both the technology and the tools to train custom models, Team Green is inviting developers, students, and researchers to build on its foundation and adapt it for diverse use cases.
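To make "animation data mapped onto a character's facial poses" more concrete, here is a minimal sketch of the common linear blendshape model: each frame of animation is a set of named weights, and the posed face is the neutral mesh plus each pose's offsets scaled by its weight. This is an illustration under stated assumptions, not the Audio2Face SDK API. The function `apply_blendshapes`, the pose names `jawOpen` and `mouthSmile`, and the two-vertex toy mesh are hypothetical, and the audio-to-weights model itself is omitted.

```python
from typing import Dict, Iterable, Iterator, List, Tuple

Vec3 = Tuple[float, float, float]


def apply_blendshapes(
    neutral: List[Vec3],
    deltas: Dict[str, List[Vec3]],
    weights: Dict[str, float],
) -> List[Vec3]:
    """Linear blendshape model: neutral + sum(w_i * delta_i) per vertex.

    Hypothetical helper for illustration; weights are clamped to [0, 1].
    """
    result = [list(v) for v in neutral]
    for name, w in weights.items():
        w = min(max(w, 0.0), 1.0)  # clamp each weight to the usual [0, 1] range
        for i, (dx, dy, dz) in enumerate(deltas[name]):
            result[i][0] += w * dx
            result[i][1] += w * dy
            result[i][2] += w * dz
    return [tuple(v) for v in result]


def animate(
    neutral: List[Vec3],
    deltas: Dict[str, List[Vec3]],
    frames: Iterable[Dict[str, float]],
) -> Iterator[List[Vec3]]:
    """Pose the mesh frame by frame.

    `frames` can be a pre-computed list (scripted content) or a live
    generator (streaming), since both are consumed one frame at a time.
    """
    for weights in frames:
        yield apply_blendshapes(neutral, deltas, weights)


# Toy two-vertex "face" with two hypothetical poses.
neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
deltas = {
    "jawOpen": [(0.0, -1.0, 0.0), (0.0, 0.0, 0.0)],
    "mouthSmile": [(0.0, 0.0, 0.0), (0.5, 0.5, 0.0)],
}
frames = [{"jawOpen": 0.0, "mouthSmile": 0.0}, {"jawOpen": 0.5, "mouthSmile": 1.0}]

for mesh in animate(neutral, deltas, frames):
    print(mesh)
```

Because `animate` only consumes one frame at a time, the same code path serves both the pre-scripted and real-time cases the article describes. A production pipeline would also handle smoothing, retargeting between rigs, and per-character pose sets.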

Several industry players already use Audio2Face in production. Reallusion has integrated it into its 3D character platform, enhancing workflows across iClone, Character Creator, and AccuLip. Game studios are also adopting the technology: Survios used it to streamline facial capture in Alien: Rogue Incursion Evolved Edition, while The Farm 51 used it in Chernobylite 2: Exclusion Zone to cut animation time and deliver more authentic character performances.

Other adopters include Convai, Codemasters, GSC Games World, Inworld AI, NetEase, Perfect World Games, Streamlabs, and UneeQ Digital Humans.

By open-sourcing Audio2Face, NVIDIA aims to foster a community-driven ecosystem around AI facial animation. Developers are encouraged to join its dedicated Discord community to collaborate and share advancements, as the company looks to make lifelike digital characters more accessible across industries.