Training and deploying your own AI model can be an arduous task. As part of the democratization of AI, NVIDIA has made AI usage and deployment much easier. Even a software developer with no background in AI can use NVIDIA’s latest microservices, and the pre-trained AI models they provide, to build what they need.

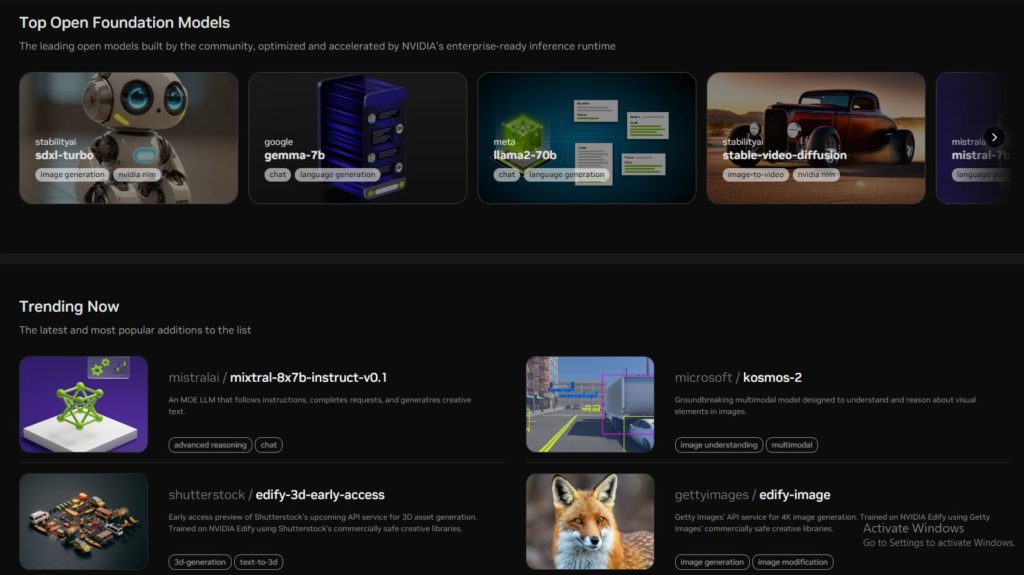

At GTC 2024, NVIDIA launched dozens of enterprise-grade generative AI microservices that businesses can use. These include NVIDIA NIM, CUDA-X and NeMo Retriever microservices.

NVIDIA NIM (NVIDIA Inference Microservices) is a platform designed to streamline the deployment of AI models at scale. It provides optimized inference microservices, which are lightweight, containerized units that enable efficient and scalable deployment of AI models.

NVIDIA NIM simplifies the deployment process, allowing developers and data scientists to focus on building and optimizing AI models rather than managing complex infrastructure.

NVIDIA NIM integrates with Kubernetes, a popular container orchestration platform, to provide a scalable and flexible infrastructure for deploying AI models. It also supports models from multiple deep learning frameworks and formats, including TensorFlow, PyTorch, and ONNX, ensuring compatibility with a wide range of AI models.

Use cases for NVIDIA NIM include real-time image recognition, natural language processing, and recommendation systems, which shows its versatility across different AI applications.
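To make the idea of an inference microservice concrete, here is a minimal sketch of how an application might call one over HTTP. It assumes a NIM container for a language model is already running locally and exposes an OpenAI-compatible chat endpoint. The URL, port, and model name are placeholders chosen for illustration, not values taken from this article, so check the documentation of the specific microservice before relying on them.

```python
import json
import urllib.request

# Assumptions (placeholders, not from the article): a NIM container is
# serving an OpenAI-compatible API at this address, under this model name.
BASE_URL = "http://localhost:8000/v1"
MODEL = "example/model-name"


def build_chat_request(prompt, model=MODEL, base_url=BASE_URL, max_tokens=128):
    """Build (but do not send) a chat-completion HTTP request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt):
    """Send the request and return the text of the first reply."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=30) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With a service running, calling `ask("What is an inference microservice?")` would return the model's reply as a string. The application code only needs an HTTP client; the model, runtime, and GPU setup stay inside the container.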

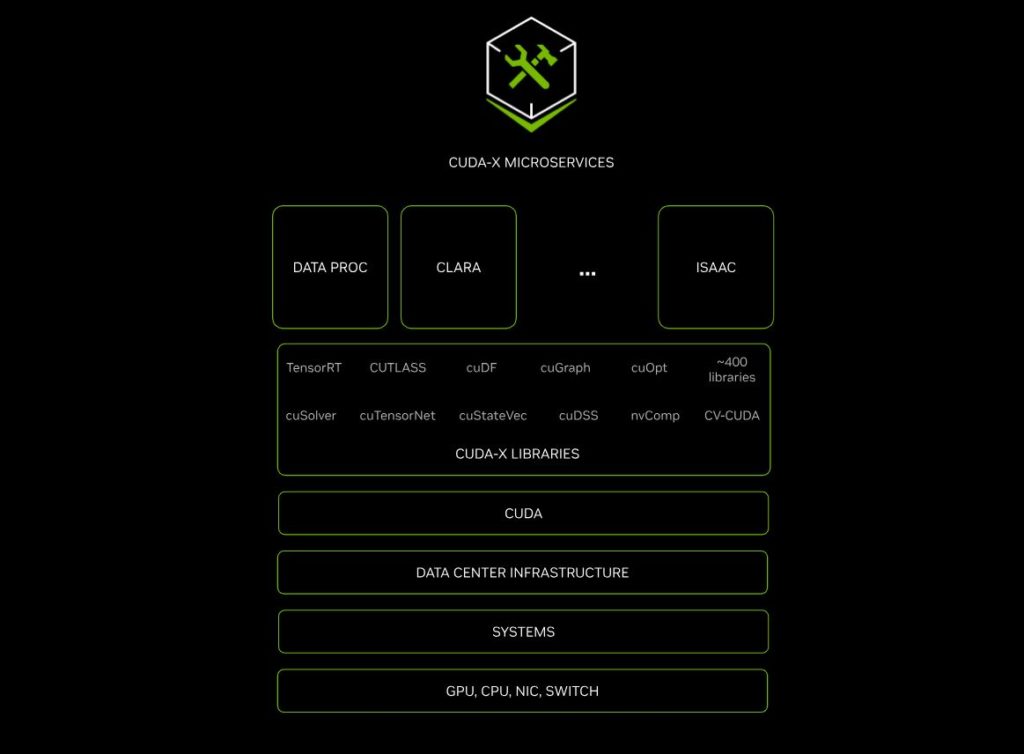

CUDA-X microservices, on the other hand, are developer tools, GPU-accelerated libraries, and technologies packaged as cloud APIs. They are easy to integrate, customize, and deploy in data processing, AI, and HPC applications.

CUDA-X microservices include NVIDIA® Riva for customizable speech and translation AI, NVIDIA Earth-2 for high-resolution climate and weather simulations, NVIDIA cuOpt™ for routing optimization and NVIDIA NeMo™ Retriever for responsive retrieval-augmented generation (RAG) capabilities for enterprises.

NeMo Retriever microservices let developers link their AI applications to their business data — including text, images and visualizations such as bar graphs, line plots and pie charts — to generate highly accurate, contextually relevant responses. With these RAG capabilities, enterprises can offer more data to copilots, chatbots and generative AI productivity tools to elevate accuracy and insight.
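The retrieval-augmented generation pattern described above can be illustrated with a toy sketch: find the business document most relevant to a question, then place it in the prompt sent to a model. This is not NeMo Retriever's API. The word-count similarity, the function names, and the sample documents are all assumptions made for illustration; production retrievers typically use learned embeddings and a vector index.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Assemble a prompt that grounds the model in retrieved context."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical business data, invented for this example.
DOCS = [
    "Q3 revenue grew 12 percent year over year.",
    "The support team closed 950 tickets in March.",
    "Headcount in engineering is 240.",
]
```

Calling `build_prompt("How many tickets did support close?", DOCS)` produces a prompt containing the support-ticket sentence, which a generative model could then use to answer from company data rather than from its training data alone.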

According to NVIDIA, these microservices can cut deployment times from weeks to minutes. “Established enterprise platforms are sitting on a goldmine of data that can be transformed into generative AI copilots,” said Jensen Huang, founder and CEO of NVIDIA. “Created with our partner ecosystem, these containerized AI microservices are the building blocks for enterprises in every industry to become AI companies.”

Availability

Developers can experiment with NVIDIA microservices at ai.nvidia.com at no charge. Enterprises can deploy production-grade NIM microservices with NVIDIA AI Enterprise 5.0 running on NVIDIA-Certified Systems and leading cloud platforms.

For more information, watch the replay of Huang’s GTC keynote and visit the NVIDIA booth at GTC, held at the San Jose Convention Center through March 21.