In our introductory article on the NVIDIA Jetson Nano, we covered what was inside the developer kit, as well as some key specifications of the Single Board Computer. With such impressive hardware, the Jetson Nano also carries high expectations. In this second part of the series, we run a few tests and demos to understand just how capable the NVIDIA Jetson Nano is.

Maxwell Architecture

If you take a closer look at the Jetson Nano, you will realise that its GPU is based on the Maxwell architecture. This architecture is not particularly new: it was first introduced in 2014 in some consumer graphics cards. The second-generation Maxwell architecture is also the basis for the GeForce GTX 980 graphics card. However, do not assume that the Jetson Nano performs like the GTX 980. The Jetson Nano comes with 128 CUDA cores, while the GTX 980 has 16 times as many, at 2048 CUDA cores.

A Quick Introduction to Artificial Neural Networks

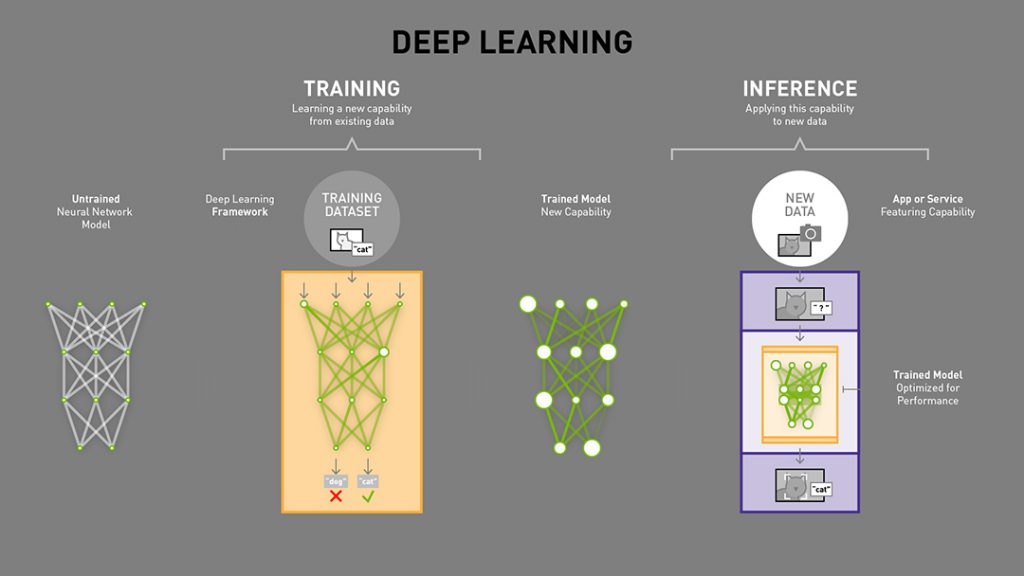

For Machine Learning, and Neural Networks specifically, AI computation comes in two parts: training and inference.

The compute-heavy portion comes during training, where a “Trained Model” is built over many iterations of learning. Artificial Neural Networks are modelled loosely on how a human brain works: as training proceeds, the network adjusts itself and gets better at reaching the right conclusion. Just like a human brain, it may never be 100% perfect, but it can reach a certain level of accuracy. Such trained models can then be transferred and “installed” on inference engines, where conclusions are drawn directly from new input.
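To make the split between training and inference concrete, here is a minimal sketch in plain Python. It is not how any particular framework or the Jetson Nano does it: the single-neuron model, the toy logical-OR dataset and the `train` and `infer` function names are all assumptions made for illustration.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(samples, labels, epochs=2000, lr=0.5, seed=0):
    """Training: many passes over the data, nudging the weights each time."""
    rng = random.Random(seed)
    weights = [rng.uniform(-0.5, 0.5) for _ in samples[0]]
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            err = p - y  # gradient of the log-loss with respect to z
            weights = [w - lr * err * xi for w, xi in zip(weights, x)]
            bias -= lr * err
    # The returned dictionary plays the role of the "trained model".
    return {"weights": weights, "bias": bias}


def infer(model, x):
    """Inference: a single forward pass; the weights are never modified."""
    z = sum(w * xi for w, xi in zip(model["weights"], x)) + model["bias"]
    return sigmoid(z) >= 0.5


# Hypothetical toy dataset: logical OR of two inputs.
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 1, 1, 1]

model = train(samples, labels)                    # the expensive part, done once
predictions = [infer(model, x) for x in samples]  # the cheap part, done repeatedly
print(predictions)
```

The training loop touches every sample thousands of times, while each call to `infer` is a handful of multiplications. Real networks have millions of weights, but the asymmetry is the same.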

The NVIDIA Jetson Nano is designed and engineered to take a trained model and quickly reach conclusions through inference. It therefore does not need as much compute performance as training does, nor does it “learn”. The heavy lifting of training new neural network models has already been done elsewhere. What sets the NVIDIA Jetson Nano apart is its support for CUDA (Compute Unified Device Architecture). CUDA is a parallel programming framework by NVIDIA that uses the GPU for parallel computation. Whereas most CPUs have limited capabilities when it comes to parallel computing, GPUs do it very well. NVIDIA has capitalized on this aspect of its GPUs and built an AI business around it.
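The data-parallel idea behind CUDA can be sketched without a GPU. The example below is plain Python, not the CUDA API: `saxpy_kernel` and `launch` are hypothetical names, and the loop inside `launch` only emulates what a GPU would run concurrently across its cores.

```python
def saxpy_kernel(thread_id, a, x, y, out):
    """Work done by one 'thread': it handles exactly one array element."""
    if thread_id < len(x):  # bounds guard, as real kernels typically include
        out[thread_id] = a * x[thread_id] + y[thread_id]


def launch(kernel, n_threads, *args):
    """CPU stand-in for a kernel launch.

    On a GPU the iterations below would execute in parallel;
    here they simply run one after another.
    """
    for thread_id in range(n_threads):
        kernel(thread_id, *args)


x = [1.0, 2.0, 3.0, 4.0]
y = [10.0, 20.0, 30.0, 40.0]
out = [0.0] * len(x)

# 128 threads mirrors the Nano's CUDA core count; the surplus threads
# fall through the bounds guard and do nothing.
launch(saxpy_kernel, 128, 2.0, x, y, out)
print(out)
```

Because each element is computed independently of the others, the work divides cleanly across many cores. Neural network inference consists largely of operations with this shape.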

In fact, most AI and Machine Learning frameworks available right now support CUDA for accelerated training and inference. Moreover, since GeForce graphics cards are made for consumers, CUDA-capable hardware is readily available to a mass audience. The Jetson Nano therefore excels at inference on trained models, because it has a CUDA-supported GPU to greatly accelerate the process. Other Single Board Computers on the market, such as the Raspberry Pi, simply aren’t made for such compute scenarios.

With CUDA, NVIDIA has also given the Jetson Nano broad support for AI software libraries and Software Development Kits (SDKs). This is also why the Jetson Nano supports the most popular deep learning frameworks, such as TensorFlow, PyTorch, MXNet, Keras and Caffe.

NVIDIA DeepStream SDK Demo

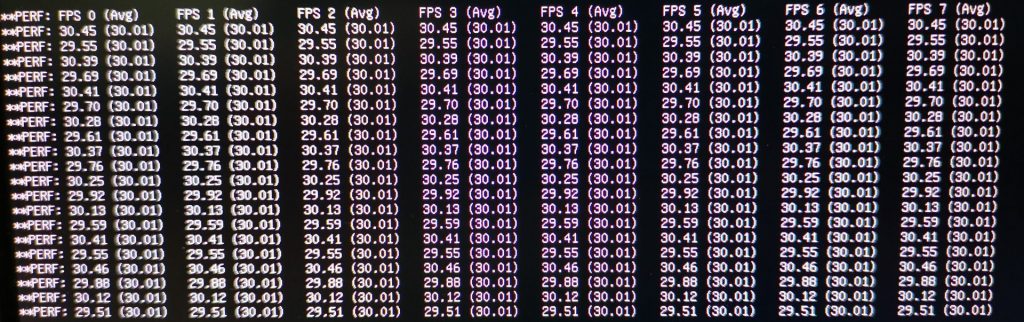

In the NVIDIA DeepStream SDK demo, the Jetson Nano showed us what a GPU can really do when processing live videos and images. The NVIDIA DeepStream SDK is a set of software and tools for developers who want to build video analytics applications at a larger scale. In the demo, we saw the Jetson Nano process eight concurrent video streams with ease, each at 1080p resolution and 30 FPS. Incredibly, there was no noticeable drop in frame rate while neural network inference ran on every one of these streams. The Jetson Nano was able to draw bounding boxes around the various objects in all eight video feeds at once. Definitely impressive!

Even with inference running constantly on every stream, the frame rate held almost steady. This shows how well the Jetson Nano handles such workloads with the DeepStream SDK.
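For a sense of scale, the demo's figures can be turned into a rough workload estimate. This is back-of-the-envelope arithmetic rather than a measurement: it assumes 1080p means 1920×1080 pixels and, for the time budget, that frames are handled one at a time rather than in batches.

```python
STREAMS = 8
WIDTH, HEIGHT = 1920, 1080  # assumed pixel dimensions of a 1080p frame
FPS = 30

frames_per_second = STREAMS * FPS                       # frames arriving each second
pixels_per_second = frames_per_second * WIDTH * HEIGHT  # raw pixels to examine
budget_ms_per_frame = 1000 / frames_per_second          # time per frame if done serially

print(f"{frames_per_second} frames/s")
print(f"{pixels_per_second:,} pixels/s")
print(f"{budget_ms_per_frame:.2f} ms per frame")
```

Under these assumptions the board has to keep up with 240 frames, or close to half a billion pixels, every second, which leaves roughly 4 ms per frame.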

Performance Benchmarks

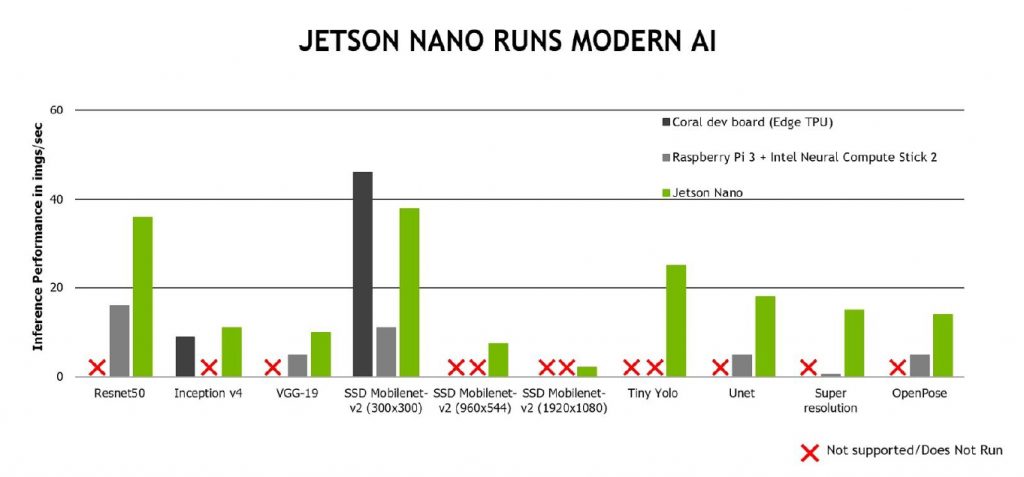

There have been efforts in the industry to bring AI compute capabilities to Single Board Computers. Intel’s Neural Compute Stick, for example, can be attached to a Raspberry Pi so that it can run neural network inference with some form of acceleration. However, we saw that this solution produced rather poor results, probably due in part to the low bandwidth of the USB 2.0 interface that it uses. Even though it does bring some acceleration to the Raspberry Pi, we still find the solution lacking, as the Raspberry Pi was not purpose-built for this kind of workload in the first place.

On the other hand, the Coral Dev Board was also recently introduced to the market. It uses an Application Specific Integrated Circuit (ASIC) for neural network processing. However, being an ASIC also means that it is not compatible with many models. As such, even though there are cases where the Coral Dev Board performs better than the Jetson Nano, most of the time it could not run the model at all.

Conclusion

Having gone through the applications and performance of the NVIDIA Jetson Nano, we can clearly see where this product stands in the market. Being considerably inexpensive, the Jetson Nano could open up many possibilities for developers who are exploring the deployment of edge AI computing.

In our next article, we will look at how to take advantage of the NVIDIA Jetson Nano to develop some useful AI applications.

Until now deep-stream sdk for nano hasn’t been released in the Nvidia website. Can you please share which sdk you used.