Expansion Comes with Public Beta of NVIDIA T4 GPUs on Google Cloud Platform.

SINGAPORE—January 17, 2019—NVIDIA and Google today announced that NVIDIA Tesla T4 GPUs are available in a public beta launch to Google Cloud Platform customers in more regions around the world, including for the first time Brazil, India, Japan, and Singapore.

“The T4 joins our NVIDIA K80, P4, P100, and V100 GPU offerings, providing customers with a wide selection of hardware-accelerated compute options. The T4 is the best GPU in our product portfolio for running inference workloads. Its high-performance characteristics for FP16, INT8, and INT4 allow you to run high-scale inference with flexible accuracy/performance tradeoffs that are not available on any other accelerator,” said Chris Kleban, product manager, Google Cloud.

NVIDIA T4 GPUs are designed to accelerate diverse cloud workloads, including high-performance computing, deep learning training and inference, machine learning, data analytics, and graphics. NVIDIA T4 is based on NVIDIA’s new Turing architecture and features multi-precision Turing Tensor Cores and new RT Cores.

Each T4 is equipped with 16GB of GPU memory and delivers up to 260 TOPS of INT4 computing performance.

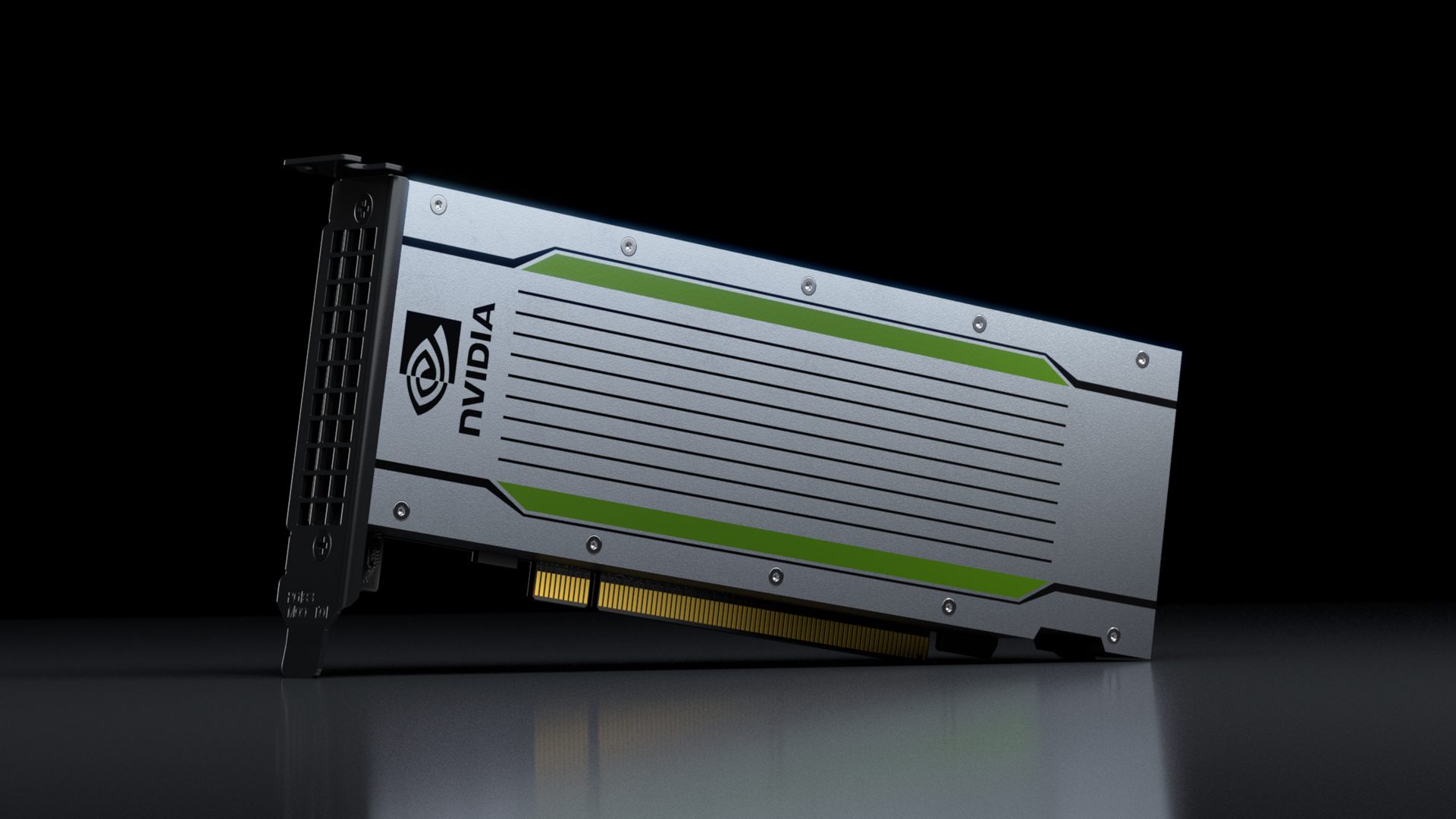

On Google Cloud Platform, the new T4 GPUs (above) are available for as little as US$0.29 per hour per GPU on Preemptible VM instances. “On-demand instances start at US$0.95 per hour per GPU, with up to a 30 percent discount with sustained use discounts,” Kleban said.
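As a rough illustration of the pricing above, the Python sketch below estimates per-GPU cost from the quoted hourly rates. It assumes the full 30 percent sustained use discount applies uniformly to an on-demand run, which simplifies how sustained use discounts are actually calculated; the helper name `estimate_t4_cost` and the 730-hour month are hypothetical, and real prices vary by region and over time.

```python
# Hourly per-GPU rates (US$) quoted in the announcement; illustrative only.
PREEMPTIBLE_RATE = 0.29
ON_DEMAND_RATE = 0.95
MAX_SUSTAINED_USE_DISCOUNT = 0.30  # "up to" 30 percent; assumed applied in full


def estimate_t4_cost(hours: float, gpus: int = 1, preemptible: bool = False,
                     full_sustained_discount: bool = False) -> float:
    """Hypothetical helper: rough T4 GPU cost in US$ for a number of hours."""
    if hours < 0 or gpus < 0:
        raise ValueError("hours and gpus must be non-negative")
    if preemptible:
        rate = PREEMPTIBLE_RATE
    else:
        rate = ON_DEMAND_RATE
        if full_sustained_discount:
            # Simplification: real sustained use discounts depend on usage level.
            rate *= 1 - MAX_SUSTAINED_USE_DISCOUNT
    return round(rate * hours * gpus, 2)


if __name__ == "__main__":
    month = 730  # assumed number of hours in an average month
    print(estimate_t4_cost(month))                                # on-demand
    print(estimate_t4_cost(month, full_sustained_discount=True))  # max discount
    print(estimate_t4_cost(month, preemptible=True))              # preemptible
```

Under these assumptions, a single T4 running for a full month costs roughly US$693.50 on demand, about US$485.45 with the maximum sustained use discount, and about US$211.70 on preemptible instances.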

The Turing architecture introduces real-time ray tracing, which enables a single GPU to render visually realistic 3D graphics and complex professional models with physically accurate shadows, reflections, and refractions. Turing’s RT Cores accelerate ray tracing and are used by NVIDIA’s RTX ray-tracing technology and by APIs such as Microsoft DXR, NVIDIA OptiX™, and Vulkan ray tracing to deliver a real-time ray tracing experience. Google is also supporting virtual workstations on the T4 instances, enabling designers and creators to run the next generation of rendering applications from anywhere and on any device.

The Google Cloud AI team also published an in-depth technical blog to help developers make the most of T4 GPUs and the NVIDIA TensorRT platform. In this post, the team describes how to run deep learning inference on large-scale workloads with NVIDIA TensorRT 5 running on NVIDIA T4 GPUs on Google Cloud Platform.

An ideal place to download software to run on the new T4 instance type is NGC, NVIDIA’s catalogue of GPU-accelerated software for AI, machine learning, and HPC. NGC features a large variety of ready-to-run containers with GPU-optimised software such as the TensorFlow AI framework, RAPIDS for accelerated data science, the above-mentioned NVIDIA TensorRT, ParaView with NVIDIA OptiX, and much more.

In November, Google Cloud became the first cloud vendor to offer the next-generation NVIDIA T4 GPUs, through a private alpha that NVIDIA CEO Jensen Huang highlighted on stage at SC 2018.

Users can begin using the T4 GPUs today.


About NVIDIA

NVIDIA’s (NASDAQ: NVDA) invention of the GPU in 1999 sparked the growth of the PC gaming market, redefined modern computer graphics and revolutionised parallel computing. More recently, GPU deep learning ignited modern AI — the next era of computing — with the GPU acting as the brain of computers, robots and self-driving cars that can perceive and understand the world. More information at http://nvidianews.nvidia.com/.