Most people already assume NVIDIA systems are the best at AI inference, but hey, at least the new MLPerf results prove that they are.

The official announcement came after NVIDIA TensorRT-LLM, running on NVIDIA Hopper GPUs, achieved nearly 3 times better inference results on the GPT-J LLM than it did just half a year ago.

And yes, I know, Blackwell will break this number in the future, but we still gotta give Hopper the credit it deserves, because the H200 Tensor Core GPUs also delivered in the latest benchmark, which is based on the 70B-parameter Llama 2 model, already more than 10x larger than the GPT-J LLM!

On that larger model, TensorRT-LLM is capable of producing up to 31K tokens per second, which includes a 14% uplift thanks to a custom thermal solution that gives the GPUs more thermal headroom for stronger overall performance.

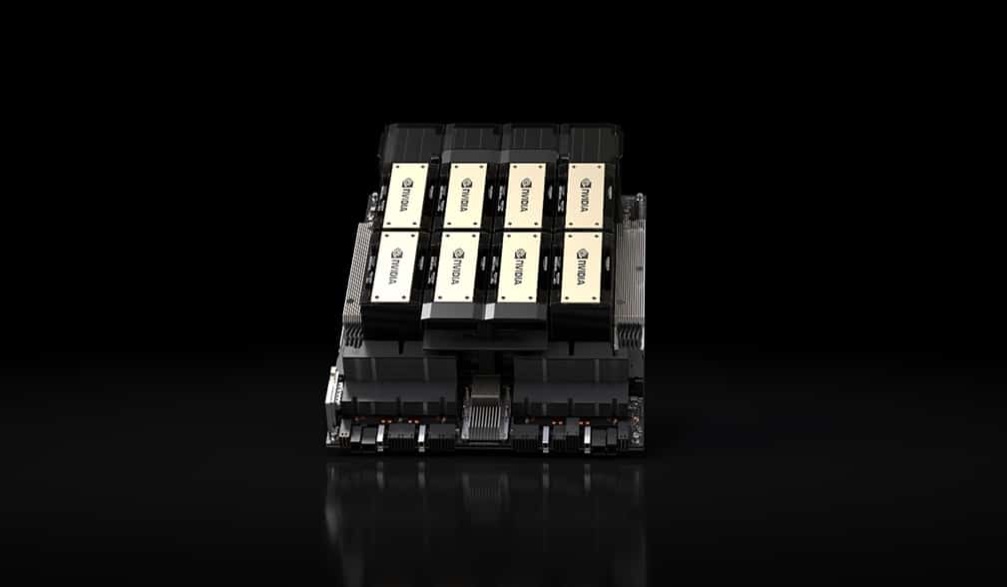

With 141GB of HBM3e running at 4.8TB/s, which is 76% more capacity and 43% more bandwidth than the H100, the H200 is so powerful that the entire Llama 2 70B model can be loaded and run for inference on just a single GPU. And don't forget it can be combined with NVIDIA Grace CPUs to form the GH200 Superchip for even more power.
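For anyone who wants to sanity-check those percentages, here is a quick back-of-the-envelope sketch. The H200 figures come from the paragraph above; the H100 figures (80GB and 3.35TB/s, the SXM variant) and the bytes-per-parameter values are assumptions added for illustration, and the helper names are made up for this example. The footprint estimate counts model weights only and ignores KV cache and activations, so treat it as a rough lower bound rather than a real deployment calculation.

```python
# H200 numbers are from the article; H100 numbers are ASSUMED
# (80 GB, 3.35 TB/s for the SXM part) and are not stated in the article.
H200_MEMORY_GB = 141
H200_BANDWIDTH_TBS = 4.8
H100_MEMORY_GB = 80        # assumption
H100_BANDWIDTH_TBS = 3.35  # assumption


def percent_increase(new: float, old: float) -> float:
    """Return how much larger `new` is than `old`, in percent."""
    return (new / old - 1.0) * 100.0


def weights_footprint_gb(params_billions: float, bytes_per_param: float) -> float:
    """Rough weights-only footprint in GB (ignores KV cache and activations)."""
    return params_billions * bytes_per_param


capacity_gain = percent_increase(H200_MEMORY_GB, H100_MEMORY_GB)
bandwidth_gain = percent_increase(H200_BANDWIDTH_TBS, H100_BANDWIDTH_TBS)
print(f"capacity: +{capacity_gain:.0f}%, bandwidth: +{bandwidth_gain:.0f}%")

# Hypothetical precisions: 2 bytes/param (FP16) and 1 byte/param (FP8).
for label, nbytes in (("FP16", 2), ("FP8", 1)):
    need = weights_footprint_gb(70, nbytes)
    print(
        f"Llama 2 70B at {label}: ~{need:.0f} GB of weights, "
        f"fits in H200: {need <= H200_MEMORY_GB}, "
        f"fits in H100: {need <= H100_MEMORY_GB}"
    )
```

Under these assumptions, the capacity and bandwidth gains round to the 76% and 43% quoted above, and the weights of a 70B-parameter model at 2 bytes per parameter squeeze into 141GB but not into 80GB.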

For more info on how NVIDIA is doing this, check out the official blog post.